Archive for the ‘SOA’ Category

Christmas gift for the cubicle developer

Thanks to Phil Windley for pointing this one out. I’d like one of these USB missile launchers for Christmas as well.

Update: There are actually two different guns available according to Froogle; here’s an image of the second one.

You can find the first one at ThinkGeek for $39.99 and the second one at Vavolo.com (other stores were out of stock).

SOA and Outsourcing

Joe McKendrick recently posted some comments on the impact of SOA on outsourcing. He admits that he’s been going back and forth on the question, and provides some insight from Ken Vollmer of Forrester and Sanjay Kalra on both sides.

My own opinion is that an appropriately constructed SOA allows an organization to make more appropriate outsourcing decisions. First, there are two definitions of outsourcing to deal with here. Joe’s article primarily focuses on outsourcing development efforts. Odds are, however, that code still winds up being deployed inside the firewall of the enterprise. The other definition of outsourcing would include both the development and the execution and management of the system, more along the lines of SaaS.

SOA is all about boundaries. Services are placed at boundaries in the systems that make sense to be independent from other components. A problem with IT environments today is that those boundaries are poorly defined. There is redundancy in the processing systems, tight coupling between user interfaces, business logic, and databases, etc. An environment like this makes it difficult to be successful with outsourcing. If the definition of the work to be performed is vague, it becomes difficult to ensure that the work is properly done. With SOA, the boundaries get defined properly. At this point, now the organization can choose:

- To implement the logic behind that boundary on its own, knowing that it may be needed for a competitive advantage or requires intimate knowledge of the enterprise;

- To have a third party implement the logic;

- To purchase a product that provides the logic.

By having that boundary appropriately defined, opportunities for outsourcing are thus more easily identified, and can have a higher chance for success. It may not mean any more or any less outsourcing, but it should mean a higher rate of success.

Portfolio Management and SOA

The title of this blog is “outside the box.” The reason I chose that title is because I think it captures the change in thinking that must occur to be successful with SOA. It’s my opinion that most enterprises are primarily doing “user-facing” projects. That is, the entire project is rooted in the delivery of some component that interacts with an end user. Behind that user interface, there’s a large amount of coding going on, but ultimately, it’s all about what that end user sees. This poses constraints on the project in terms of what can be done. Presuming a standard N-tier architecture, the first thing behind that UI from a technical standpoint is the business tier, or better stated from an SOA perspective, the business service tier. In order to have these services provide enterprise value, rather than project value, the project team must think outside the box. Unfortunately, that means looking for requirements outside of the constraints that have been imposed, a project manager’s worst nightmare.

So how should this problem be addressed? The problem lies at the very beginning: the project definition process. Projects need to have scope. That scope establishes constraints on the project team. Attempts to go outside those constraints can put the project delivery at risk. In order to do things right, the initial constraints need to be set properly. The first step would be to never tie a project delivering a user interface with a project delivering services. Interestingly, this shows how far the loose coupling must go! Not only should the service consumer (the UI) be loosely coupled from the service provider in a technical sense, it should also be loosely coupled in a project management sense! How does this happen? Well, this now bubbles up to the project definition process, which is typically called IT portfolio management. Portfolio management is part of the standard definition of IT Governance, and it’s all about picking the projects to do and funding them appropriately. Therefore, the IT governance committee that handles portfolio management needs to be educated in SOA. The Enterprise Architects must deliver a Service Roadmap to that group so it knows what services are needed for the future. The committee can then ensure that projects to create those services happen at the right time and are not inappropriately bundled with service consumers. Such bundling leads to a conflict of interest and, ultimately, a service that may not meet the needs of the enterprise.

Thrown under the enterprise service bus

I’ve recently been involved in a number of discussions around the role of an ESB. My “Converging in the middle” provided a number of my thoughts on the subject, but it focused exclusively on the things that belong in the middle. The things in the middle aren’t used to build services, but are used to connect the service consumer and the service provider. There will always be a need for something in the middle, whether it’s simply the physical network, or more intelligent intermediaries that handle load balancing, version management, content-based routing, and security. These things are externalized from the business logic of the consumer and the provider, and enforced elsewhere. It could be an intelligent network, à la a network appliance, or it could be a container-managed endpoint, whether it’s .NET, Java EE, a WSM agent, an ESB agent or anything else. There are people who rail against the notion of the Intelligent Network, and there are people who are huge proponents of the intelligent network. I think the common ground is that of the intelligent intermediary, whether done in the network or at the endpoints. The core concerns are externalized away from the business logic of the consumer and provider.

This brings me to the subject line of this entry. I came across this quote from David Clarke of CapeClear on Joe McKendrick’s SOA In Action blog:

“We consider ESB the principal container for business logic. This is next generation application server.”

What happened to separation of concerns? In the very same entry, Joe quoted Dave Chappell of Progress Software/Sonic saying:

ESB as a platform [is] “used to connect, mediate and control the interactions between a diverse set of applications that are exposed through the bus using service level interfaces.”

To this, I say you can’t have your cake and eat it too, yet this is the confusion currently being created. The IT teams are being thrown under the bus trying to figure out appropriate use of the technology. The problem is that the ESB products on the market can be used to both connect consumers and providers and build new services. Orchestration creates new services. It doesn’t connect a provider and a consumer. It does externalize the process, however, which is where the confusion begins. On the one hand, we’re externalizing policy enforcement and management of routing, security, etc. On the other hand, we’re externalizing process enforcement and management. Just because we’re externalizing things doesn’t mean it all belongs in one tool.

Last point: Service hosting and execution clearly is an important component of the overall SOA infrastructure. I think traditional application servers for Java EE or .NET are too flexible for the common problems many enterprises face. There definitely is a market for a higher level of abstraction that allows services to be created through a visual environment. This has had many names: EAI, BPM, and now ESB. These tools tend to be schema-driven, taking a data-in, data-out approach, allowing the manipulation of an XML document to be done very efficiently. Taking this viewpoint, the comment from David Clarke actually makes sense, if you only consider the orchestration/service building capabilities of their product. Unfortunately, this value is getting lost in all the debate because it’s being packaged together with the mediation capabilities, which have struggled to gain mindshare since they are more operationally focused than developer focused. A product sold on those capabilities isn’t as attractive to a developer. Likewise, a product that emphasizes the service construction capabilities may get implemented without regard for how part of it can be used as a mediation framework, because the developers aren’t concerned about the externalization of those capabilities.

The only way this will change is if enterprise practitioners make clear the capabilities they need before they go talk to the vendors, rather than letting the vendors tell them what they need and leaving them to struggle to map it back to their organization. I hope this post helps all of you in that effort. Feel free to contact me with questions.

SOA Funding

In his eBizQ SOA In Action blog, Joe McKendrick presents some comments from industry experts on the funding of SOA. He starts the conversation with the question:

Who pays for SOA, at least initially? Should IT pay? Should the business unit that originally commissioned the service bear the initial development costs? What motivation is there for a business unit that spent $500,000 to build a set of services to share it freely across the rest of the enterprise?

First, this isn’t an SOA problem. SOA may bring more attention to the problem because more things (theoretically) should be falling into the “shared” category instead of the domain specific category. Reusable services are not the first technology to be shared, however. Certainly, your enterprise leverages shared networking and communication infrastructure. You likely share databases and application servers, although the scope of the sharing may be limited within a line of business or a particular data center. Your identity management infrastructure is likely shared across the enterprise, at least the underlying directories supporting it.

To Joe’s question about the business unit that originally commissioned the service: clearly, that service is going to be written one way or another. The risk is not in the service being unfunded, but that the service is designed only for the requirements of the project that identified the initial need. Is this a problem with the funding model, or is it a problem with the project definition model? What if the project had been cleanly divided into the business unit facing components (user interface) under one project and each enterprise service under its own project? In this scenario, the service project can have requirements outside of that single consuming application and a schedule independent of, but synchronized with, that consuming application.

If this is done, the IT Governance process can now look at the service project independent from the consuming application project. If the service project has immediate benefits to the enterprise, it should be funded out of enterprise dollars to which each business unit contributes (presuming the IT Governance process is conducive to this approach). If the service project doesn’t have immediate benefits to the enterprise, then it may need to be funded from the budgets of the units that are receiving benefits. It will likely require changes to support the needs of the rest of the enterprise in the future, but those changes would be funded by the business units requiring those changes. Yes, this may impact the existing consumers. Anyone who thinks services can be deployed once and never touched will be sadly mistaken. You should plan for your services to change, and have a clearly communicated change management strategy with release schedules and prior version support.

While I certainly understand that funding can be a major concern in many organizations, I also think that IT needs to take it upon itself to strive to do the right thing for the enterprise while working within the constraints that the project structure has created. If a project has identified a need for a service, that service should always be designed as an independent entity from the consuming application, even if that application is the only known consumer. Funding is not an issue here, because the service logic needs to be written. An understanding of the business processes that created the need for the service should be required to write the application in the first place, so the knowledge on how to make the service more viable for the enterprise should not require a tremendous amount of extra effort. While studies have shown that building reusable components can be more expensive, I would argue that a mantra of reuse mandates better coding practices in general, and is how the systems should have been designed all along. Many of our problems are not that we don’t have the service logic implemented in a decent manner somewhere, but rather that the service logic was never exposed to the rest of the enterprise.

Use or reuse?

Joe McKendrick referred to a recent BEA study that said that “a majority of the largest global organizations (61%) expect no more than 30 percent of their SOA services to be eventually reused or shared across business units.” He goes on to state that 84% of “these same respondents consider service reuse is one of their most critical metrics for SOA success.” So what gives?

Neither of these statements surprises me. A case study I read on Credit Suisse and their SOA efforts (using CORBA) reported that the average number of consumers per service was 1.5. This means that there’s a whole bunch of services with only one consumer, and probably a select few with many, many consumers. So, 30% reuse sounds normal. Now, as for the critical success metric, I think the reason this is the case is that it’s one of the few quantifiable metrics. Just because it’s easy to capture doesn’t make it right, however.
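To see how an average of 1.5 consumers per service squares with roughly 30% reuse, here is a small worked example. The ten consumer counts are made up for illustration; they are not taken from the Credit Suisse case study or the BEA survey.

```python
# Hypothetical consumer counts for ten services (illustrative numbers only).
consumers_per_service = [1, 1, 1, 1, 1, 1, 1, 2, 2, 4]

# Average number of consumers across all services.
average = sum(consumers_per_service) / len(consumers_per_service)

# A service counts as "reused" here if it has more than one consumer.
reused = [n for n in consumers_per_service if n > 1]
reuse_rate = len(reused) / len(consumers_per_service)

print(f"average consumers per service: {average}")
print(f"share of services with more than one consumer: {reuse_rate:.0%}")
```

With this distribution, seven of the ten services have a single consumer, yet the average still comes out at 1.5 because a few services are shared more widely.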

First off, I don’t think that anyone would dispute that there is redundancy in the technology systems of a large enterprise. Clearly, then, the possibility exists to eliminate that redundancy and reuse some services. Is that the right thing to do, however? The projects that created those systems are over and done with, costs sunk. There may be some maintenance costs associated with them, but if they were developed in house, those costs are likely to be with the underlying hardware and software licenses, not with the internally developed code. Take legacy systems, for example. You may be sending annual payments to your mainframe vendor of choice, but you may not be paying any COBOL developer to make modifications to the application. In this situation, it’s going to be all but impossible to justify the type of analysis required to identify shared service opportunities across the board. The opportunities have to be driven from the business, as a result of something the business wants to do, rather than IT generating work for itself. If the business needs to cut costs and has chosen to get rid of the mainframe costs in favor of smaller commodity servers, great. There’s your business-driven opportunity to do some re-architecting for shared services.

The second thing that’s missing is the whole notion of use. The problem with systems today is not that they aren’t performing the right functionality. It’s that the functionality is not exposed as a service to be used in a different manner. This is an area where I introduce BPM and what it brings to the table. A business driver is improved process efficiency. Analysis of that business process may result in the application being broken apart into pieces, orchestrated by your favorite BPM engine. If that application wasn’t built with SOA in mind, there’s going to be a larger cost involved in achieving that process improvement. If the application leveraged services, even if it was the only consumer of those services, guess what? The presentation layer is properly decoupled, and the orchestration engine can be inserted appropriately at a lesser cost. That lesser cost to achieve the desired business goal is the agility we’re all hoping to achieve. In this scenario, we still only have one consumer of the service. Originally it was the application’s user interface. That may change to the orchestration engine, which receives events from the user interface. No reuse, just use.
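The “no reuse, just use” scenario can be sketched in a few lines. Everything here is hypothetical (the claim service, the form, and the process class are invented for illustration); the point is only that the service stays untouched while its single consumer changes from the user interface to an orchestration step.

```python
from typing import Callable, Dict, List

Claim = Dict[str, object]
ClaimHandler = Callable[[Claim], Dict[str, object]]


def approve_claim(claim: Claim) -> Dict[str, object]:
    """Hypothetical business service; it knows nothing about who calls it."""
    approved = float(claim["amount"]) <= 1000.0
    return {"claim_id": claim["claim_id"], "approved": approved}


class ClaimForm:
    """Stand-in for the user interface; it only knows a handler to call."""

    def __init__(self, handler: ClaimHandler) -> None:
        self._handler = handler

    def submit(self, claim: Claim) -> Dict[str, object]:
        return self._handler(claim)


class ClaimProcess:
    """Stand-in for an orchestration engine that reacts to UI events."""

    def __init__(self, service: ClaimHandler) -> None:
        self._service = service
        self.audit_log: List[object] = []

    def on_claim_submitted(self, event: Claim) -> Dict[str, object]:
        # A process step added later, without changing the service itself.
        self.audit_log.append(event["claim_id"])
        return self._service(event)


# Before: the user interface is the only consumer of the service.
direct_form = ClaimForm(approve_claim)

# After: the orchestration step is the only consumer; the UI sends it events.
process = ClaimProcess(approve_claim)
orchestrated_form = ClaimForm(process.on_claim_submitted)
```

In both wirings `approve_claim` has exactly one consumer, so a reuse metric would not move, even though the second wiring is where the process improvement happens.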

Importance of Identity

I’m currently working on a security document, and it brought to mind a topic that I’ve wondered about in the past. Why is all of the work around Web Services security user centric? Services are supposed to represent system-to-system interactions. As a result, won’t most policies be based on system identifiers rather than user identifiers?

When outlining an SOA security solution, I think an important first step is to determine what “identity” is in the context of service security. Identity will certainly include user identity, but should also include system identity. There may be other contextual information that needs to be carried along, such as originating TCP/IP address, or branch office location. Not all information may be used for access control purposes, but may be necessary for auditing or support purposes. Identity identification (doesn’t that sound great) can be quite a challenging task, and may only grow more difficult when trying to map it to a credential format, such as X.509, Kerberos, SAML, or something else.

The problem gets even more complicated when dealing with composite services. If policies are based on system identity, what system identity do you use on service requests? I think there will likely be scenarios where you want the original identity of the call chain passed through, as well as scenarios where policies are based upon the most recent consumer in the call chain.

If this wasn’t enough, you also have to consider how to represent identity on processes that are kicked off by system events. I’ve previously blogged a bit about events, but in a nutshell, I believe there is a fundamental difference between events and service requests. Events are purely information. Service requests represent an explicit request to have action taken. Events do not. Events can trigger action, and often do, but in and of themselves, they’re just information. This now poses a problem for identity. If a user performs some action that results in a business event, and some subscriber on that event performs some action as a result, what identity should be carried on that action? While the implementation may result in events and implied action, to the business side, the actions the end user took that kicked off the event may represent an explicit request for that action. In other scenarios, it might not. It is safe to say that identity should be carried on both service requests and events allowing the flexibility to choose appropriately in particular scenarios.
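One way to picture identity that travels with both service requests and events is a small context object. This is only a sketch under assumptions: the class and field names are invented for illustration and do not correspond to any credential format such as SAML or Kerberos.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple


@dataclass(frozen=True)
class IdentityContext:
    """Hypothetical identity carried on requests and events."""

    user: Optional[str] = None            # end user, if there is one
    systems: Tuple[str, ...] = ()         # call chain of systems, oldest first
    origin_address: Optional[str] = None  # kept for auditing, not access control

    def via(self, system: str) -> "IdentityContext":
        """Return a copy with another system appended to the call chain."""
        return replace(self, systems=self.systems + (system,))

    @property
    def originator(self) -> Optional[str]:
        return self.systems[0] if self.systems else None

    @property
    def immediate_consumer(self) -> Optional[str]:
        return self.systems[-1] if self.systems else None


@dataclass(frozen=True)
class BusinessEvent:
    name: str
    identity: IdentityContext


@dataclass(frozen=True)
class ServiceRequest:
    operation: str
    identity: IdentityContext


def request_from_event(event: BusinessEvent, subscriber: str,
                       operation: str) -> ServiceRequest:
    """A subscriber turns an event into a request, keeping the original identity."""
    return ServiceRequest(operation, event.identity.via(subscriber))
```

Because the whole chain is carried along, a policy can be written against the user, the originating system, or the most recent consumer, whichever fits the scenario.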

All of this should make it painfully clear why Security Architecture (which includes Identity Management in my book) is extremely important.

The importance of communication

My last two posts have actually generated a few comments. Any blogger appreciates comments because above all else, it means someone is reading it! I should improve my commenting habits on the blogs I follow, even if to say nothing more than I agree. To that end, as a courtesy to those who have commented, I recommend that all of you visit the following:

The reason I do this is because of the importance of the communication that these individuals publish as their time allows. If you follow James McGovern, a common theme is for him to ask practicing architects to share their experiences. In reality, how much of what we practice could really be considered a competitive advantage? Personally, I think it would be the majority of our efforts, not the minority. We all get better through shared experiences. It’s when those experiences are not shared for the common good that they are repeated again and again (of course, we’ll always have ignorance on top of that accounting for some amount of repeated mistakes; they’re not ALL due to lack of communication).

One blog I didn’t mention above, but would like to give special attention to here is Joe McKendrick’s SOA in Action blog. In addition to his ZDNet blog, Joe’s SOA in Action blog on eBizQ is intended to focus on the practitioners of SOA, not the vendors marketing it. So much of IT communication in the public domain is dominated by the vendors, not by the practitioners. This isn’t a knock at the vendors; they have some very smart people and put out some useful information, but I tend to think that product selection isn’t what is holding companies back from a successful SOA adoption. It’s like a big chess game. Each enterprise represents a pattern on the chess board. We must recognize the patterns in front of us and make appropriate decisions to move toward success. The factors involved in IT are far more complicated than any chess board. Wouldn’t it be great if we knew certain players in the enterprise could only move one space at a time, diagonally, or in an L? After all, governance is about generating desired behavior. If the roles and capabilities are not well communicated, desired behavior will be difficult to achieve.

As I mentioned in a previous post, I’m reading IT Governance. It would be great if Harvard Press would do a book like this on Service Oriented Architecture, with case studies riddled throughout, attempting to articulate the patterns of both the successful and the unsuccessful companies. Unfortunately, I’m not aware of any such book (leave me a comment if you are). So, in the absence of it, your best bet is to follow the blogs of my fellow practicing architects and see what you can learn!

Management and Governance

Thanks to James McGovern, Brenda Michelson, and Sam Lowe for their comments on my last post on EA and SOA. Sam’s response, in particular, got my mind thinking a bit more. He stated:

One of contacts likes to describe EA’s new role in the Enterprise as being managing SOA-enabled (business) change.

The part that caught my attention was the word managing. What’s great about this is that it’s an active word. You can’t manage change by sitting in an ivory tower. Managing is about execution. EAs not only need to define the future state using their framework of choice, but they need to put the actions in place to actually get there. Creating a PowerPoint deck and communicating it to the organization is not execution, it’s communication. While communication is extremely important, it’s not going to yield execution. Execution involves planning. Ironically, this is something that I personally have struggled with, and I’m sure many other architects do, as well. I am a big picture thinker. The detail-oriented nature of a good project manager is foreign to me. I’ve told many a manager that assigned me as a technical lead or a project architect to assign the most detail-oriented project manager to keep me in check. It may drive me nuts, but that’s what it takes to be successful.

Moving on, how does governance fit into the mix? First off, governance is not management, although the recent use of the term in SOA product marketing has certainly confused the situation. James McGovern has blogged frequently that governance is about encouraging desirable behavior. The book IT Governance by Peter Weill and Jeanne Ross states that

IT governance: Specifying the decision rights and accountability framework to encourage desirable behavior in the use of IT.

One of the five decisions associated with IT governance stated by the book is IT Architecture, the domain of the Enterprise Architect. I think the combination of decision making, strategy setting, and execution is what the EA needs to be concerned with. An EA group solely focused on decision making without having a strategy to guide them is taking a bottom up approach that may achieve consistency, but is unlikely to achieve business alignment. An EA group solely focused on strategy becomes an ivory tower that simply gets ignored. An EA group focused too much on execution may get mired in project architecture activities, losing both consistency and direction.

Added note: I just saw Tom Rose’s post discussing EA and SOA in response to my post and others. It’s a good read, and adds a lot to what I said in this post.

SOA and EA…

David Linthicum recently posted his thoughts about the Shared Insights Enterprise Architectures Conference held at the Hotel del Coronado near San Diego. David states:

The fact is I heard little about SOA during the entire conference. Even the EA magazines and vendors did not mention SAO, and I’m not sure the attendees understand the synergies between the two disciplines. In fact, I think they are one in the same; SOA at its essence is “good enterprise architecture.”

I attended this conference, and I have to agree 100% with Dave. There was a great case study from Ford, a facilitated discussion which I participated in (this was a very good idea, I thought), and Dave’s talk (I was in another session during this time), and not much more. It was mentioned here and there, but I was quite surprised at the lack of discussion around it. This was the first EA-specific conference I’d been to, so I didn’t know what to expect. I came away feeling that the EA community is a bit too disconnected from the real world. The field is still dominated by the notion of frameworks, Zachman, TOGAF, etc. It reminds me of the early days of OO and the multitude of methodologies that were available. While these frameworks and methodologies were extremely powerful, no organization could ever adopt them completely due to the huge learning curve involved. Many organizations have resentment toward their EAs as they see them as sitting up in an ivory tower somewhere pontificating the standards down on the enterprise. As with anything of this nature, there’s a little bit of truth in it. Many developers don’t understand the importance of EA.

There’s a need to bring these two worlds together. I don’t think SOA can be successful at the enterprise level without a strong EA team. At the same time, if EAs are not driving SOA, and are instead focusing on the models within their chosen framework, that won’t help either. I think that SOA has appeal at multiple levels, from the developer to the business strategist and everywhere in between. An interesting thing about the Zachman framework is that it’s built around the concept that each consumer of the information in the framework needs their own view. I believe that the core concept of SOA, services, can be shared across these views, linking them together, whether business or technical. That linkage is what’s missing today, and it’s a shame that no speaker at the conference hammered this point home. The presentations were either EA or SOA, not both. Joe McKendrick makes similar points in his blog about the recent BPM and SOA divide. It’s time to stop dividing and fighting separate battles and realize we’re all on the same team.

Converging in the middle

Scott Mark posted some comments on his blog about ESBs versus Smart Routers. This is one of my favorite subjects, and I posted some extensive comments on Scott’s blog. I wanted to go a bit further on the subject, so I decided to post my own entry on the subject.

First off, full disclosure. My personal preference is toward appliances. That being said, I don’t consider myself an ESB basher, either. If there’s one takeaway from this that you get, it should be that the selection of a product for intermediary capabilities is going to be different for every organization, based upon their culture and who does what. I spoke with a colleague at a conference last June who had both an appliance and software-based broker, each performing distinct functions, largely due to organizational responsibilities. While both products could have provided all of the capabilities on their own, there were two different operational teams responsible for subsets of the capabilities.

Let’s start with the capabilities. This is the most important step, because as soon as you throw ESBs into the mix, the list of capabilities can become volatile. The capabilities I believe are the right area of concern are those that belong “in the middle.” The core principle guiding what belongs “in the middle” is that these are things developers shouldn’t be concerned about. Developers should be concerned about business logic. Developers should not have to code in security (in the Authentication/Authorization sense), routing, monitoring, or caching. These are things that should be externalized. J2EE and .NET try to do this, but they still make the policies part of the developer’s domain, either by bundling them into an archive file or by using annotations in source code. In a Web Services world, these capabilities can be completely externalized from the execution container. My list of capabilities includes:

- Routing

- Load Balancing

- Failover

- Monitoring and Metrics

- Caching

- Alerting

- Traffic Optimization

- Transport Mapping

- Standards Mediation (e.g. WS-Security 1.0 to WS-Security 1.1)

- Credential Mapping / Mediation

- Authorization

- Authentication

- Encryption

- Digital Signing

- Firewall

- Auditing

- Content-Based behavior, including version management

- Transformation (not Translation)

Note that one area commonly associated with ESBs these days is missing from my list: Orchestration. I don’t view orchestration as an “in the middle” capability. Orchestration does represent an externalization of process, just as we are externalizing these capabilities; however, an orchestration engine is an endpoint. It initiates new requests in response to events or incoming service requests. Adapter-based integration à la EAI is also not on my list. Many adapter cases fall into the category of translation, not transformation: there is business logic associated with translating between an incoming request and one or more requests that need to be directed at the system being “adapted.” The grey areas, where it is tougher to draw a line, include queueing via MOM and basic transformation capabilities.
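To make the “in the middle” idea concrete, here is a minimal sketch of a few of the listed capabilities (authentication, auditing, and content-based version routing) applied as a chain of intermediaries around providers that contain only business logic. All names, the token check, and the request shape are assumptions made for illustration; no particular ESB or appliance works exactly this way.

```python
from typing import Callable, Dict, List

Request = Dict[str, str]
Handler = Callable[[Request], str]
Intermediary = Callable[[Request, Handler], str]

audit_trail: List[str] = []


def authenticate(request: Request, next_handler: Handler) -> str:
    # Placeholder credential check; a real intermediary would validate a token.
    if request.get("credential") != "valid-token":
        return "401 rejected by intermediary"
    return next_handler(request)


def audit(request: Request, next_handler: Handler) -> str:
    audit_trail.append(request["operation"])
    return next_handler(request)


def route_by_version(providers: Dict[str, Handler]) -> Intermediary:
    """Content-based routing: pick a provider from the request's version field."""
    def intermediary(request: Request, next_handler: Handler) -> str:
        provider = providers.get(request.get("version", ""), next_handler)
        return provider(request)
    return intermediary


def _bind(intermediary: Intermediary, next_handler: Handler) -> Handler:
    return lambda request: intermediary(request, next_handler)


def mediate(intermediaries: List[Intermediary], provider: Handler) -> Handler:
    """Wrap a provider so each intermediary runs, in order, before it."""
    handler = provider
    for intermediary in reversed(intermediaries):
        handler = _bind(intermediary, handler)
    return handler


# Providers hold business logic only; none of the concerns above appear here.
def quote_v1(request: Request) -> str:
    return "quote v1"


def quote_v2(request: Request) -> str:
    return "quote v2"


endpoint = mediate(
    [authenticate, audit, route_by_version({"2": quote_v2})],
    quote_v1,
)
```

Note that nothing in the chain initiates new requests of its own, which is the distinction drawn above between an intermediary and an orchestration engine.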

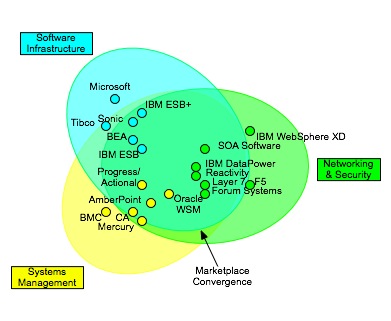

Now, if we take this list, you’ll see that it can be covered by products from three major areas: Networking and Security (normally appliances), Software Infrastructure (ESBs, Application Servers, EAI/BPM), and to a lesser extent, Systems Management (most management systems employ an agent based architecture. Most Web Services Management providers include gateways and agents that perform these). My own tracking and discussion with various vendors that deal in this space yields a diagram like this:

As you can see, these three areas overlap. There’s a convergence of capabilities in the middle, but not complete overlap. Also, note that this is more about the target space of the product versus the form factor. There are some surprises on the diagram. SOA Software’s Network Director (formerly BlueTitan) and perhaps IBM’s WebSphere XD are much more like network devices than software infrastructure, even though they are software based.

So how do you choose one of these providers? First off, I believe all of the products that control the capabilities I listed should be policy-driven, utilizing distributed enforcement points with centralized management. The notion of policy-driven is extremely important, because we need to externalize policy management from policy enforcement. Why? If you look at those capabilities, who sets the policies associated with them? It likely should be multiple groups. Information Security may handle authorization and authentication policies, Compliance may handle auditing, Operations will handle routing, and the development team may specify transformations in support of versioning. Right now, the management tools are all tightly coupled to the enforcement points. Therefore, if you choose an ESB for all capabilities, your Information Security team may need to use Eclipse to set policies, and they may have visibility into other policy domains. This could be a recipe for disaster. You need to match the tools to the groups that will be using them. Software infrastructure tools will likely be targeted at developers. Network and Security tools will likely be targeted toward network and security operations, and may be in an appliance form factor. Systems Management will also be operations focused, but may have a stronger focus on monitoring and a weaker focus on active enforcement. It may involve the management of agents. Think about who in your organization will be using these tools and whether it fits their way of working. When evaluating products, see how far they’ve gone in externalizing policy. Can a policy be reused across multiple services via reference? Can policies be defined independent of a service pipeline? Is there clear separation of the policy domains? Do you have to be a developer to configure policies?
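The evaluation questions above (reuse by reference, policies defined independently of a service, separation of policy domains) can be illustrated with a toy policy store. This is a sketch with invented names, not the model of any actual product: policies are defined once by the owning group, attached to services by name, and each group sees only its own domain.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Policy:
    name: str
    domain: str  # owning group, e.g. "security", "compliance", "operations"
    rule: str    # free-text stand-in for the real policy definition


class PolicyStore:
    """Hypothetical central policy management, separate from enforcement points."""

    def __init__(self) -> None:
        self._policies: Dict[str, Policy] = {}
        self._bindings: Dict[str, List[str]] = {}

    def define(self, team_domain: str, policy: Policy) -> None:
        # A group may only define or change policies in its own domain.
        if policy.domain != team_domain:
            raise PermissionError(f"{team_domain} cannot manage {policy.domain} policies")
        self._policies[policy.name] = policy

    def attach(self, service: str, policy_name: str) -> None:
        # Services reference a policy by name; the definition lives in one place.
        if policy_name not in self._policies:
            raise KeyError(policy_name)
        self._bindings.setdefault(service, []).append(policy_name)

    def policies_for(self, service: str) -> List[Policy]:
        return [self._policies[name] for name in self._bindings.get(service, [])]

    def visible_to(self, team_domain: str) -> List[str]:
        return sorted(p.name for p in self._policies.values() if p.domain == team_domain)


store = PolicyStore()
store.define("security", Policy("require-saml", "security", "authenticate with SAML"))
store.define("compliance", Policy("audit-all", "compliance", "log every request"))
for service in ("orders", "billing"):
    store.attach(service, "require-saml")
    store.attach(service, "audit-all")
```

Because services hold only references, redefining `require-saml` once changes what both `orders` and `billing` enforce, and the security group never needs visibility into the compliance domain to do it.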

In short, I think if you nail down the capabilities, eliminating the grey area in integration and transformation, and have a clear idea of who in the organization will use the system, the product space should be much easier to navigate, for now. This convergence will continue, as smaller vendors get gobbled up and registry/repository becomes a more integrated part of the solution as a policy repository. It will begin to converge with the Configuration Management Database space as I’ve previously discussed. Network devices continue to converge with MPLS and the addition of VoIP capabilities. If you have an intermediary today, revisit the space every 6 months to a year to see what’s happening and know when it might make sense to converge.

SOA and EA


Greetings from the Shared Insights EA Conference. I just finished attending a case study given by Eric Carsten of Ford Motor Company. It was an excellent presentation in two ways. First, the concerns he outlined that their EA organization was trying to address certainly hit home with my own experiences within a large enterprise: technology consolidation and everything that goes along with it. He had a great item when he showed five business goals, and how those same goals could be applied to the technology domain. This was great; however, don’t misinterpret this as business/IT alignment. For example (hypothetical, Eric didn’t say this), a goal of Ford could be consolidation. Applied to the business, this would be a reduction in the number of models being sold. Applied to IT, this would be a reduction in the number of technology vendors being used. While both will contribute to lowering costs, it would be difficult to directly show how a reduction in the technology platforms leads to a reduction in the models being produced.

This leads to the second thing that I found interesting. Ford’s EA organization, at least what I inferred from this one presentation, is very technology focused. It is involved with governing project technology decisions. That is, it is focused on the “how” to do things, rather than the “what.” This poses a problem if SOA is expected to be driven from the EA organization. If the EA organization is too focused on the how, it will help out in selecting service technologies but it won’t help on what services to build. I think moving from the how to the what is a natural evolution for an EA group, but it’s a big, big challenge. Technology selection is inherently part of EA, akin to cleaning your own house. Choosing what solutions to build on that technology is a business decision. This means that the EA organization must be involved closely with the business in order to pull it off. If they aren’t, what organization will drive the SOA effort? Perhaps that’s an indication that the organization isn’t ready for it yet. Once the EA organization has been invited to the portfolio planning/IT governance table, their chances of success will likely increase. I think I’ll ask this question now of the panel discussion that I’m sitting in…

Policy Management Domains


Robin Mulkers recently published a post titled “Transformation in a SOA.” He provided three options for providing transformation services:

1. A central message or transaction broker ESB platform is using connectors to access the various back-end systems and mediates between the service consumers and the service providers. The service consumers don’t see the mediation, they see a semantically coherent service offered by the mediation platform. It’s an EAI like architecture.

2. Externalize the transformation as a service, that’s the solution described by Zapthink in their paper. In this scenario, it is up to the service provider or the service consumer to detect an unsupported message format and know which transformation service to use to transform that unsupported format into something understandable.

3. It’s up to the service provider to implement the transformation as part of the service.

The options were a bit confusing, in that what the first one was really saying was the use of a centralized broker that was the responsibility of some centralized team, and not the service provider. If a centralized team handled this, that team “would have to be aware of all the legacy messages and schemas used throughout the enterprise,” as stated by Robin. He continues on to emphasize that the service provider is responsible for exposing various interfaces to the service functionality, using whatever infrastructure might be appropriate.

Let’s think about this for a second, however. This is where problems can arise. Often, a group will choose technology based upon familiarity. In this scenario, the team providing the service is likely composed of developers. What’s the tool they can directly use? Their code. If an organization has some form of intelligent intermediary (XML appliance, Web Service Intermediary, ESB, etc.), the developer may not have access to the management console to properly leverage the transformation capabilities of the device. Note that this problem goes beyond the developer, however. What are some of the other core capabilities of an intermediary? Security. For a number of reasons, many organizations want to centralize the management of security policies within the organization. I’m sure the security group doesn’t have access to either the code or the intermediary console. What about routing policies? That’s probably under the domain of an infrastructure operations group. What about audit logs? The compliance group may want to control these.

So, now the situation is that I have at least four different policy domains, each managed by different areas of the organization, all capable of being enforced by specialized infrastructure. Do I let these groups use the tools they have access to, even though those tools may take far longer to get policy changes out into the enterprise (e.g. a development cycle for transformations coded in the service implementation), limiting the agility of the organization? If I want to leverage the specialized infrastructure instead, I’m in a bind, as the console probably provides access to the entire policy pipeline for a service, putting my environment at risk.

What we need is an externalized policy manager, complete with role-specific access to particular policy domains. So who’s the vendor that’s going to come up with this? Unfortunately, policy managers are tightly coupled to policy enforcement points, because there are very few standard policy languages that could allow a third party manager. Security is the policy domain most likely to get there, but even in the broader web access management space, how many policy managers can control heterogeneous enforcement points? It’s more likely that the policy managers require proprietary policy enforcement agents on those enforcement points. So, how about it, vendors? Who’s going to step up to the plate and drive this solution home?
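As a rough illustration of what I mean by role-specific access, here is a small sketch. The `PolicyManager` class, the role names, and the grants are all assumptions made up for this example; no product I know of exposes this exact interface. Each group can only edit, and only see, the policy domains it has been granted.

```python
class PolicyManager:
    """Hypothetical manager that scopes each role to its own policy domains."""

    def __init__(self, grants):
        self._grants = grants    # role -> set of domains that role may manage
        self._policies = {}      # (domain, name) -> settings

    def set_policy(self, role, domain, name, settings):
        if domain not in self._grants.get(role, set()):
            raise PermissionError(f"{role} may not edit {domain} policies")
        self._policies[(domain, name)] = dict(settings)

    def visible_to(self, role):
        # A role sees only the domains it manages, not the whole pipeline.
        allowed = self._grants.get(role, set())
        return {key: value for key, value in self._policies.items()
                if key[0] in allowed}


manager = PolicyManager({
    "infosec": {"security"},
    "compliance": {"audit"},
    "operations": {"routing"},
    "developers": {"transformation"},
})

manager.set_policy("infosec", "security", "orders-authz", {"require_role": "clerk"})
manager.set_policy("developers", "transformation", "orders-v1-to-v2", {"stylesheet": "v1_to_v2.xsl"})

try:
    manager.set_policy("developers", "security", "orders-authz", {"require_role": "anyone"})
    blocked = False
except PermissionError:
    blocked = True
```

The hard part, of course, is not the access check. It is getting the manager to push those policies to enforcement points from different vendors, which is where the lack of standard policy languages bites.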

Writing on water…


While I can’t think of any practical applications right now, this is just cool. Anyone want to go watch The Abyss?

Infrastructure Services


A favorite topic of mine, SOA for IT, has come back up with a blog post from Robin Mulkers at ITtoolbox. He attended the Burton Group’s Catalyst conference in Barcelona and heard Anne Thomas Manes talk about her Infrastructure Services Model.

Robin states:

Instead of Account management and order processing, think of services like authentication, auditing, integration, content management, etc. etc.

Security is a great example. If you’re a large enterprise, there’s a good chance that you’ve adopted some Identity and Web Access Management infrastructure from Oracle, CA-Netegrity, IBM or the like. Typical usage involves installing some agent in a reverse proxy or application server (policy enforcement point or PEP) which intercepts requests, obtains security tokens and contextual information about the request, and then communicates with a server (policy decision point or PDP) to get a yes/no answer on whether the request can be processed. Today, this communication between the PEP and the PDP is all proprietary. Vendors license toolkits to other providers, such as XML appliance and Web Service Management products, to allow them to talk to the PDP. In this scenario, the PDP is providing the security services. Why does this need to be proprietary? Is there really any competitive difference between the players in this space? Probably not. If there were standard interfaces for communicating with an authorization service, one PDP could easily be exchanged for a new PDP.
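Here is a minimal sketch of what a standard authorization interface would buy you. The `PolicyDecisionPoint` interface and both implementations are hypothetical, invented for this example and not modeled on any vendor's toolkit. The point is only that the enforcement point is written against the interface, so the decision point behind it can be swapped.

```python
from typing import Protocol


class PolicyDecisionPoint(Protocol):
    """Assumed standard interface: a yes/no answer for a request."""

    def is_permitted(self, subject: str, action: str, resource: str) -> bool:
        ...


class RuleTablePDP:
    """Hypothetical PDP backed by an explicit table of allowed requests."""

    def __init__(self, rules):
        self._rules = set(rules)

    def is_permitted(self, subject, action, resource):
        return (subject, action, resource) in self._rules


class DenyAllPDP:
    """Hypothetical replacement PDP, e.g. used during a lockdown."""

    def is_permitted(self, subject, action, resource):
        return False


class PolicyEnforcementPoint:
    """Intercepts a request and asks whichever PDP it was given."""

    def __init__(self, pdp: PolicyDecisionPoint):
        self._pdp = pdp

    def handle(self, subject, action, resource):
        if not self._pdp.is_permitted(subject, action, resource):
            return 403  # forbidden
        return 200      # request may proceed to the service


pep = PolicyEnforcementPoint(RuleTablePDP({("alice", "GET", "/orders")}))
locked_down = PolicyEnforcementPoint(DenyAllPDP())
```

Nothing in `PolicyEnforcementPoint` changes when the PDP is exchanged, which is the property the proprietary PEP-to-PDP protocols take away today.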

One challenge in the infrastructure space, however, is understanding the difference between implied capabilities and services. Take routing, for example. In a typical HTTP request, routing is implied. The actual service request is to GET/POST/PUT/DELETE a resource. It’s not to route a message, other than through the specification of a URI. It’s likely that the URI used can represent one of many web/application servers, the exact one determined by a routing service provided by the networking infrastructure or clustering technology. In this case, routing is an implied capability, not an explicit service. The security example is an implied capability from the point of view of the service consumer; however, the communication between a PEP and a PDP is an explicit service request.
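A toy sketch of routing as an implied capability, under my own assumptions: the `LogicalEndpoint` class, the URI, and the backend names are hypothetical, and round-robin simply stands in for whatever the network or cluster really does. The consumer asks for a resource at one URI and never requests routing.

```python
from itertools import cycle


class LogicalEndpoint:
    """One URI for consumers, several hypothetical servers behind it."""

    def __init__(self, uri, backends):
        self.uri = uri
        self._backends = cycle(backends)  # simple round-robin selection

    def get(self, path):
        # The consumer asked to GET a resource; picking a server is implied.
        backend = next(self._backends)
        return f"{backend} served GET {self.uri}{path}"


endpoint = LogicalEndpoint("http://orders.example", ["app1", "app2"])
first = endpoint.get("/orders/1")
second = endpoint.get("/orders/1")
third = endpoint.get("/orders/1")
```

The caller's code is identical for all three requests even though different servers answer them. Compare that with the PEP calling the PDP, where the request for a decision is explicit.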

In applying SOA to IT, it’s important to identify when a capability should be implied and when it should be explicitly exposed. In some cases it may be one or the other; in other cases it will be both. The most important services in SOA for IT, in my opinion, are the management services. In both the security and the routing examples, the decisions made are based upon policies configured in the infrastructure through some management console or management API/scripting capability. Wouldn’t it be great if all of the capabilities in the management console were available as standards-based services? Automation would be far easier. This is a daunting challenge, however, as there are no vertical standards for this. We have horizontal standards like JMX and WS-DistributedManagement, but there are few standards for the actual things being managed. Having a Web Service for deploying applications is good, but odds are that the services for JBoss, WebLogic, and WebSphere will have significant semantic differences.

Infrastructure services aren’t going to happen overnight, but it’s time for IT Operations Managers to begin pushing the vendors for these capabilities. The time is ripe for some vendors in the management space to switch from management of Web Services à la Sonic/Actional and Amberpoint to management using Web Services. In the absence of standards, it would be great if some of the big systems management players decided to create a standards-based insulation layer and present a set of services that could be used with a variety of infrastructure products, allowing IT Operations to leverage automation and workflow infrastructure to improve their efficiency.
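To sketch what such an insulation layer might look like for the deployment example: the two "vendor consoles" below are hypothetical stand-ins with deliberately different semantics (they are not the real JBoss, WebLogic, or WebSphere APIs), and `DeploymentService` is an interface I made up for illustration. Automation is written once against the common interface, and an adapter per product absorbs the differences.

```python
from abc import ABC, abstractmethod


class VendorAConsole:
    """Hypothetical product: installs an archive on a named cluster in one call."""

    def install_archive(self, archive_path, cluster):
        return f"A:{cluster}:{archive_path}"


class VendorBConsole:
    """Hypothetical product: upload and activation are separate steps."""

    def upload(self, archive_path):
        return f"staged:{archive_path}"

    def activate(self, staged_id, targets):
        return f"B:{','.join(targets)}:{staged_id}"


class DeploymentService(ABC):
    """Assumed common interface presented by the insulation layer."""

    @abstractmethod
    def deploy(self, archive_path, target):
        ...


class VendorADeployment(DeploymentService):
    def __init__(self, console):
        self._console = console

    def deploy(self, archive_path, target):
        return self._console.install_archive(archive_path, cluster=target)


class VendorBDeployment(DeploymentService):
    def __init__(self, console):
        self._console = console

    def deploy(self, archive_path, target):
        # The adapter hides the two-step semantics from the caller.
        staged = self._console.upload(archive_path)
        return self._console.activate(staged, [target])


def roll_out(services, archive_path, target):
    """Automation written once, against the common interface only."""
    return [service.deploy(archive_path, target) for service in services]


results = roll_out(
    [VendorADeployment(VendorAConsole()), VendorBDeployment(VendorBConsole())],
    "orders.war",
    "prod",
)
```

The adapters are where the semantic differences I mentioned end up living. A standard would make them unnecessary; an insulation layer just moves them out of every workflow and into one place.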