Archive for the ‘Infrastructure’ Category

EDA begins with events

Joe McKendrick asks, “Is EDA the ‘new’ SOA?” First, I’ll agree with Brenda Michelson that EDA is an architecture that can effectively work in conjunction with SOA. While others out there view EDA as part of SOA, I think a better way of viewing it would be that services and events must both be part of your technology architecture.

The point I really want to make, however, which expands on my previous post, is that I think event-oriented thinking is the exception rather than the norm for most businesses. I’m not speaking about events in the technical sense, but rather in the business sense. What businesses are truly event driven, requiring rapid response to change? Certainly, the airlines are, as evidenced by JetBlue’s recent difficulties. There are some financial trading sectors that must operate in real time, as well. What about your average retail-focused company, however? Retail thinking seems to be all about service-based thinking. While you may do some cold calls, sales largely happen when someone walks into the store, goes to the website, or calls on the phone. It’s a service-based approach. They ask, you sell. What are the events that should be monitored that would trigger a change in the business? For companies that are not inherently event-driven, the appropriate use of events is for collecting information and spotting trends. Online shopping can be far more informative for a company than brick-and-mortar shopping because you’ve got the clickstream trail. Even if I don’t buy something, the company knows what I entered in the search box, and what products I looked at. If I walk into Home Depot and don’t purchase anything, is there any record of why I came into the store that day?

Again, how do we begin to move in the direction of EDA? Let’s look at an event-driven system. The October 2006 issue of Business 2.0 had a feature on Steve Sanghi, CEO of Microchip Technology. The article describes how he turned around Microchip by focusing on commodity processors. As an example, the article states that Intel’s automotive-chip division was pushing for “a single microprocessor near the engine block to control the vehicle’s subsystems and accessories.” Microchip’s approach was “to sprinkle simpler, cheaper, lower-power chips throughout the vehicle.” Guess what? Today’s cars have about 30 microcontrollers.

So, what this says is that the appropriate event-based architecture is to have many smaller points of control that can emit information about the overall system. This is the way that many systems management products work today; think SNMP. To be appropriate for the business, however, this approach needs to be generating events at the business level. Look at the applications in your enterprise’s portfolio and see how many of them actually publish any sort of data on how they are being used, even if it’s not in real time. We need to begin instrumenting our systems and exposing this information for other purposes. Most applications are like the checkout counter at Home Depot. If I buy something, it records it. If I don’t buy something and just exit the store, what valuable information has been missed that could improve things the next time I visit?
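As a minimal sketch of what instrumenting an application to publish business-level events might look like, consider the following Python. Everything in it is an assumption made for illustration: the `BusinessEvent` and `EventBus` names, the event names such as `product.viewed`, and the in-process bus, which stands in for whatever messaging infrastructure an enterprise actually uses. The only subscriber simply counts events, which is about as far as a first step needs to go.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class BusinessEvent:
    """A business-level fact, as opposed to a technical log line."""
    name: str          # hypothetical event name, e.g. "product.viewed"
    attributes: dict   # free-form business details about the event
    occurred_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class EventBus:
    """Tiny in-process publish/subscribe hub (stand-in for real messaging)."""

    def __init__(self) -> None:
        self._subscribers: list[Callable[[BusinessEvent], None]] = []

    def subscribe(self, handler: Callable[[BusinessEvent], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, event: BusinessEvent) -> None:
        # Every subscriber sees every event; the publisher does not
        # know or care who is listening.
        for handler in self._subscribers:
            handler(event)


bus = EventBus()
event_counts: Counter = Counter()

# The first subscriber only collects statistics.
bus.subscribe(lambda event: event_counts.update([event.name]))

# A visit that ends without a purchase still leaves a trail.
bus.publish(BusinessEvent("store.search", {"query": "cordless drill"}))
bus.publish(BusinessEvent("product.viewed", {"sku": "DRILL-18V"}))
bus.publish(BusinessEvent("visit.ended", {"purchased": False}))
```

The checkout counter would have recorded nothing for this visit, while `event_counts` now holds three entries that can be analyzed later.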

I’d love to see events become more mainstream, and I fully believe it will happen. I certainly won’t argue with the claim that event-driven systems can be more loosely coupled. However, I’ll also add that the events we’re talking about then are not necessarily the same thing as business events. Many of those things will never be exposed outside of IT, nor should they be. It’s the proper application of business events that will drive companies to open up their wallets and purchase new infrastructure built around that concept.

More consolidation: Cisco to acquire Reactivity

The consolidation in the vendor space continues. From tmcnet.com:

Cisco Systems Inc., a leading supplier of Internet network equipment, has announced its intention to acquire Reactivity Inc., a provider of gateway solutions that simplify web services management. The agreement, which is subject to the standard closing conditions, states that Cisco will pay $135 million and assumed options of Reactivity.

Jeff will need to update his scorecard. This certainly puts two big boys head-to-head in this space with IBM previously having acquired DataPower. Cisco has been very quiet for some time regarding its AON technology, so I’m wondering how this acquisition impacts that effort.

The more important question, however, is what this means for the bigger picture. I previously posted on the need for an open, integrated world between service management, service connectivity, and service hosting. Unfortunately, these acquisitions may point more toward single-vendor, proprietary integrations, rather than open solutions. Will the Cisco version of Reactivity still partner with companies like AmberPoint, or will the management focus now shift all to Cisco technologies, starting with the Application Control Engine mentioned in Cisco’s press release? What’s been happening with the Governance Interoperability Framework now that HP owns Systinet? IBM’s Registry/Repository solution doesn’t support UDDI and has a new API.

While bigger fish eating the smaller fish is the way things frequently work with technology startups, these players need to keep in mind the principles of SOA. Their products should be providing services to the enterprise, and those services should be accessible in an open, standards-based way. Just as the business doesn’t want a bunch of packaged business applications that require redundant data and can’t talk to each other, IT doesn’t want a bunch of infrastructure that requires redundant management capabilities with only proprietary APIs to work with. We need SOA for IT, and any vendor selling in this space should be making that a reality, not making it worse.

Uptake of Complex Event Processing (CEP)

I’m seeing more and more articles about complex event processing (CEP) these days. If you’ve followed my blog, you’ll know that I’m a big fan of events, so I try to read these when they come across my news reader. One of the challenges I see, however, is that event-driven thinking is not necessarily the norm for businesses. Yes, insurance companies may deal with disaster events, and financial services companies may deal with “life” events like weddings, births, and kids going to college, but largely, the view is very service-based. It is reactive in nature. You ask me for something, and I give it to you.

This poses a challenge for event processing to gain mindshare. While event processing is certainly the norm in user interface processing and embedded systems, it’s not in your typical business IT. Ask yourself: if you were to install a CEP system in your enterprise today, what events would it see?

The starting point that I see for events should merely be publication. Forget about doing anything but collecting statistics at the beginning. Since events don’t align with how we’re normally thinking, perhaps we should let them show us how we should be thinking. This gets into the domain of business intelligence. The beauty of events, however, is that they can make the intent explicit, rather than implicit. If I’m only performing analysis based on database changes, am I seeing the right thing, or am I only seeing symptoms of the event? Not all events may result in a database change, and that’s where the important correlations may lie. If some company shows up on page one of the Wall Street Journal, it could result in increased trading activity for that company. My databases may record the increased trading, but I may not have a record of the triggering event: the news story.

Humans are very good at inferring relationships between events, sometimes better than we think. But without any events, how can we infer any relationships? We don’t want to overwhelm the network with XML messages that no one ever looks at, but we shouldn’t be at the opposite extreme either. Starting with new applications, I’d make sure that those systems are publishing some key events based upon their functionality. Now, we can start doing some analysis and looking for correlations. We then start to encourage event-driven thinking about the business, and as a result, have now created the potential for CEP systems to be used appropriately.

As an example of how far we still have to go, let’s look at Amazon. They certainly leverage business intelligence extremely well. Unfortunately, as far as I can tell, it’s largely based upon tracking the event that impacts their bottom line directly: purchasing. If I were them, I’d be looking at wish list activity much more strongly. Interestingly, my wife gets more recommendations on technical books than I do. Why? Because she’s purchasing them as gifts for me. I put them on my wish list, she buys them. Because they’re looking at the wrong event, they now make an inference that she’s interested in them when she isn’t. I am. They need to track the event of me adding it to my wish list, along with someone purchasing it for me, and then turn around and make recommendations back to me. Of course, that’s part of the challenge, though. There is simply a ton of information that you could collect, and if you collect the wrong stuff, you can waste a lot of time. Start with a small set of information that you know is important to your business and build out from there.

The Scope of SOA Adoption

I just finished giving a webinar on the importance of SOA pilots with Alex Rosen, and I hope the attendees found it informative. One of the things that I discussed in the webinar was the scope of SOA adoption. Given the recent attention to my last post, I thought I’d discuss it a bit more, since it’s one of the two dimensions of the maturity matrix. It’s also what makes the effort more than just a “search and replace” on the SEI CMMI models as one commenter over on InfoWorld thought.

The last post introduced the levels of maturity, which are:

- Ad Hoc

- Common Goals

- Pilot

- Extend

- Standardize

- Optimize

Those levels are a pretty straightforward way of describing the maturation process of just about anything. So what’s really important is the other dimension, which defines exactly what we’re maturing.

In the case of this model, we’re discussing SOA adoption maturity. SOA adoption is not simply about purchasing technology. No one can sell you an SOA, although there was someone selling “SOA in a box” back around Christmas on eBay in Australia. SOA adoption does involve new technologies that can provide support in service development and hosting, such as orchestration engines or web service frameworks; service connectivity, such as SOA appliances or ESBs; and service management. SOA adoption also involves organizational changes. If your organization is structured around application development, which team is responsible for building a service that spans multiple groups? SOA adoption involves governance, whether it be funding models, design time policies, or run time policies. SOA adoption involves new processes designed around the consumer/provider interaction. SOA adoption involves training and communication. How do we market services that have been created to ensure their reuse? Clearly, SOA adoption involves architecture. Enterprise architecture must provide appropriate reference architectures and reviews to ensure both tactical and strategic success. SOA adoption involves operational management. Services can’t be dumped into production and forgotten. We must take a proactive approach to monitoring and metric collection and feed that information back into the machine for continuous improvement.

SOA is not easy. If it were, we’d all be done by now. Every company will have different drivers and different technology needs. An assessment of their maturity in SOA adoption should examine all of the dimensions required.

SOA Maturity Model

David Linthicum recently re-posted his view on SOA maturity levels and I wanted to offer up an alternative view, as I’ve recently been digging into this subject for MomentumSI.

Interestingly, Dave calls out a common pattern: other models he’s seen define their levels around components and not degrees of maturity. He states:

While components are important, a maturity model is much more important, considering that products will change over time…

I completely agree on this. Maturity is not about what technologies you use, it’s about using them in the right way. Comparing this to our own maturity, just because you’re old enough to drive a car, doesn’t mean you’re mature. Just because you’ve purchased an ESB, built a web service, or deployed a registry doesn’t mean you’re mature.

Dave then presents his levels. I’ve cut and pasted the first sentence that describes each level here.

- Level 0 SOAs are SOAs that simply send SOAP messages from system to system.

- Level 1 SOAs are SOAs that also leverage everything in Level 0 but add the notion of a messaging/queuing system.

- Level 2 SOAs are SOAs that leverage everything in Level 1, and add the element of transformation and routing.

- Level 3 SOAs are SOAs that leverage everything in Level 2, adding a common directory service.

- Level 4 SOAs are SOAs that leverage everything in Level 3, adding the notion of brokering and managing true services.

- Level 5 SOAs are SOAs that leverage everything in Level 4, adding the notion of orchestration.

While these levels may be an accurate portrayal of how many organizations leverage technology over time, I don’t see how they are an indicator of maturity, because there’s nothing that deals with the ability of the organization to leverage these things properly. Furthermore, not all organizations may proceed through these levels in the order presented by Dave. The easiest one to call out is level 5: orchestration. Many organizations that are trying to automate processes are leveraging orchestration engines. They may not have a common directory yet, they may have no need for content based routing, and they may not have a service management platform. You could certainly argue that they should have these things in place before leveraging orchestration, but the fact is, there are many paths that lead to technology adoption, and you can’t point to any particular path and say that is the only “right” way.

The first difference between my efforts on the MomentumSI model and Dave’s levels is that my view is targeted around SOA adoption. Dave’s model is a SOA Maturity Model, and there is a difference between that and a SOA Adoption Maturity Model. That being said, I think SOA adoption is the right area to be assessing maturity. To get some ideas, I first looked to other areas, such as CMMI and COBIT. If we look at just the names of the CMMI and COBIT levels, we have the following:

| Level | CMMI | COBIT |
|---|---|---|
| 0 | | Non-Existent |
| 1 | Initial | Initial |
| 2 | Managed | Repeatable |
| 3 | Defined | Defined |
| 4 | Quantitatively Managed | Managed |
| 5 | Optimizing | Optimized |

So how does this apply to SOA adoption? Quite well, actually. COBIT defines a level 0, and labels it as “non-existent.” When applied to SOA adoption, what we’re saying is that there is no enterprise commitment to SOA. There may be projects out there building services, but the entire effort is ad hoc. At level 1, both CMMI and COBIT label it as “Initial.” Again, applied to SOA adoption this means that the organization is in the planning stage. They are learning what SOA is and establishing goals for the enterprise. Simply put, they need to document an answer to the question “Why SOA?” At level 2, CMMI uses “Managed” and COBIT uses “Repeatable.” At this level, I’m going to side with CMMI. Once goals have been established, you need to start the journey. The focus here is on your pilot efforts. Pilots have tight controls to ensure their success.

Level 3 is labeled as “Defined” by both CMMI and COBIT. When viewed from an SOA adoption effort, it means that the processes associated with SOA, whether it be the interactions required, or choosing which technologies to use where, have been documented and the effort is now underway to extend this to a broader audience. Level 4 is labeled as “Quantitatively Managed” by CMMI and “Managed” by COBIT. If you dig into the description on both of these, what you’ll find is that Level 4 is where the desired behavior is innate. You don’t need to handhold everyone to get things to come out the way you like. Standards and processes have been put in place, and people adhere to them. Level 5, as labeled by CMMI and COBIT, is all about optimization. The truly mature organizations don’t set the processes, put them in place, and then go on to something else. They recognize that things change over time, and are constantly monitoring, managing, and improving.

So, in summary, the maturity levels I see for SOA Adoption are:

- Ad hoc: People are doing whatever they want, no enterprise commitment.

- Common goals: Commitment has been established, goals have been set.

- Pilot: Initial efforts are underway with tight controls to ensure success.

- Extend: Broaden the efforts to the enterprise. As the effort expands beyond the tightly controlled pilots, methodology and governance become even more critical.

- Standardize: Processes are innate, the organization can now be considered a service-oriented enterprise.

- Optimize: Continued improvement of all aspects of SOA.

You’ll note that there’s no mention of technologies anywhere in there. That’s because technology is just one aspect of it. Other aspects include your organization, governance, operational management, communications, training, and enterprise architecture. SOA adoption is a multi-dimensional effort, and it’s important to recognize that from the beginning. I find that the maturity model is a great way of assessing where an organization is, as well as providing a framework for measuring continued growth. That being said, your ability to assess it is only as good as your model.
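One way to picture the two dimensions together is as a small data structure: the six levels on one axis and the aspects of adoption on the other. The Python sketch below is only an illustration. The level and dimension names come from this post, but the `summarize` function and its "weakest dimension sets the overall level" rule are assumptions made for the example, not part of the MomentumSI model.

```python
from enum import IntEnum


class Maturity(IntEnum):
    """The six SOA adoption maturity levels, in order."""
    AD_HOC = 0
    COMMON_GOALS = 1
    PILOT = 2
    EXTEND = 3
    STANDARDIZE = 4
    OPTIMIZE = 5


# The aspects of SOA adoption named in this post.
DIMENSIONS = (
    "technology",
    "organization",
    "governance",
    "operational management",
    "communications",
    "training",
    "enterprise architecture",
)


def summarize(assessment: dict[str, Maturity]) -> tuple[Maturity, list[str]]:
    """Return the lowest level reached and the dimensions stuck at it.

    Treating the weakest dimension as the overall level is an
    illustrative assumption, not a rule from the model itself.
    """
    missing = [d for d in DIMENSIONS if d not in assessment]
    if missing:
        raise ValueError(f"unassessed dimensions: {missing}")
    overall = min(assessment.values())
    lagging = sorted(d for d, level in assessment.items() if level == overall)
    return overall, lagging


# Hypothetical organization: strong on technology, weak on governance.
assessment = {d: Maturity.PILOT for d in DIMENSIONS}
assessment["technology"] = Maturity.STANDARDIZE
assessment["governance"] = Maturity.AD_HOC

overall, lagging = summarize(assessment)
```

Here `overall` is `Maturity.AD_HOC` and `lagging` is `["governance"]`: buying and standardizing technology has not made this hypothetical organization mature.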

Integration, not convergence

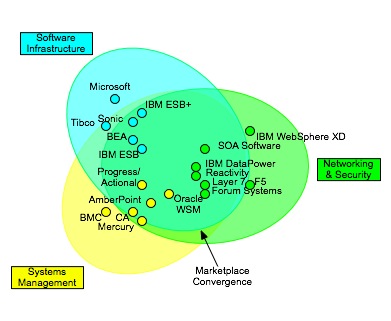

I recently had the opportunity to discuss the positioning of SOA appliances and it caused me to revisit my convergence model, as shown here:

This diagram was intended to show the challenges that enterprises face in choosing vendors today, as there are solutions from multiple product spaces. The capabilities frequently associated with activities “in the middle” can come from network appliances, application servers, ESBs, service management systems, etc. My original post talked about the challenges that organizations face in trying to pick a solution. A key factor that must be weighed is the roles in the organization. My view is that the activities in the middle should be configured, not coded. That’s a topic for another post, however.

I started thinking about the future state, and realized that while the offerings from vendors overlap today, that shouldn’t be the long term trend. Even vendors that can cover the entire space do it with multiple products. The right model that we should be shooting for is one that looks like this:

In this model, we have four distinct components. Service hosting is concerned with the development and execution of services. Service connectivity consists of the capabilities in the middle: routing, mediation, etc. Service management provides management facilities over both of these domains. All of these systems rely on a set of information resources which provide both the information to process, as well as the policy and meta-information required for the appropriate execution of the systems. A registry/repository, therefore, would fall into the information resource domain.

What we’d like is for all of these domains to be integrated in an open, standards-based manner. Unfortunately, we’re still a ways off from that day. There have been some proprietary efforts to create integrated solutions that look like this, such as the Governance Interoperability Framework effort by Systinet, but there’s still a long way to go. None of the vendors associated with GIF are the big players in the service hosting space (IBM, BEA, Oracle, Microsoft, etc.), and the integration standards are not open. Once we have open integration standards, we can begin to create the feedback loop.

One short-term issue that needs to improve is the tight coupling of management consoles to the platforms. In the model, service management is loosely coupled. It integrates with the other domains through loosely coupled services, following all of the best practices of SOA. Today, service hosting platforms and service connectivity systems all come with their own management consoles. In order to enable this model, the management architecture of those systems must be built on SOA principles. That means that all of the capabilities that can be managed should be exposed as services. You want to deploy a new application to the application server? Call the application deployment service. This creates a great situation for automated build systems. Out of the box, the build process could be executed in your favorite BPEL engine, with controls for compilation, automated testing, source code tagging, and deployment all orchestrated through web service interactions. Now add in a feedback loop by which metrics cause additional provisioning, or even where an uncaught exception results in a tag being placed on the source files associated with the stack trace to aid in debugging. It all begins with having the services available. Ask your vendors one simple question: are all the capabilities available through the management console also available as services?
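A rough sketch of both ideas, the vendor question and the orchestrated build, might look like the following Python. It is not based on any vendor's actual management API. Plain functions stand in for web service operations, a simple loop stands in for the BPEL engine, and every capability and operation name is hypothetical.

```python
from typing import Callable

# Each management operation takes an artifact name and reports success.
# In a real deployment these would be web service calls (assumption).
ManagementOp = Callable[[str], bool]


def missing_services(console_capabilities: set[str],
                     service_operations: set[str]) -> set[str]:
    """Capabilities reachable only through the console, not as services."""
    return console_capabilities - service_operations


def run_pipeline(steps: list[tuple[str, ManagementOp]],
                 artifact: str) -> list[str]:
    """Invoke each step in order, stopping at the first failure.

    Returns the names of the steps that completed successfully.
    """
    completed = []
    for name, operation in steps:
        if not operation(artifact):
            break
        completed.append(name)
    return completed


# Hypothetical stand-ins for the services a platform would expose.
def compile_source(artifact: str) -> bool:
    return True


def run_tests(artifact: str) -> bool:
    return True


def tag_source(artifact: str) -> bool:
    return True


def deploy(artifact: str) -> bool:
    # Pretend the application server only accepts .war archives.
    return artifact.endswith(".war")


steps = [
    ("compile", compile_source),
    ("test", run_tests),
    ("tag", tag_source),
    ("deploy", deploy),
]

completed = run_pipeline(steps, "orders.war")
gaps = missing_services({"deploy", "restart", "view-logs"},
                        {"deploy", "restart"})
```

In this example `gaps` is `{"view-logs"}`, which is exactly the kind of answer the vendor question is meant to surface: a capability that exists in the console but cannot be orchestrated.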