Archive for the ‘Enterprise Architecture’ Category

More on Decision Rights

Nick Malik posted this response to my previous post on governance and decision rights. In it, Nick claims that what I posted was a workable set of decision rights, which I partially agree with. He made three comments on quotes from my post, and it is the third where I disagree. He stated:

“If we focus on creating policies” — And here really is the confusion. What are those policies called? They are called “decision rights.”

While a policy can be a statement of decision rights, such as “All solution architectures for projects costing more than $X must be approved by Enterprise Architecture,” policies don’t have to be, and I argue that the majority shouldn’t be. A policy like “All services must be entered into the registry/repository at the time they are identified” is not a decision right; rather, it is a statement of expected behavior. If followed (in conjunction with other policies), the expectation is that the goals will be achieved, such as reduced redundant implementations of business logic. If goals aren’t reached, you need to revisit policies and processes, or even the people involved.

Decision rights are certainly part of governance, but a view that makes them the defining part is wrong, in my opinion. If we focus too much on decision rights and not enough on decisions, we are at risk of creating fiefdoms of power that perpetuate the negative, command and control view of governance. If we focus on policies that enable anyone to make the correct decisions, I think that is a better position for success.

Shameless plug: Want to learn more on SOA Governance? Check out my book by the same name, available now for pre-order and generally available in late October 2008.

SOA Governance and Decision Rights

At the SOA Consortium meeting, Fill Bowen of IBM asked me a question after my soapbox on SOA Governance that I thought would make a great post. He was surprised that I didn’t mention the words “decision rights” a single time in my post. It’s a great question, especially because the IT Governance book from Jeanne Ross and Peter Weill of MIT’s CISR defines IT governance as “specifying the decision rights and accountability framework to encourage desirable behavior in the use of IT.”

In all of my posts on SOA Governance, and in my SOA Governance book, I don’t think I’ve talked about decision rights once. While this was certainly unintentional at first, I’ve now given it some thought and I’m happy that I didn’t include it or emphasize this viewpoint. The closest I’ve come is in my discussions around the people component, and stating that the people do need to be a recognized authority. Obviously, recognized authority does imply some amount of decision rights. At the same time, I think that’s emphasizing the wrong thing. There is a negative view around the term governance because people associate it with authorities flaunting their power. The emphasis needs to be moved away from the people, and instead focus on the policies. As I stated in my “Governance does not imply Command and Control” post, if you focus on education, you can allow individual teams to make decisions, because you’ve given them the necessary information to make the right decisions. If they don’t have the information to make decisions that will lead toward the desired behavior, it turns into a guessing game. Not only can there be confusion in what the correct decisions are, there can also be confusion on what decisions should be escalated up the chain. If we instead focus on creating policies and making those policies known so that anyone in the organization can make the right decision, we’re in a much better state. Yes, you will run into some situations with conflicting policies, or the absence of a policy, but if those are the exception, it should be far easier to know that those decisions require escalation or inclusion of others.

Governance is not Optional

In a post on his Fast Forward blog, Joe McKendrick asked the question, “Does Enterprise 2.0 Need to be Governed?” This title brought to mind a common misconception about governance: it is not an optional activity. While there may be people who aren’t aware of it, it’s there. Even in the smallest organization, governance is there; it’s just completely manageable because everyone can talk to everyone else and there’s a small number of goals that everyone is aware of. In a startup, there need to be desired behaviors and policies that guide the business strategy of the company. For example, what will be the balance between funding for marketing and funding for engineering? Both are needed, and failure of either one can doom the startup, yet competing startups will often take very different approaches.

In the writing of my SOA Governance book, the reviewers both asked about connections between SOA Governance and IT Governance, or even overall corporate governance. In my post about the four processes of governance, Rob Eamon asked why the processes couldn’t apply to architecture governance in general. The truth is, the fundamentals of governance (desired behavior, people, policies, and processes) apply regardless of the domain being governed. If you reach a point where there are enough variables involved with the efforts of your organization, whether that be the number of people employed, the number of projects executed, or many other factors, that there is now risk of people going off in a variety of directions due to unclear or competing priorities, you need governance. Anne Thomas Manes pointed this out in the context of REST in Joe’s post. From Joe’s post:

Anne Thomas Manes says a lot of REST advocates she speaks with feel that governance isn’t required for REST-based services. “At which point I respond saying, are you kidding? Think about how many people have created really, really bad POX applications that they claim to be rest and actually have almost no representation of the REST principles involved. They don’t follow any of the constraints, and they’re basically just tunneling RPCs to URLs.”

Put simply, we need to ensure that rather than just building things, we are building the right things, the right way. Good governance can make that happen. Poor governance results in teams doing whatever they deem important in their corner of the world, or more likely, whatever the easiest path is for them, by first focusing on things completely within their control.

As a side note, it was disappointing to see that ebizQ’s SOA Governance Panel didn’t include any practitioners. They had 2 analysts and 4 vendors. While I’m not campaigning for future panelist slots, and I know and respect all of the panelists, governance is one of those topics that shouldn’t be discussed without at least one practitioner, preferably a corporate practitioner. Some of the big federal consulting practices would also have a lot to offer, since they effectively are the corporate IT for the government agencies. There are plenty of ways to get analyst and vendor views on it; let’s hear from the people who deal with it on a day-to-day basis about what works and what doesn’t.

SOA Consortium Meeting: Jeanne Ross

I’m here at the SOA Consortium meeting in Orlando. The first speaker at the event was Jeanne Ross from the Center for Information Systems Research at the MIT Sloan School of Management. They had recently completed a study on SOA adoption, and she presented some of the findings to the consortium. If you’re not familiar with Jeanne Ross, I thoroughly recommend two of her books, IT Governance and Enterprise Architecture as Strategy. I continue to refer to some of the concepts I learned from these books as part of my day-to-day job.

One of the interesting takeaways I had from Jeanne’s presentation was the information on the value companies are getting out of reusing services. What the study found is that while there is definite, measurable value that comes out of reuse, in many cases that value comes at a very significant cost, a cost that often outweighs the value generated. This made me think about a discussion that I frequently have with people concerning “enterprise” services versus “non-enterprise” services. It has always been a black-or-white discussion: my stance is that we should assume a service has the potential to be an enterprise service unless told otherwise, while the stance of the person I’m speaking to is frequently the exact opposite. It turns out that neither of these positions is the right way.

If you took my old stance, the problem is that you incur costs associated with building and operating a service. If that service doesn’t get reused, you won’t recoup that cost. If you took the opposing stance, you avoid the initial cost, but then you’re at risk of incurring an even higher cost when someone wants to reuse it. To maximize the value that you get out of your services, we need to find something in the middle. There does need to be a fixed amount of cost that will get sunk into any service development effort, but it needs to be focused on answering the question of whether or not we should incur the full cost of making it an enterprise service with full service lifecycle management, or if we should simply focus on the project at hand and leave it at that. I’m going to give this topic more thought and try to determine what that fixed cost should be and hopefully have some future blog entries on the subject. In the meantime, if you have ideas on what you think it should be, please comment or trackback. I’d love to hear more on how others are approaching this.
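As a back-of-the-envelope illustration of that reasoning, here is a small Python sketch comparing the expected per-service cost of the three stances. All of the numbers are made up for illustration (they are not from the MIT study), and the “assess first” option assumes, optimistically, that the fixed up-front assessment correctly identifies which services will be reused.

```python
# Hypothetical cost units; none of these figures come from the study.
FULL_ENTERPRISE_COST = 100   # building with full service lifecycle management
RETROFIT_COST = 250          # making a project-only service reusable later
ASSESSMENT_COST = 10         # the fixed cost sunk into every service effort
REUSE_PROBABILITY = 0.25     # chance that a given service is ever reused


def always_enterprise() -> float:
    """My old stance: pay the full cost for every service."""
    return FULL_ENTERPRISE_COST


def never_enterprise() -> float:
    """The opposing stance: pay nothing up front, retrofit when reuse appears."""
    return REUSE_PROBABILITY * RETROFIT_COST


def assess_first() -> float:
    """The middle ground: a small fixed cost, then full cost only where warranted.

    Assumes the assessment is always right, which is a simplification.
    """
    return ASSESSMENT_COST + REUSE_PROBABILITY * FULL_ENTERPRISE_COST


for stance in (always_enterprise, never_enterprise, assess_first):
    print(f"{stance.__name__}: {stance()}")
```

With these particular numbers the middle option comes out cheapest, but that depends entirely on the assumed values. The useful part is the shape of the comparison: the fixed cost only pays off if it is small relative to what it saves.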

SOA Governance Book

I can finally let my secret out. For the past few months, I’ve been working on my first book, SOA Governance, and I’m now happy to say that it is available from my publisher, Packt Publishing. It can also be purchased from Amazon.

If you’ve followed this blog, you’ll know that my take on SOA governance is that it’s all about using people, policies, and process to achieve a desired behavior, and that same theme carries through the book, showing how it applies to all aspects of your SOA efforts, ranging from project governance, to run-time governance, and finally to what I call pre-project governance.

The style of the book is inspired by the great books of Patrick Lencioni, including Five Dysfunctions of a Team, Death by Meeting, and Silos, Politics and Turf Wars. In each, he presents a fable that illustrates the concepts and points. In my book, I present the story of a fictitious company, Advasco, and their journey in adopting SOA. Along the way, the book analyzes the actions of Advasco and the role of SOA governance in the journey.

Please check it out, and feel free to send me your feedback or questions. I hope it helps you in your SOA efforts.

Best of Breed or Best Fit?

I saw the press release from SoftwareAG that announced their “strategic OEM partnership” with Progress Software for their Actional products. While I’m not going to comment on that particular arrangement, I did want to comment on the challenge that we industry practitioners face when trying to leverage vendor technologies these days.

There has been a tremendous amount of consolidation in the SOA space. There’s also been a lot of consolidation in the Systems Management space, another area where I pay a lot of attention. Unfortunately, the challenge still comes down to an integration problem. The smaller companies may be able to be more nimble and add desired capabilities. This approach is commonly referred to as a “best of breed” approach, where you pick the product that is the best for the immediate needs in a somewhat narrow area. Eventually, you will need to integrate those systems into something larger. This is where a “best fit” approach sometimes comes into play. Here, the desire is to focus more on breadth of capability than on depth of capability.

The definition of what is appropriate breadth is always changing, which is why many of the “best fit” vendors have grown by acquisition rather than continued enhancements and additions to their own solutions. Unfortunately, this approach doesn’t necessarily make the integration challenges go away. Sometimes it only means that a vendor is well positioned to offer consulting services as part of their offering, rather than having to go through a third party systems integrator. It does mean that the customer has a “single throat to choke,” but I don’t know about you, I’d much rather have it all work and not have to choke anyone.

This recent announcement is yet another example of the relationships between vendors that can occur: OEM relationships, rebranding, partnerships, etc. Does it mean that we as end users get a more integrated product? I think the answer is a firm maybe.

The only way that makes sense to me is to always retain control of your architecture. It doesn’t do any good to ask the questions, “Does your product integrate with foobar?” or “How easy is it to integrate with such-and-such?” You need to know the specifics of where and how you want these systems to integrate, and then compare that to what the vendors have to say, whether it’s all within their own suite of branded products or involves partners and OEM agreements. The more specifics you have, the better. You may find that highly integrated suites are integrated in name only, or maybe you’ll find that the suite really does operate as a well-oiled machine. Perhaps you’ll see a small vendor that has worked their tail off to integrate seamlessly into a larger ecosystem, and perhaps you’ll find a small vendor that is best left as an island in the environment.

Then, after getting answers, go through a POC effort to actually prove it out and get your hands dirty (you execute the POC, not the vendor). There are many choices involved in integrating these systems, such as what the message schemas will be, and the mechanisms of the integration itself: are you integrating “at the glass” via cut and paste between applications? Are you integrating in the middle via service interactions in the business tier? Or are you integrating at the data layer, either through direct database access or through some data integration/MDM-like layer? Just those questions alone can cause significant differences in your architecture. The only way you’ll see what’s really involved with the integration effort is to sit down and try it out. Just do so in order: first define how you’d like it to work through a reference architecture, then question the vendors on how well they map to your reference architecture, and finally get your hands dirty in a POC and actually try to make it work as advertised in those discussions.

More on ITIL and SOA

In his “links” post, James McGovern was nice enough to call additional attention to my recent ITIL and SOA post, but as usual, James challenged me to add additional value. Here’s what he had to say:

Todd Biske provides insight into how ITIL can benefit SOA but misses an opportunity to provide even more value. While it is somewhat cliche to talk about continual process improvement, it would be highly valuable to outline what types of feedback do operations types observe that could benefit the software development side of the house.

I thought about this, and it actually came down to one word: measurement. You can’t improve what you’re not measuring. It’s debatable as to whether or not operations does any better than software development in measuring the service they provide, but operations is probably in a better position to do so. Why? There is less ambiguity about the service being provided. For example, a common service from operations in big IT shops is building servers. They can measure how many servers they’ve built, how quickly they’ve been built, and how correctly they’ve been built, among other things.

In the case of software development, is the service being provided software development, or is it the capability provided by the software? I’d argue that most shops are focused on the former. If you measure the “software development” service, you’ll probably measure whether the effort was completed on time and on budget. If, instead, you measure based on the capability provided by the software, it now becomes about the business value being provided by the software, which, in my opinion, is the more important factor. Taking this latter view also positions the organization for future modifications to the solutions. If my focus is solely on time and budget, why wouldn’t I disband the team when the project is done? The team has no vested interest in adding additional value. They may be challenged on some other project to improve their delivery time or budget accuracy, but there’s no connection to business value. Putting it very simply, what good does it do to deliver an application on time and on budget that no one uses?

So, back to the question: what can we learn from the ops side of the world? If ops has drunk the ITIL kool-aid, then they should be measuring their service performance, the goals for it should be reflected in the individual goals of the operations team, and it should be something that allows for improvement over time. If the measurement falls into the “one-time” measurement category, like delivering on-time and on-budget, that should be a dead giveaway that you may be measuring the wrong thing, or not taking a service-based view on your efforts.

ITIL and SOA

I’ve been involved in some discussions recently around the topic of ITIL Service Management. While I’m no ITIL expert, the little bit of information that I’ve gleaned from these discussions has shown me that there are strong parallels between SOA and ITIL Service Management.

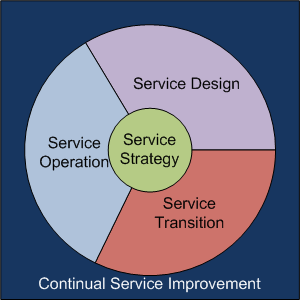

There’s the obvious connection that both ITIL Service Management and SOA contain the term “service,” but it goes much deeper than that (otherwise, this wouldn’t be worth posting). There are five major domains associated with ITIL: service strategy, service design, service transition, service operation, and continual service improvement. Here’s a picture that I’ve drawn that tries to represent them:

Keeping it very simple, service strategy is all about defining the services. In fact, there’s even a process within that domain called “Service Portfolio Management.” Service Design, Service Transition, and Service Operation are analogous to the traditional software development lifecycle (SDLC): service design is where the service gets defined, service transition is where the service is implemented, and service operation is where the service gets used. Continual Service Improvement is about watching all aspects of each of these domains and striving to improve them.

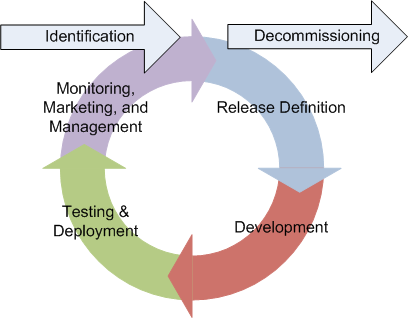

Now back to SOA side of the equation. I’ve previously posted on the change to the development process that is possible with SOA, most recently, this one. The essence of it is that SOA can shift the thinking from a traditional linear lifecycle that ends when a project goes live to a circular lifecycle that begins with identification of the service and ends with the decommissioning of the service. Instead, the lifecycle looks like this:

The steps of release definition, development, testing, and deployment are the normal SDLC activities, but added to this is what I call the triple-M activity: monitoring, marketing, and management. We need to do the normal “keep the lights on” activities associated with monitoring, but we also need to market the service and ensure its continued use over time, as well as manage its current use and ensure that it is delivering the intended business value. If improvements can be made through either improvements in delivery or by delivering additional functionality, the cycle begins again. This is analogous to the ITIL Service Management Continual Service Improvement processes. While not shown, clearly there is some strategic process that guides the identification and decommissioning activities associated with services, such as application portfolio management. Given this, these two processes have striking similarities.
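For readers who think better in code than in diagrams, the circular lifecycle described above can be sketched as a small state machine. This is only my reading of the prose, written in Python for illustration; the stage names and the allowed transitions are assumptions, not a formal model from ITIL or from any tool.

```python
from enum import Enum


class Stage(Enum):
    IDENTIFICATION = "identification"
    RELEASE_DEFINITION = "release definition"
    DEVELOPMENT = "development"
    TESTING = "testing"
    DEPLOYMENT = "deployment"
    TRIPLE_M = "monitoring, marketing, and management"
    DECOMMISSIONED = "decommissioned"


# Allowed transitions. The key difference from a linear project lifecycle is
# that TRIPLE_M loops back to RELEASE_DEFINITION instead of simply ending.
NEXT_STAGES = {
    Stage.IDENTIFICATION: {Stage.RELEASE_DEFINITION},
    Stage.RELEASE_DEFINITION: {Stage.DEVELOPMENT},
    Stage.DEVELOPMENT: {Stage.TESTING},
    Stage.TESTING: {Stage.DEPLOYMENT},
    Stage.DEPLOYMENT: {Stage.TRIPLE_M},
    Stage.TRIPLE_M: {Stage.RELEASE_DEFINITION, Stage.DECOMMISSIONED},
    Stage.DECOMMISSIONED: set(),
}


def advance(current: Stage, target: Stage) -> Stage:
    """Move a service to the target stage, rejecting transitions the cycle does not allow."""
    if target not in NEXT_STAGES[current]:
        raise ValueError(f"cannot go from {current.value} to {target.value}")
    return target


# A service goes live, is watched for a while, and then gets a second release.
stage = Stage.IDENTIFICATION
for step in (Stage.RELEASE_DEFINITION, Stage.DEVELOPMENT, Stage.TESTING,
             Stage.DEPLOYMENT, Stage.TRIPLE_M, Stage.RELEASE_DEFINITION):
    stage = advance(stage, step)
print(stage.value)
```

Note that deployment never leads directly to the end of the lifecycle in this sketch. The only way out is through the triple-M stage, where someone decides whether to improve the service or decommission it.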

What’s the point?

The message that I want you to take away is that we should be thinking about application and “web” service delivery in the same way that we are thinking about ITIL service delivery. Many people think ITIL is only about IT operations and the infrastructure, but that’s not the case. If you’re a developer, it equally applies to the applications that you are building and delivering. Think of them in terms of services and the business value that they deliver. When the project for version 1 ends, don’t stop thinking about it. Put in place appropriate metrics and reporting to ensure that it is delivering the intended value and watch it on a regular basis. Understand who your “users” are (it could be other systems and the people responsible for them), make sure they’re happy, and seek out new ones. Adopt a culture of continuous improvement, rather than simply focusing on meeting the schedule and the budget and then waiting for the next project assignment.

A Key Challenge of Context Driven Architecture

The idea of context-driven architecture, as coined by Gartner, has been bouncing around my head since the Gartner AADI and EA Summits I attended in June. It’s a catch phrase that basically means that we need to design our applications to take into account as much as possible of the context in which they are executed. It’s especially true for mobile applications, where the whole notion of location awareness has people thinking about new and exciting things, albeit more so in the consumer space than in the enterprise. While I expect to have a number of additional posts on this subject in the future, a recent discussion with a colleague on Data Warehousing inspired this post.

In the data warehousing/business intelligence space, there is certainly a maturity curve to how well an enterprise can leverage the technology. In its most basic form, there’s a clear separation between the OLTP world and the DW/BI world. OLTP handles the day to day stuff, some ETL (extract-transform-load) job gets run to put it into DW, and then some user leverages the BI tools to do analytics or run reports. These tools enable the user to look for trends, clusters, or other data mining type of activities. Now, think of a company like Amazon. Amazon incorporates these trends and clusters into the recommendations it makes for you when you log in. Does Amazon’s back-end run some sophisticated analytics process every time I log in? I’d be surprised if it did, since performing that data mining is an expensive operation. I’m guessing (and it is a guess, I have no idea on the technical details inside of Amazon) that the analytics go on in the background somewhere. If this is the case, then this means that the incorporation of the results of the analytical processing (not the actual analytics itself) is coded into the OLTP application that we use when we log in.

So what does this have to do with context-driven architecture? Well, what I realized is that it all comes down to figuring out what’s important. We can run a BI tool on the DW, get a visual representation, and quickly spot clusters, etc. Humans are good at that. How do you tell a machine to do that, though? We can’t just expect to hook our customer facing applications up to a DW and expect magic to happen. Odds are, we need to take the first step of having a real person look at the information, decide what is relevant or not with the assistance of analytical tools. Only then can we set up some regular jobs to execute those analytics and store the results somewhere that is easily accessible by an OLTP application. In other words, we need to do analysis to figure out what the “right” context is. Once we’ve established the correlation, now we can begin to leverage that context in a way that’s suitable for the typical OLTP application.
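Here is a rough Python sketch of that split between background analytics and the customer-facing application. It is purely illustrative: the co-purchase counting stands in for whatever analysis a real person decided was relevant, the function names and sample data are made up, and, as I said above, I have no idea how Amazon actually does this.

```python
from collections import Counter, defaultdict
from itertools import combinations


def build_recommendations(orders, top_n=2):
    """Background job: the expensive analytics, run on a schedule against the DW.

    Counts how often items are bought together and keeps the top matches per item.
    """
    co_counts = defaultdict(Counter)
    for basket in orders:
        for a, b in combinations(sorted(set(basket)), 2):
            co_counts[a][b] += 1
            co_counts[b][a] += 1
    return {
        item: [other for other, _ in counts.most_common(top_n)]
        for item, counts in co_counts.items()
    }


def recommend(precomputed, item):
    """OLTP path: a cheap lookup of stored results, with no analytics at request time."""
    return precomputed.get(item, [])


# Made-up order history standing in for data extracted into the warehouse.
orders = [
    ["book", "lamp"],
    ["book", "lamp", "desk"],
    ["book", "lamp"],
    ["desk", "pen"],
]

table = build_recommendations(orders)  # would run in the background
print(recommend(table, "book"))        # what the application does at login
```

The application only ever calls `recommend`, which is a dictionary lookup. Deciding that “bought together” is the context worth using is the human analysis step, and that is the part you can’t skip.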

So, if you’re like me, and just starting to noodle on this notion, I’d first take a look at your maturity around your data warehousing and business intelligence tools. If you’re relatively mature in that space, then I expect that a leap toward context-driven applications probably won’t be a big stretch for you. If you’re relatively immature, you may want to focus on building that maturity up, rather than jumping into context-driven applications before you’re ready.

Governance and SOA Success

Michael Meehan, Editor-in-Chief of SearchSOA.com, posted a summary of a talk from Anne Thomas Manes of the Burton Group given at Burton’s Catalyst conference in late June. In it, Anne presented the findings of a survey that she did with colleague Chris Haddad on SOA adoption.

Michael stated:

Manes repeatedly returned to the issues of trust and culture. She placed the burden for creating that trust on the shoulders of the IT department. “You’re going to have to create some kind of culture shift,” she said. “And you know what? You’ve been breaking their hearts for so many years, it’s up to you to take the first step.”

I’m very glad that Anne used the term “culture shift,” because that’s exactly what it is. If there is no change in the way IT defines and builds solutions other than slapping a new technology on the same old stuff, we’re not going to even put a dent in the perceptions the rest of the organization has about IT, and are even at risk of making it worse.

The article went on to discuss Cigna Group Insurance and their success, after a previous failure. A new CIO emphasized the need for culture change, starting with understanding the business. The speaker from Cigna, Chad Roberts, is quoted in Michael’s article as saying, “We had to be able to act and communicate like a business person.” He also said, “We stopped trying to build business cases for SOA, it wasn’t working. Instead use SOA to strengthen the existing business case.” I went back and re-read a previous post that I thought made a similar point, but found that I wasn’t this clear. I think Chad nails it.

In a discussion about the article in the Yahoo SOA group, Anne followed up with a few additional nuggets of wisdom.

One thing I found really surprising was that the people from the successful initiatives rarely talked about their infrastructure. I had to explicitly solicit the information from them. From their perspective, the technology was the least important aspect of their initiative.

This is great to hear. While there are plenty of us out there that have stated again and again that SOA isn’t about applying WS-*/REST or buying an ESB, it still needs to be emphasized. A surprising comment, however, was this one:

They rarely talked about design-time governance — other than improving their SDLC processes. They implemented governance via better processes. Most of it was human-driven, although many use repositories to manage artifacts and coordinate lifecycle. But again, the governance effort was less important than the investment in social capital.

I’m still committed to my assertion that governance is critical to a successful SOA initiative–but only because governance is a means to effect behavioral change. The true success factor is changing behavior.

I think what we’re seeing here is the effect of governance becoming a marketing term. The telling statement is in Anne’s second paragraph: governance is a means to effect behavioral change. My definition of governance is the people, policies, and processes that an organization employs to achieve a desired behavior. It’s all about behavior change in my book. So, when the new Cigna CIO makes a mandate that IT will understand the business first and think about technology second, that’s a desired behavior. What are the policies that ensured this happened? I’m willing to bet that there were some significant changes to the way projects were initiated at Cigna as part of this. Were the policies that, if adhered to, would lead to a funded project documented and communicated? Did they educate first, and then only enforce where necessary? That sounds like governance to me, and guess what: it led to success!

Mentoring and Followup to Clarity of Purpose

James McGovern posted his own thoughts in response to my Clarity of Purpose post. In it, he asks a couple of questions of me.

“I wonder if Todd has observed that trust as a concept is fast declining.” I don’t know that I’d say it is declining, but I would definitely say that it is a key differentiator between well-functioning organizations and poorly functioning organizations. I think it’s natural that as an organization grows, you have to work harder to keep the trust in place. How many people in a small town say they trust their local government versus a big city, let alone the country? The same holds true for typical corporate IT. As James points out, trust gets eroded easily when things are over-promised and under-delivered. Specifically in the domain of enterprise architecture, we’re at particular risk because we often play the role of the salesperson, but the implementation is left to someone else. When things go bad, the customer directs their venom at the salesperson, rather than digging deep to understand root cause. We also too frequently look to point fingers rather than fix the problem. It’s unfortunate that too many organizations have a “heads must roll” approach which doesn’t allow people to make mistakes and learn. A single mistake is a learning opportunity. Making the same mistake over and over is a problem that must be dealt with.

“Maybe Todd can talk about his ideas around the importance of mentoring in a future blog entry as this is where EA collectively is weak and declining.” Personally, I think it’s a good practice to always have some amount of your enterprise architect’s time dedicated to project mentoring. Don’t assign them as a member of the project team where the project manager controls their tasks, rather, encourage them to actively work with the project team, keep up to date on what they are doing, and look for opportunities where you can help. The most important thing, however, is to have an attitude of contributing the help that is needed, rather than contributing your own wisdom. If you come in pontificating, going off on tangents, and expressing an “I know better” attitude, you’ll only get resentment. If, instead, you seek first to understand, as Stephen Covey suggests, you’ll have much better luck. While I was working as a consultant, I had a client who indicated that what they really needed was a mentor. For some consultants, this would have been perceived as the kiss of death, because it can result in an open-ended, warm body engagement, without clear expectations and deliverables. There’s a lot of risk when expectations aren’t clear and can change on a moment’s notice. In reality, the engagement was simply to listen and then offer suggestions and advice to either confirm what they already knew but lacked confidence to go after with conviction, or to suggest things that they might not have thought about. It’s not an easy task to do, but it is absolutely critical. I think an architect who is willing to stand by his or her strategy and see it through to completion, not necessarily from a hands-on perspective, but from a mentoring and guidance perspective, can build far more trust.

Think Orchestration, not BPEL

I was made aware of this response from Alex Neihaus of Active Endpoints on the VOSibilities blog to a podcast and post from David Linthicum. VOS stands for Visual Orchestration System. Alex took Dave to task for some of the “core issues” that Dave had listed in his post.

I read both posts and listened to Dave’s podcast, and as is always the case, there are elements of truth on both sides. Ultimately, I feel that the wrong question was being asked. Dave’s original post has a title of “Is BPEL irrelevant?” and the second paragraph states:

OK, perhaps it’s just me but I don’t see BPEL that much these days, either around its use within SOA problem domains I’m tracking, or a part of larger SOA strategies within enterprises. Understand, however, that my data points are limited, but I think they are pretty far-reaching relative to most industry analysts’.

To me, the question is not whether BPEL is relevant or not. The question is: how relevant is orchestration? When I first learned about BPEL, I thought, “I need a checkbox on my RFPs/RFIs for this to make sure import/export is supported,” but that was it. I knew the people working with these systems would not be hand-editing the XML for BPEL; they’d be working with a graphical model. To that end, the BPMN discussion was much more relevant than BPEL.

Back to the question, though. If we start talking about orchestration, we get into two major scenarios:

- The orchestration tool is viewed as a highly productive development environment. The goal here is not to externalize processes, but rather to reduce the time it takes to build particular solutions. Many of the visual orchestration tools leverage a significant number of “actions” or “adapters” that provide a visual metaphor for very common operations such as data retrieval or ERP integration. The potential exists for significant productivity gains. At the same time, many of the things that fall into this category aren’t what I would call frequently changing processes. The whole value add of being able to change the process definition more efficiently really doesn’t apply.

- The orchestration tool is viewed as a facility for process externalization. Here’s the scenario where the primary goal is flexibility in implementing process changes rather than developer productivity. I haven’t seen this scenario as often. In other words, the size of the space of “rapidly changing business processes” is debatable. I certainly have seen changes to business rules, but not necessarily to the process itself. Of course, on the other hand, many processes aren’t defined to begin with, so the culture is merely reacting to change. We can’t say what we’re changing from or to, but we know that something in the environment is different.

So what’s my opinion? I still don’t get terribly excited about BPEL, but I definitely think orchestration tools are needed for two reasons:

- Developer productivity

- Integrated metrics and visibility

Most of the orchestration tools out there are part of a larger BPM suite, and the visibility that they provide on how long activities take is a big positive in my book (but I’ve always been passionate about instrumentation and management technologies). As for the process externalization, the jury is still out. I think there are some solid domains for it, just as there are for things like complex event processing, but it hasn’t hit mainstream yet at the business level. It will continue to grow outward from the developer productivity standpoint, but that path is heavily focused on IT system processes, not business processes (just like OO is widely used within development, but you don’t see non-IT staff designing object models very often). As for BPEL, it’s still a mandatory checkbox, and as we see separation of modeling and editing from the execution engine, the need for it may grow. At the same time, how many organizations have separate Java tooling for when they’re writing standalone code versus writing Java code for SAP? We’ve been dealing with that for far longer, so I’m not holding my breath waiting for a clean separation between tools and the execution environment.
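To make the "integrated metrics and visibility" point concrete, here is a minimal sketch in Python of an orchestration loop that times each activity as it runs. It is not how any particular BPM suite or BPEL engine works; `run_process`, the step functions, and the order data are all hypothetical names invented for illustration.

```python
import time
from typing import Any, Callable

# Hypothetical step signature: each step takes the shared context and returns a new one.
Step = Callable[[dict[str, Any]], dict[str, Any]]


def run_process(steps: list[tuple[str, Step]], context: dict[str, Any]):
    """Run the named steps in order and record how long each one takes."""
    timings: dict[str, float] = {}
    for name, step in steps:
        start = time.perf_counter()
        context = step(context)
        timings[name] = time.perf_counter() - start
    return context, timings


# Illustrative steps only; a real tool would use adapters for data retrieval, ERP calls, etc.
def look_up_customer(ctx: dict[str, Any]) -> dict[str, Any]:
    return {**ctx, "customer": {"id": ctx["customer_id"], "tier": "gold"}}


def price_order(ctx: dict[str, Any]) -> dict[str, Any]:
    discount = 0.1 if ctx["customer"]["tier"] == "gold" else 0.0
    return {**ctx, "total": round(ctx["amount"] * (1 - discount), 2)}


# The process definition is data, separate from the code of the individual steps.
ORDER_PROCESS = [("look_up_customer", look_up_customer), ("price_order", price_order)]

result, timings = run_process(ORDER_PROCESS, {"customer_id": 42, "amount": 100.0})
for name, seconds in timings.items():
    print(f"{name}: {seconds:.6f}s")
```

Because the per-activity timings come from the loop rather than from each step, every process built this way gets the same visibility for free, which is the part of orchestration I find most valuable.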

The Real SOA Governance Dos and Don’ts

Dave Linthicum had a recent post called SOA Governance Dos and Don’ts which should have been titled, “SOA Governance Technology Selection Dos and Don’ts.” If you use that as the subject, then there’s some good advice. But once again, I have to point out that technology selection is not the first step.

My definition of governance is that it is the people, policies, and processes that ensure desired behavior. SOA governance, therefore, is the people, policies, and processes that ensure desired behavior in your SOA efforts. So what are the dos and don’ts?

- Do: Define what your desired behavior is. It must be measurable. You need to know whether you’re achieving the behavior or not. It should also be more than one statement. It should address both the behavior of your development staff and the run-time behavior of the services (e.g., we don’t want any one consumer to be able to starve out other consumers).

- Don’t: Skip that step.

- Do: Ensure that you have people involved with governance who can turn those behaviors into policies.

- Don’t: Expect that one set of people can set all policies. As you go deep in different areas, bring in appropriate domain experts to assist in policy definition.

- Do: Document your policies.

- Don’t: Rely on the people to be the policies. Your staff has to know what the policies are ahead of time. If they have to guess what some reviewer wants to see, odds are they’ll guess wrong, or the reviewer may be more concerned about flaunting authority rather than achieving desired behavior.

- Do: Focus on education on the desired behavior and the policies that make it possible.

- Don’t: Rely solely on a police force to ensure compliance with policies.

- Do: Make compliance the path of least resistance.

- Don’t: Expect technologies to define your desired behavior or policies that represent it.

- Do: Use technology where it can improve the efficiency of your governance practices.

There’s my take on it.
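As one illustration of "measurable" and of using technology to make governance more efficient, here is a minimal sketch in Python that writes two policies down as checks against a service record. Everything in it is an assumption made for the example: the `Service` fields, the `POLICIES` list, and the 50% threshold are hypothetical, and a consumer's share of calls is only a rough proxy for starving out other consumers.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Service:
    # Hypothetical descriptor fields, invented for this example.
    name: str
    in_registry: bool
    calls_by_consumer: dict[str, int]


@dataclass
class Policy:
    description: str
    check: Callable[[Service], bool]  # returns True when the service complies


def max_consumer_share(service: Service) -> float:
    """Largest fraction of total calls made by any single consumer."""
    total = sum(service.calls_by_consumer.values())
    if total == 0:
        return 0.0
    return max(service.calls_by_consumer.values()) / total


POLICIES = [
    # Development-time behavior: the service has been entered into the registry.
    Policy("Service is entered in the registry", lambda s: s.in_registry),
    # Run-time behavior: 0.5 is an arbitrary threshold chosen for illustration.
    Policy("No single consumer exceeds 50% of calls",
           lambda s: max_consumer_share(s) <= 0.5),
]


def violations(service: Service) -> list[str]:
    """Return the descriptions of every policy the service does not meet."""
    return [p.description for p in POLICIES if not p.check(service)]


orders = Service("orders", in_registry=True,
                 calls_by_consumer={"web": 900, "batch": 100})
report = violations(orders)
print(report)
```

The people and the policies still have to come first. The code only helps once someone has decided what the desired behavior is and documented it where staff can see it ahead of time.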

Gartner EA: EA and SOA

This is my last post from the summits (actually, I’m already at the airport). This morning, I participated in a panel discussion on EA and SOA as part of the EA Summit with Marty Colburn, Executive VP and CTO for FINRA; Maja Tibbling, Lead Enterprise Architect for Con-way; and John Williams, Enterprise Architect from QBE Regional Insurance. The panel was jointly moderated by Dr. Richard Soley of the OMG and SOA Consortium and Bruce Robertson of Gartner. It was another excellent session in my opinion. We all brought different perspectives on how we had approached SOA and EA, yet there were some apparent commonalities. Number one was the universal answer to what the most challenging thing was with SOA adoption: culture change.

There were a large number of questions submitted, and unfortunately, we didn’t get to all of them. The conference director, Pascal Winckel (who did a great job by the way), has said he will try to get these posted onto the conference blog, and I will do my best to either answer them here on my blog or via comments on the Gartner blog. As always, if you have questions, feel free to send them to me here. I’d be happy to address them, and will keep all of them anonymous, if so desired.

Gartner EA: Case Study

I just attended a case study at the summit. The presenter requested that their slides not be made available, so I’m being cautious about what I write. There was one thing I wanted to call out, which was that the case study described some application portfolio analysis efforts and mapping of capabilities to the portfolio. I’ve recently been giving a lot of thought to the analysis side of SOA, and how an organization can position itself to build the “right” services. One of the techniques I thought made sense was exactly what the presenter described with the mapping of capabilities. Easier said than done, though. I think most of us would agree that performing analysis outside of the context of a project could provide great benefits, but the problem is that most organizations have all their resources focused on running the business and executing projects. This is a very tactical view, and the usual objection is that, as a result, they can’t afford to do a more strategic analysis. It was nice to hear from an organization that could.