Archive for the ‘Governance’ Category

Governance: Are you on the left or the right?

Dan Foody of Progress Software posted a followup to my last post entitled, “Less Governance is Good Governance.” Clearly, when it comes to SOA governance, Dan falls on the right. For those of you not in the US, the right, when used in the political sense, refers to the Republican party. The Republican party is typically the champion of less government. On the opposite side of the political fence, over on the left, are the Democrats. The Democratic party is typically associated with big government and more regulation. This post isn’t about US politics, however; it’s about SOA governance.

In line with his right-facing view, Dan’s final statement in his post says, “As much governance as is absolutely necessary, but no more.” Well, the United States has had successful times when each party held the majority in Congress, and likewise for the presidency. So, I don’t think that it’s a uniform truth that less governance is good governance. Good governance is not defined by how quickly your team comes to consensus; it’s defined by whether or not you achieve your desired behavior. Sometimes, that may only require a few policies. Other times, it may require lots of policies, and lots of regulation. It is completely dependent on the current behavior of your organization, the behavior you need to have to be successful, and the degree to which everyone in the organization understands it and agrees with it, which is why communication is so important. Left? Right? Both can be successful; it’s all about where you are and where you need to go.

Governance does not imply Command and Control

In a recent discussion I had on SOA Governance with Brenda Michelson, program director for the SOA Consortium, she passed along a link to this article from Business Week. It’s the story of Tom Coughlin, coach of the Super Bowl champion New York Giants, and how he had to change from his reputation of an “autocratic tyrant” in order for the team to ultimately succeed.

What does this have to do with governance? When you think of governance, what comes to mind? My suspicion is that for most people, it’s not a positive image. At its extreme worst, I’ve heard many people describe governance as a slow, painful process that requires investing significant time preparing for a review by people who are out of touch with what a project is trying to do. The review ultimately results in the reviewers flaunting authority and the project team taking their lumps, and then everybody goes back to doing what they were doing with no real change in behavior other than increased animosity in the organization. In other words, exactly the situation that Tom Coughlin had with previous teams.

The fact is that governance is a required activity of any organization. Governance is the way in which an organization leverages people, policies, and processes to achieve a desired behavior. In the case of the New York Giants, their desired behavior was winning the Super Bowl. In years past, the only people involved with setting the policies were the coaching staff, and the processes consisted of yelling and screaming. It didn’t work. When the desired behavior wasn’t achieved, change was needed, and that change was made in the governance. Players became involved in setting policies through a leadership council. That same council also became part of the governance process, both in educating other players about the policies and in ensuring compliance.

Unfortunately, when governance gets discussed, people naturally assume a command and control structure. When an individual sees a new statement “Thou shall do this” or “Thou shall not do that,” they think command and control, especially if they weren’t involved with the setting of the policy. As we grow, this sentiment becomes increasingly important. How many of us that live in large countries feel our politicians are disconnected from us, even if they are elected representatives? Those policies still need to be set, however, and there will always be a need for some form of authority to establish the policies. The key is how the “authority” is established and how they then communicate with the rest of the organization. If the authority is established by edict, and only consists of one-way communication (down) from the authority, guess what? You have a dictatorship with a command-and-control structure. If you set up representative groups, whether formal or informal, and focus on bi-directional communication, you can conquer that command-and-control mentality. The risk with that approach is that the need for “authority” is forgotten. Decisions still must be made and policies must be set, and if the group-think can’t do that, there will still be problems. Morale may not be impacted in the same negative way as with a command-and-control approach, but the end result is that you’re still not achieving the desired behavior.

Good governance is necessary for success, and open communication and collaboration are necessary for good governance. My recommendation is that if you are in a position of authority (a policy setter and/or enforcer), you must keep the lines of communication open with your constituents and help them to set policies, change policies that are doing more harm than good, and understand the reasons behind policies. If you are a constituent, you need to participate in the process. If a policy is causing more harm than good in your opinion, make it known to the authorities. Sometimes that may result in a policy change. Sometimes it may result in you changing your expectations and seeing the reasons why compliance is necessary.

Governance and SOA Success

Michael Meehan, Editor-in-Chief of SearchSOA.com, posted a summary of a talk from Anne Thomas Manes of the Burton Group given at Burton’s Catalyst conference in late June. In it, Anne presented the findings of a survey that she did with colleague Chris Haddad on SOA adoption.

Michael stated:

Manes repeatedly returned to the issues of trust and culture. She placed the burden for creating that trust on the shoulders of the IT department. “You’re going to have to create some kind of culture shift,” she said. “And you know what? You’ve been breaking their hearts for so many years, it’s up to you to take the first step.”

I’m very glad that Anne used the term “culture shift,” because that’s exactly what it is. If there is no change in the way IT defines and builds solutions other than slapping a new technology on the same old stuff, we’re not going to even put a dent in the perceptions the rest of the organization has about IT, and are even at risk of making it worse.

The article went on to discuss Cigna Group Insurance and their success, after a previous failure. A new CIO emphasized the need for culture change, starting with understanding the business. The speaker from Cigna, Chad Roberts, is quoted in Michael’s article as saying, “We had to be able to act and communicate like a business person.” He also said, “We stopped trying to build business cases for SOA, it wasn’t working. Instead use SOA to strengthen the existing business case.” I went back and re-read a previous post that I thought made a similar point, but found that I wasn’t this clear. I think Chad nails it.

In a discussion about the article in the Yahoo SOA group, Anne followed up with a few additional nuggets of wisdom.

One thing I found really surprising was that the people from the successful initiatives rarely talked about their infrastructure. I had to explicitly solicit the information from them. From their perspective, the technology was the least important aspect of their initiative.

This is great to hear. While there are plenty of us out there that have stated again and again that SOA isn’t about applying WS-*/REST or buying an ESB, it still needs to be emphasized. A surprising comment, however, was this one:

They rarely talked about design-time governance, other than improving their SDLC processes. They implemented governance via better processes. Most of it was human-driven, although many use repositories to manage artifacts and coordinate lifecycle. But again, the governance effort was less important than the investment in social capital.

I’m still committed to my assertion that governance is critical to a successful SOA initiative–but only because governance is a means to effect behavioral change. The true success factor is changing behavior.

I think what we’re seeing here is the effect of governance becoming a marketing term. The telling statement is in Anne’s second paragraph: governance is a means to effect behavioral change. My definition of governance is the people, policies, and processes that an organization employs to achieve a desired behavior. It’s all about behavior change in my book. So, when the new Cigna CIO makes a mandate that IT will understand the business first and think about technology second, that’s a desired behavior. What are the policies that ensured this happened? I’m willing to bet that there were some significant changes to the way projects were initiated at Cigna as part of this. Were the policies that, if adhered to, would lead to a funded project documented and communicated? Did they educate first, and then only enforce where necessary? That sounds like governance to me, and guess what: it led to success!

Comments on TUCON 2008 Podcast

Dana Gardner moderated a panel discussion at TIBCO’s User Conference (TUCON) on Service Performance Management and SOA. There were some great nuggets in this session, and I encourage you to listen to the podcast or read the transcript. The panelists were Sandy Rogers of IDC, Joe McKendrick, Anthony Abbattista of Allstate, and Rourke McNamara of TIBCO.

First, Sandy Rogers of IDC commented that what she finds interesting “is that even if you have one service that you have deployed, you need to have as much information as possible around how it is being used and how the trending is happening regarding the up-tick in the consumption of the service across different applications, across different processes.” I couldn’t agree more on this item. I have seen firsthand the value in collecting this information and making it available. Unfortunately, all too often, the need for this is missed when people are looking for funding. Funding is focused on building the service and getting it out the door on-time and on-budget, and operational concerns are left to classic up/down monitoring that never leaves the walls of IT operations. We need to adjust the culture so that monitoring of the usage is a key part of the project success. How can we make any statements on the value of a service, or any IT solution for that matter, if we aren’t monitoring how that service is being used? For example, I frequently see projects that are proposed to make some manual process more efficient. If that’s the value play, are we currently measuring the cost of the manual activity, and how are we quantifying the cost of doing it the new way? Looking at the end database probably isn’t good enough, because that only shows the end results of processing, not the pace of processing. Automating a process enables you to process more, but if demand is stable, the end result will still look the same. The difference lies in the fact that people (and systems) have more time available for other activities.
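
To make the usage-monitoring point concrete, here is a minimal sketch of the kind of record that goes beyond up/down checks. It is only an illustration: the `UsageLog` class, its method names, and the idea of keying calls by day and consumer are assumptions made for this example, not something described in the podcast or tied to any particular monitoring product.

```python
from collections import Counter, defaultdict
from datetime import date


class UsageLog:
    """Hypothetical in-memory record of service calls, keyed by day and consumer."""

    def __init__(self):
        # calls[day][consumer] -> number of requests seen that day
        self.calls = defaultdict(Counter)

    def record(self, consumer: str, day: date) -> None:
        """Count one request made by `consumer` on `day`."""
        self.calls[day][consumer] += 1

    def daily_totals(self) -> dict:
        """Total requests per day, in date order, to show the consumption trend."""
        return {day: sum(c.values()) for day, c in sorted(self.calls.items())}

    def consumers(self) -> set:
        """Every consumer that has called the service at least once."""
        return {name for c in self.calls.values() for name in c}
```

Even something this simple answers the two questions raised above: who is consuming the service, and whether consumption is trending up.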

Sandy went on to state:

They (organizations) need a lot more visibility and an understanding of the strains that are happening on the system, and they need to really build up a level of trust. Once they can add on to the amount of individuals that have that visibility, that trust starts to develop, more reuse starts to happen, and it starts to take off.

Joe picked up on this, stating “that the foundation of SOA is trust.” No arguments here. If the culture of the organization is one of distrust, I see them as having very slim chances of any success with SOA. Joe correctly called out that a lot of this hinges on governance. I personally believe that governance is how an organization changes behavior and culture. Lack of trust is a behavior and culture issue. Only by clearly stating what the desired behavior is and establishing policies that create that behavior can culture change happen.

Anthony provided a great anecdote from the roll-out of their ESB, stating that they spent 18 months justifying its use and dealing with every outage starting with someone saying, “TIBCO is down.” In reality, it was usually some back end service or component being down, but since the TIBCO ESB was the new thing, everyone blamed it. By having great measurements and monitoring, they were able to get to root cause. I had the exact same situation at a prior company, and it was fun watching the shift as people blamed the new infrastructure, and I would say, “No, it’s up, and the metrics it has collected make me think the problem is here.”

A bit later in the podcast, Joe mentioned a conversation with Rourke earlier in the day, commenting that “predictive analytics, which is a subset of business intelligence (BI), is now moving into the systems management space.” This sounds very familiar…

Rourke also made a great comment when referring to a customer who said “their biggest fear is that their SOA initiative will be a victim of its own success.” He went on to say:

That could make SOA a victim of its own success. They will have successfully sold the service, had it reused over and over and over and over again. But, then, because of that reuse, because they were successful in achieving the SOA dream, they now are going to suffer. All that business users will see from that is that “SOA is bad,” it makes my applications more fragile, it makes my applications slow down because so many people are using the same stuff.

That was a great point. SOA, if it is successful, should result in an increase in the number of dependencies associated with an IT solution. Many people shudder at that statement, but the important thing is that there should be those dependencies. What’s bad is when those dependencies aren’t effectively managed and monitored. The lack of effective management results in complicated, ad hoc processes that give the perception that the technology landscape is overly complex.

This was one of the better panel discussions I’ve heard in a while. I encourage you to give it a listen.

Integration Competency Centers and SOA

Lorraine Lawson of IT Business Edge had a post last week that linked to my previous posts on Centers of Excellence and Competency Centers entitled, “The Best Practice That Companies Ignore.” In this article, she references an eBizQ survey that revealed that only 9% of respondents had a competency center or center of excellence. While she wasn’t surprised at this, she was surprised at recent comments from Ken Vollmer of Forrester that said the same is true for Integration Competency Centers, a concept that has been around for several years. In her discussion with Ken, she states he indicated that “any organization with mid-to-high-level integration issues could benefit from an ICC.” My take on the discussion was that Ken feels that every mid to large organization should have one (my opinion, neither he nor Lorraine stated this).

The real issue I had with some of the justifications for having an ICC was an underlying assumption that integration is a specialized discipline. While this was the case 8-10 years ago, I think we’ve made significant progress. I actually think there is a specific detriment that an ICC can have to an SOA effort. When an ICC exists, integration is now someone else’s problem. I worry about my world, and I leave it up to the integration experts to make my world accessible to everyone else. It’s this type of thinking that will doom an SOA effort, because everyone’s first concern is themselves, not everyone else. To do SOA right, your service teams should be consumer-focused first.

Regarding ICCs, the reason I don’t think there is broad adoption of the concept is that the majority of companies, even large enterprises, only have one or two major systems that represent 80% of the integration effort, typically either mainframe integration or ERP integration. Companies that have grown via acquisition may have a much more difficult problem with multiple mainframes, multiple ERP systems, etc., and for them, ICCs are a good fit. I just don’t think that’s 80% of the mid-to-large businesses.

The last piece of the message, and where she linked to my posts, deals with whether or not the ICC should be temporary. Ken’s comment was that there are always new integration tools coming out, and the ICC should be responsible for them. I don’t agree with this. There are also new development tools coming out, and I don’t see companies with a development competency center. Someone does have to be responsible for integration technologies, but this could easily be part of the responsibilities for a middleware technology architect.

Applying the same argument to SOA, again, if it’s technology-focused, I don’t buy it. If we get into the space of SOA Advocacy and Adoption, then I think there’s some value. Clearly, individual projects building services do not constitute SOA. Given that, who is guiding the broader SOA effort? Perhaps what is ultimately needed is a SOA Advocacy Center or SOA Adoption Center that is responsible for seeing it forward. There’s no formula for this, though. A person dedicated to being the SOA Champion with excellent relationships in the organization could potentially do this on their own. Ultimately, this becomes just like any other strategic initiative. To achieve the strategy, the organization must put proper leadership in place. If it’s one person, great. If it’s a standing committee, great. Just as long as it is positioned for success. Putting one person in charge who lacks the relationships won’t cut it, but putting a committee together to establish those relationships will. Whether it’s permanent or not is dependent on whether the activities can become standard practice, or if there is a continual need for leadership, guidance, and governance.

The Real SOA Governance Dos and Don’ts

Dave Linthicum had a recent post called SOA Governance Dos and Don’ts which should have been titled, “SOA Governance Technology Selection Dos and Don’ts.” If you use that as the subject, then there’s some good advice. But once again, I have to point out that technology selection is not the first step.

My definition of governance is that it is the people, policies, and processes that ensure desired behavior. SOA governance, therefore, is the people, policies, and processes that ensure desired behavior in your SOA efforts. So what are the dos and don’ts?

- Do: Define what your desired behavior is. It must be measurable. You need to know whether you’re achieving the behavior or not. It should also be more than one statement. It should address both the behavior of your development staff and the run-time behavior of the services (e.g. we don’t want any one consumer to be able to starve out other consumers).

- Don’t: Skip that step.

- Do: Ensure that you have people involved with governance who can turn those behaviors into policies.

- Don’t: Expect that one set of people can set all policies. As you go deep in different areas, bring in appropriate domain experts to assist in policy definition.

- Do: Document your policies.

- Don’t: Rely on the people to be the policies. Your staff has to know what the policies are ahead of time. If they have to guess what some reviewer wants to see, odds are they’ll guess wrong, or the reviewer may be more concerned about flaunting authority rather than achieving desired behavior.

- Do: Focus on education on the desired behavior and the policies that make it possible.

- Don’t: Rely solely on a police force to ensure compliance with policies.

- Do: Make compliance the path of least resistance.

- Don’t: Expect technologies to define your desired behavior or policies that represent it.

- Do: Use technology where it can improve the efficiency of your governance practices.
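
As an illustration of what “measurable” can mean for a run-time behavior such as the starvation example in the first item, here is a minimal sketch of a check that flags any consumer taking more than a set share of a service’s traffic. The function name, the input shape, and the `max_share` threshold are hypothetical choices made for this example, not recommended values or part of any governance product.

```python
from collections import Counter


def consumers_over_share(requests, max_share=0.5):
    """Return consumers whose share of requests in a window exceeds max_share.

    `requests` is an iterable of consumer names, one entry per request.
    `max_share` is a hypothetical policy threshold, not a recommended value.
    """
    counts = Counter(requests)
    total = sum(counts.values())
    if total == 0:
        # No traffic in the window, so nobody can be over the limit.
        return []
    return sorted(name for name, n in counts.items() if n / total > max_share)
```

The point is not the code itself but that the policy is written down in a form that can be checked, rather than left to a reviewer’s judgment.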

There’s my take on it.

Gartner AADI: Application Strategies

Presenter: Andy Kyte

The title of this session is, “If You Had an Application Strategy, What Would It Look Like?” So far, I think I’m going to like it, because he’s emphasizing the need to manage the application lifecycle. That’s application lifecycle, not application development lifecycle. The application lifecycle ends when the last version of the application is removed from production. He’s emphasizing that an application is both an asset and a liability, with the liability being all of the people, technologies, and skills required to sustain it.

He’s now getting a ton of laughs by using a puppy/dog metaphor. He stated that we don’t buy a puppy, we buy a dog. It may be all cute and playful when we get it, but it will grow into a dog that sheds, eats, etc. Applications are the same way. Great quote just now: “Business cases are focused on putting applications in, and not on what to do after it. We are contributed to the problem by not addressing this.” He emphasizes that an application strategy should cover the next seven years, or half the expected remaining life of the application, whichever is the greater.

He’s now talking about stakeholder management and hitting on all the points I usually mention when talking about Service Lifecycle Management. The application is an asset, it must have an individual who is responsible for it, lifecycle decisions should be transparent, and all stakeholders (i.e. users of the application) must be identified and actively encouraged to play some role in the governance of the application.

The rest of the session is focusing in on the creation of the strategy document and making sure that it is a living, useful document. He’s emphasized that it is a plan, but also stressed the first law of planning: “No plan survives contact with the enemy.” It’s recognized that the plan is based on assumptions about the future. By documenting those assumptions, including leading indicators, leading contra-indicators, and expected timings, we can continually monitor and change the plan.

Overall, this is a very, very good session. What’s great about this is it is promoting a genuine change in thinking in the way that probably 90% of the companies here operate. Add a great speaker to the mix, and you’ve got a very good talk.

Gartner AADI: Application and SOA Governance

Presenter: Matt Hotle

“Governance is the key to predictable results” is the title of his first slide. At first glance, I disagreed with this statement, but as he explained it, he emphasized the use of policies and how they guide decision making. That I agree with. I don’t know if I’m comfortable going so far as to say it’s the key to predictable results. I think it’s the term “predictable” that’s causing my discomfort. As I’ve said before, governance is about achieving a desired behavior, which is a slightly different view than predictable results. When I hear “desired behavior,” I don’t think of standard processes; when I hear “predictable results,” I do. Perhaps I’m nitpicking since I’m somewhat passionate about this space.

BTW, 92 minutes into Steve Jobs’ keynote, iPhone 3G has just been announced. Are they selling them anywhere in the Orlando World Center Marriott? No? Rats. Now back to governance.

The slide he’s showing now has one very good nugget. He states, “SOA Governance needs… a funding model to maintain services as assets (service portfolio management/chargeback).” I’ve always felt that if an organization wants to do services the right way (see Service Lifecycle Management), it would inevitably wind up challenging the typical project-based funding model in most IT shops. Matt is now talking about the term “application” and while he didn’t go as far as I have in the past (see The End of the Application), he did make it clear that the notion of “application” needs to change. He just hit another key point with me! He said that what he’s outlining is “product management” and most IT shops don’t have a clue how to do product management. Boy does that sound very familiar (see this, this, this, this, and listen to this).

Gartner AADI: World Class Governance

Susan Landry and Matt Hotle are giving this presentation.

Matt had a couple of good quotes in the introduction:

- Governance is a framework that allows management to be successful.

- Governance is the set of processes that allow us to safely move from the past to the present and into the future.

Susan just covered a key topic, which was to perform stakeholder analysis. Each stakeholder has their own interests, political agendas, etc. I can agree with this. I define governance as the people, policies, and processes an organization puts in place to achieve desired behavior. A huge problem will ensue if there isn’t agreement among the stakeholders on what the desired behavior is, so getting them all on the same page and understanding their differences is very important.

The session is now over. One thing I disagreed with a bit was that, in their definition of governance, they limited it to the policies and processes associated with the efficient allocation of resources. I think this is only one aspect of it. As I called out earlier, it’s about achieving desired behaviors. The efficient allocation of resources is only one contributor to it.

Center of Excellence, Part II

I should know better than to blog late at night. I will never be classified as a night person. Anyway, in reading back over my post yesterday on Centers of Excellence or Competency Centers, I never quite finished my train of thought, but a post from the SOA Consortium blogs by Brenda Michelson refreshed my memory. This post recaps a podcast from their March meeting that discussed the topic of SOA Centers of Excellence. In the post, she called out that the panelists articulated the skills required to operate a SOA Center, which included:

- Project/Portfolio Management

- Service Design

- Business Knowledge

- Technical Aptitude

- Communication

- Teaching

- Governance

This list reminded me of a different view on COEs and Competency Centers. A challenge with SOA adoption is leadership. Who drives the organization’s adoption of SOA? If you’re like me, and don’t view SOA as just a technical thing, there’s no easy answer to the question. While enterprise architecture has the appropriate scope of visibility and influence on the technical side, they’re not the best group for handling organizational decisions. From the organizational side, there may be an IT leadership group or committee that could handle it, but what about the technical aspects? This is where a cross-functional group may make a lot of sense and could be quite long lived. I know I’ve always thought of SOA as at least a 5 year effort, and looking back now from 5 years after I first said that in an organization, 5 years was clearly too optimistic.

Even given this long-term commitment, I still think the people running the SOA Center should always plan on having a time-limited lifecycle. SOA should become part of the normal way IT operates, not something that requires a special group to manage. While a cross-functional group will be required at the beginning, at some point, individual managers and technical leaders must take responsibility for their parts in contributing to the overall goals of SOA. It may not occur until sufficient organizational change (restructuring, not necessarily people) has taken place, but the goal of the program must be to make the behaviors associated with SOA normal practice, rather than something that must be explicitly enforced because it’s still outside of the norm.

Lobbyists and Governance

I’ve had this topic on my list for some time now. I’ve used analogies to municipal/local/state/federal governance in past posts, and in a conversation someone made a comment that they thought I was going to continue the analogy on to include lobbyists. I made a mental note, because I knew there were definitely some parallels that could make for good blog fodder.

So, in a typical government, what do lobbyists do? In a nutshell, they do whatever they can to influence the policy makers to establish policies that benefit the lobbyists or whoever they represent. In general, I think most individual voters probably have a negative view of lobbyists, except those whose beliefs happen to align with their own. So, are they a good thing or a bad thing?

Let’s come back to the whole purpose of governance. My definition of governance is that it is the combination of people, policies, and processes that an entity utilizes to achieve a desired behavior. People set policies, and processes ensure they are followed. As a reminder, enforcement processes are only one subset of processes that can be used. An organization could just as easily focus on education processes rather than enforcement and achieve the desired behavior. I stated earlier that lobbyists try to influence the policy makers (people) to establish policies in the interest of the lobbyists. Where this becomes a problem is when the people involved in governance lose sight of the objective of governance. Lobbyists are frequently associated with or simply referred to as “special interests.” By that term alone, there’s an obvious risk. Policies should be set to achieve the desired behavior of the organization, not the desired behavior of any special interest.

This is actually a frequent problem in the typical corporate enterprise. The first potential scenario is when the desired behavior of the enterprise isn’t well defined. Therefore, the policy makers won’t base their policies on enterprise behavior, but rather on the desired behavior of the people in the organization who have their ear (the lobbyists). This can go down a really bad path, because it’s likely to lead to infighting within the governance structure, and most likely ineffective governance.

The second scenario is when the desired behavior of the organization is well known to the policy makers, but not to the rest of the organization. Once again, the rest of the organization will operate like a bunch of lobbyists, trying to sway policy in their direction so they can do what they think is best. The governance team will likely be perceived as being in an ivory tower and out of touch. The real problem in this scenario is that the constituents in the enterprise don’t know what the desired behavior is, and as a result, they’re guessing. Some will be right, many will be wrong, and all will be unhappy.

A third scenario, which can’t be forgotten, is the role of vendors and other third parties. Once again, their vested interest is not in your desired behavior, but theirs. Buy our products, buy our services. You need to be in control of the desired behavior and choose vendors and services that are in alignment, rather than letting them try to change your policies to something more amenable to them.

The whole point of this is that the presence of lobbyists in the entity being governed has the potential for problems. If you see a lot of lobbying in your organization, the first place to go back to is your desired behavior. If that behavior is well understood by the organization, your need for active enforcement should be far less because people understand and want to do the right thing. If the desired behavior isn’t known by the governors or the constituents, you’ve opened the doors to outside influence and controversy. This doesn’t imply that a governor shouldn’t have advisors, but the first question that should always be asked is, “Is this action consistent with the desired behavior we want?”

Some recent podcasts

I wanted to call attention to four good podcasts that I listened to recently. The first is from IT Conversations and the Interviews with Innovators series hosted by Jon Udell. In this one, he speaks with Raymond Yee of UC Berkeley, discussing mashups. I especially liked the discussion about public events, and getting feeds from the local YMCA. I always wind up putting all my kids’ games into iCal from their various sports teams, so it would be great if I could simply subscribe from somewhere on the internet. Jon himself called out the emphasis on this in the podcast in his own blog.

The next two are both from Dana Gardner’s Briefings Direct series. The first was a panel discussion from his aptly-renamed Analyst’s Insight series (it used to be SOA Insights when I was able to participate, but even then, the topics were starting to go beyond SOA) that discussed the recent posts regarding SOA and WOA. It was an interesting listen, but I have to admit, for the first half of the conversation, I was reminded of my last post. Throughout the discussion, they kept implying that SOA was equivalent to adopting SOAP and WS-*, and then using that angle to compare it to “WOA,” which they implied was the least common denominator of HTTP, along with either POX or REST. Many people have picked up on one comment, which I believe was from Phil Wainewright, who said, “WOA is SOA that works.” Once again, I don’t think this was a fair characterization. First off, if we look at a company that is leveraging a SaaS provider like Salesforce.com, Salesforce.com is, at best, a service provider within their SOA. If the company is simply using the web-based front end, then Salesforce.com isn’t even a service provider in their SOA, it’s an application provider. Now, you can certainly argue that Amazon and Google are service providers, and that there are some decent examples of small companies successfully leveraging their services, but we’re still a far cry from having an enterprise SOA that works, whichever technology you look at. So, I was a bit disappointed in this part of the discussion. The second half of the discussion got into the whole Microhoo arena, which wound up being much more interesting, in my opinion.

The second one from Dana was a sponsored podcast from HP, with Dana discussing their ISSM (Information Security Service Management) approach with Tari Schreider. The really interesting thing in this one was to hear about his concept of the 5 P’s, which was very familiar to me, because the first three were People, Policies, and Process (read this and this). The remaining two P’s were Products and Proof. I’ve stated that products are used to support the process, if needed, typically making it more efficient. Proof was a good addition, which is basically saying that you need a feedback loop to make sure everything is doing what you intended it to. I’ll have to keep this in mind in my future discussions.

The last one is again from IT Conversations, this time from the O’Reilly Open Source Conference Series. It is a “conversation” between Eben Moglen and Tim O’Reilly. If nothing else, it was entertaining, but I have to admit, I was left thinking, “What a jerk.” Now clearly, Eben is a very smart individual, but just as he said that Richard Stallman would have come across as too ideological, he did the exact same thing. When asked to give specific recommendations on what to do, Eben didn’t provide any decent answer. Instead he said, “Here’s your answer: you’ve got another 10 years to figure it out.”

Piloting within IT

Something I’ve seen at multiple organizations is problems with the initial implementation of new technology. In the perfect world, every new technology would be implemented using a carefully controlled pilot that exercised the technology appropriately, allowed repeatable processes to be identified and implemented, and added business value. Unfortunately, it’s that last item that always seems to do us in. Any project that has business value tends to operate under the same approach that any project for the business does, which usually means schedule first, everything else second. As a result, sacrifices are made, and the project doesn’t have the appropriate buffers to account for the lack of experience the organization has. Even if professional services are leveraged, there’s still a knowledge gap in relating the product capabilities to the business need.

One suggestion I’ve made is to look inside of IT for potential pilots. This can be a chicken-and-egg situation, because sometimes funding cannot be obtained unless the purchase is tied to a business initiative. IT is part of the business, however, and some funding should be reserved for operating efficiency improvements within IT, just as should be done for other non-revenue producing areas, such as HR.

BPM technology is probably the best example to discuss this. In order to fully leverage BPM technology, you have to have a deep understanding of the business process. If you don’t understand the processes, there’s no tool that you can buy that will give you that knowledge. There are packaged and SaaS solutions available that will give you their process, but odds are that your own processes are different. Who is the keeper of knowledge about business processes? While IT may have some knowledge, odds are this knowledge resides within the business itself, creating the challenge of working across departments when trying to apply the new technology. These communication gaps can pose large risks to a BPM adoption effort.

Wouldn’t it make more sense to apply BPM technology to processes that IT is familiar with? I’m sure nearly every large organization purchases servers and installs them in its data center. I’m also quite positive that many organizations complain about how long this process takes. Why not do some process modeling, orchestration, and execution using BPM technologies in our own backyard? The communication barriers are far less, the risk is less, and value can still be demonstrated through the improved operational efficiencies.

My advice if you are piloting new technology? Look for an opportunity within IT first, if at all possible. Make your mistakes on that effort, fine-tune your processes, and then take it to the business with confidence that the effort will go smoothly.

Dealing with committees

If you work in a typical large IT enterprise, it’s very likely that there are one or more committees that frequently receive presentations from various people in the organization. These can be some of the most painful meetings for an organization, or they can be some of the most productive. Here are some of my thoughts on how to keep them productive.

First, if you are a member of one of these committees, you need to understand your purpose. If you are part of the approval pipeline, then it should be clear. Your job is to approve or deny, period. If you can’t make that decision, then your job is to tell the presenter what information they need to come back with so you can either approve or deny. Unfortunately, many committee members often forget this as they get caught up in the power that they wield. Rather than focusing on their job, they instead focus on pointing out all the things that the presenter did or did not do, regardless of whether those things have any impact on the decision.

Second, the committee should make things as clear as possible for the incoming presenters. I’ve had to endure my fair share of architecture and design reviews where my only guidance was, “You need to have an architecture/design review.” At that point, the presenter is left playing a guessing game on what needs to be presented, and the guess is likely to be wrong. Nobody likes being stuck in meetings all day long, so give people the information they need to ensure that your time in that weekly approval meeting is well spent.

From the perspective of the presenter, you need to know what you want from the committee, even if it should be obvious. As I’ve stated before, the committee members may have easily lost sight of what their job is, so as the presenter, it’s your job to remind them. Tell them up front that you’re looking for approval, looking for resources, looking for whatever. Then, at the end of your presentation, explicitly ask them for it. Make sure you leave enough time to do so. It’s your job to watch the clock and make sure you are able to get your question answered, even if it means cutting off questions. Obviously, you should recognize that there is some debate, and ask appropriately. In the typical approval scenario, you should walk out of the meeting with one of three possibilities:

- You receive approval and can proceed (make sure you have all the information you need to take the next step, such as the names of people that will be involved)

- You are denied.

- The decision is deferred. In this scenario, you must walk out of the meeting knowing exactly what information you need to bring back to the committee to get a decision at your next appearance. Otherwise, you’re at risk of creating an endless circle of meeting appearances with no progress.

I hope you find these tidbits useful. They may seem obvious, but personally, I find them useful to revisit when I’m in either situation (reviewer or presenter).

Service Lifecycle Management

I attended a presentation from Raul Camacho from the SOA Solutions group of Microsoft Consulting Services yesterday. The talk provided some good details on some of the technical challenges associated with lifecycle events for services, such as the inevitable changes to the interface, adding new consumers, etc., but I actually thought it was pretty weak on the topic. I thought for sure that I had a blog post on the subject, but I was surprised to find that I didn’t. Some time ago (January of 2007, to be specific) I indicated that I would have a dedicated post, but the only thing I found was my post on product-centered development versus project-centered development. While that post has many of the same elements, I thought I’d put together a more focused post.

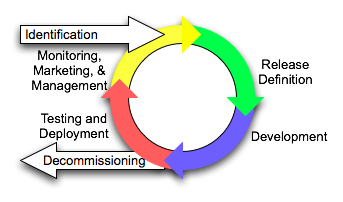

The first point, and a very important point, is that the service lifecycle is not a project lifecycle or just the SDLC for a service. It is a continuous process that begins when a service is identified and ends when the last version of a service is decommissioned from production. In between, you’ll have many SDLC efforts associated with each version of the service. I presented this in my previous post like this.

Raul’s talk gave a very similar view, again presenting the lifecycle as a circle with an on-ramp of service identification. The activities he mentioned in his talk were:

- Service Identification

- Service Development

- Service Provisioning

- Service Consumption

- Service Management

There are many similarities between these two. We both had the same on-ramp. I broke out the SDLC into a bit more detail with steps of release definition, development, testing, and deployment, while Raul bundled these into two steps of development and provisioning. We both had management steps, although I labeled mine as the triple-M of monitoring, marketing, and management. The one key difference was Raul’s inclusion of a step of service consumption. I didn’t include this in my lifecycle, since, in my opinion, service consumption is associated with the lifecycle of the consumer, not with the lifecycle of the service. That being said, service consumption cannot be ignored, and is often the event that will trigger a new release of the service, so I can understand why one may want to include it in the picture.
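For readers who think better in code, here is a minimal Python sketch of my version of the lifecycle as a small state machine. It is only an illustration: the names `Stage`, `TRANSITIONS`, and `ServiceLifecycle` are hypothetical, the strict ordering of the SDLC steps is a simplifying assumption, and none of this comes from Raul’s talk or from any product.

```python
from enum import Enum


class Stage(Enum):
    IDENTIFICATION = "service identification"  # on-ramp, entered once
    RELEASE_DEFINITION = "release definition"
    DEVELOPMENT = "development"
    TESTING = "testing"
    DEPLOYMENT = "deployment"
    MANAGEMENT = "monitoring, marketing, and management"
    DECOMMISSIONED = "decommissioned"  # last version retired, terminal


# Allowed moves between stages (an assumed, simplified ordering).
# The edge from MANAGEMENT back to RELEASE_DEFINITION is what makes this
# a continuous cycle rather than a one-time project plan.
TRANSITIONS = {
    Stage.IDENTIFICATION: {Stage.RELEASE_DEFINITION},
    Stage.RELEASE_DEFINITION: {Stage.DEVELOPMENT},
    Stage.DEVELOPMENT: {Stage.TESTING},
    Stage.TESTING: {Stage.DEPLOYMENT},
    Stage.DEPLOYMENT: {Stage.MANAGEMENT},
    Stage.MANAGEMENT: {Stage.RELEASE_DEFINITION, Stage.DECOMMISSIONED},
    Stage.DECOMMISSIONED: set(),
}


class ServiceLifecycle:
    """Tracks one service from identification to decommissioning."""

    def __init__(self, name):
        self.name = name
        self.stage = Stage.IDENTIFICATION
        self.releases = 0  # number of versions deployed so far

    def advance(self, target):
        """Move to the target stage, rejecting moves the cycle does not allow."""
        if target not in TRANSITIONS[self.stage]:
            raise ValueError(
                f"{self.stage.name} -> {target.name} is not allowed"
            )
        if target is Stage.DEPLOYMENT:
            self.releases += 1
        self.stage = target
```

Note that consumption does not appear as a stage. In this sketch, a new consumer would simply be one of the events that prompts a move from `MANAGEMENT` back to `RELEASE_DEFINITION`.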

The thing that Raul did not mention in his talk, which I feel is an important aspect, is the role of the Service Manager. We don’t need Service Lifecycle Management if there isn’t a Service Manager to manage it. Things fall apart when there aren’t clear lines of responsibility for all things about a service. For example, a frequent occurrence is where the first version of a service is built by a team that is responsible for both the initial consumer and the service. If service ownership and management fall to this team, they are at best a secondary interest, because the team’s real focus was on the delivery of the service consumer, not of the service itself. A second approach that many organizations may take is to create a centralized service team. While this ensures that the ownership question will get resolved, it has scalability problems because centralized teams are usually created based upon technical expertise. Service ownership and management have more to do with the business capability being provided by the service than with the underlying technologies used to implement it. So, once again, there are risks that a centralized group will lack the business domain knowledge required for effective management. My recommendation is to align service management along functional domains. A service manager will likely manage multiple services, simply because there will be services that change frequently and some that change infrequently, so having one manager per service won’t make for an even distribution of work. The one thing to avoid, however, is to have the same person managing both the service and the consumer of a service. Even where there is very high confidence that a service will only have one consumer for the foreseeable future, I think it’s preferable to set the standard of separation of consumer from provider.

So, beyond managing the releases of each service, what does a service manager do? Well, again, it comes back to the three M’s: Monitor, Market, and Manage. Monitoring is about keeping an eye on how the service is behaving and being used by consumers. It has to be a daily practice, not one that only happens when a red light goes off indicating a problem. Marketing is about seeking out new consumers of the service. Whether it’s for internal or external use, marketing is a critical factor in achieving reuse of the service. If no one knows about it, it’s unlikely to be reused. Finally, the manage component is all about the consumer-provider relationship. It will involve bringing on new consumers found through marketing and communication which may result in a new version, provisioning of additional capacity, or minimally the configuration of the infrastructure to enforce the policies of the service contract. It will also involve discussions with existing consumers based on the trends observed through the monitoring activity. What’s bad is when there is no management. I always like to ask people to think about using an external service provider. Even in cases where the service is a commodity with very predictable performance, such as getting electricity into your house, there still needs to be some communication to keep the relationship healthy.

I hope this gives you an idea of my take on service lifecycle management. If there’s more that you’d like to hear, don’t hesitate to leave me a comment or send me an email.