Archive for the ‘Governance’ Category

Horizontal and Vertical Thinking

I’ve been meaning to post on this subject for some time, so it’s good that I got to the airport a little earlier than normal today. There’s nothing like blogging at 5:30 in the morning.

As I mentioned in my last entry, on the drive to the airport I just finished listening to a podcast from IT Conversations: a discussion on user experience with Irene Au, Director of User Experience for Google. One of the questions she took from the audience dealt with whether User Experience should be handled by a centralized group or as a decentralized activity. This question frequently comes up in SOA discussions as well. Should you have centralized service development, or should your efforts be decentralized? There’s no right or wrong answer to this question, but it’s certainly true that your choices can impact your success.

In the podcast, Irene discussed the matrixed approach at Yahoo, and how everything wound up being funded by business units. This made it difficult to do activities for the greater good, such as style guides. Each business unit wanted to maximize its investment and have those resources focused on its own activities, not someone else’s. Putting this same topic in the context of SOA, this would be the same as having user-facing application teams developing services. The challenge is that the business unit wants that user-facing application, and they want it yesterday. How do we create services that aren’t of value solely to that application?

At the opposite extreme, things can be centralized. Irene discussed the culture of open office hours at Google, and how she’ll have a line of people outside her office with their user experience questions in hand. While this may allow her to maintain a greater level of consistency, resource management can be a big challenge, as you are being pulled in multiple directions. Again, putting this in the SOA context, the risk is that in the quest for the perfect enterprise service, you can put individual project schedules at risk as they wait for the service they depend on.

These are both extreme positions, and seldom is an organization at one extreme or the other; most are somewhere in the middle.

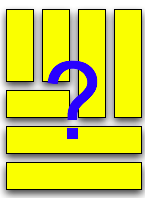

In trying to tackle this problem, it’s useful to think of things as either horizontal or vertical. Horizontal concepts are ones where breadth is the more important concern. For example, infrastructure is most frequently presented as a horizontal concern. I can take a four CPU server and run just about anything I’d like on it these days, meaning it provides broad coverage across a variety of functional domains. A term frequently used these days is commodity hardware, and the notion of commoditization is a characteristic of horizontal domains. When breadth becomes more important than depth, there are usually not many opportunities for innovation. Largely, activities become more focused on reducing cost by leveraging economies of scale. Commoditization and standardization go hand in hand, as it’s difficult to classify something as a commodity if it doesn’t meet some standard criteria. In the business world, these horizontal areas are also ones that are frequently outsourced, as all companies typically do them the same way, meaning there is little room for competitive differentiation.

Vertical concepts are ones where depth is the more important concern. In contrast to the commoditization associated with horizontal concerns, vertical items are ones where innovation can occur and where companies can set themselves apart from their competitors. User experience is still an area where significant differentiation can occur, so most user-facing applications fall into this category. Business knowledge and customer experience (preferably with customers involved in the process at a partnership level) are key at this level.

By nature, vertical and horizontal concerns are orthogonal and can create tension. I have a friend who works as a user experience consultant, and he once asked me how to balance the concerns that come from a user experience focus, clearly rooted in the vertical domains, with the concerns of SOA, which are arguably more horizontal. There’s no easy answer to this. Just as the business must make decisions over time on where to commoditize and where to specialize, the same holds true for IT. Apple is a great example to look at, as their decision not to commoditize in their early days clearly resulted in them being relegated to niche (but still relevant) status in computer sales. That same principle of remaining vertically focused with tight top-to-bottom controls, however, has now resulted in their successes with their computers, iTunes, Apple TV, the iPod, and the forthcoming iPhone. There are a number of ways to be successful, although far fewer ways than there are to be unsuccessful.

When trying to slice up your functional domains into domains of services, you must certainly align it with the business goals. If there is an area of the business where they are trying to create competitive differentiation, this is probably not the best area to look for services that will have broad enterprise reuse, although it is very dependent on whether technology plays a direct role in that differentiation or an indirect role, such as whether the business to consumer interaction is solely through a website, or if it is through a company representative (e.g. a financial advisor). These areas that are closest to the end user are likely to require some degree of verticality to allow for tighter controls and differentiation. That’s not to say they own the entire solution, top to bottom, however, which would be a monolith.

As we go deeper into the stack, you should look for areas where commoditization and standardization outweigh the benefits of customization. It may begin at a domain level, such as integration across a suite of applications for a single business unit, with each successive level increasing the breadth of coverage. There is no point where the vertical solutions stop, and everything beneath it has enterprise breadth. Rather, it is a continuum of decreasing emphasis on depth and increasing emphasis on breadth. An Internet company may try to differentiate itself in the end-user facing systems that its users interact with, allowing a large degree of autonomy for each product line. The supporting services behind those user interfaces will increase in the breadth of their scope, but still may require some degree of specialization, such as having to deal with a particular region of a country or even the world for global organizations. We then bleed into the area of “applistructure” and solutions that fall more into the support arena. A CRM system will have close ties to the end-user facing sales portal. The breadth of CRM coverage may need to span multiple lines of business, unlike the sales portal, where specialization could occur. Going deeper, we have applications that may have no ties to the end-user facing systems, but are still necessary to run a business, such as Human Resources. Interestingly, if you’re following my logic you may be thinking that these things should all be outsourced, but the truth is that many of these areas are still far from being commoditized. That’s because they involve user-facing components, which brings us back to those vertical domains where customization can add value. An organization that outsources the technology side of HR, but doesn’t have an associated reduction in HR staff, may have a conflict when it wants specialized HR processes but is dealing with commodity interfaces and systems. Put simply, you can’t have it both ways.

The trend continues on down the stack to the infrastructure and the world of the individual developer. If you’re truly wanting to adopt SOA from top to bottom, there should be a high degree of commoditization and standardization at this level. Organizations where solutions are still built top-to-bottom, with customized hardware platforms, source code management, programming languages, etc. are going to struggle with SOA, because their culture is vertically-oriented to an extreme.

Whatever the speed of change, business decisions on which things are core competencies and which are not do not change overnight. Taking an organization where each product group had its own staff (vertically-oriented) and switching it to a centralized sales organization (horizontally-oriented) is a significant cultural change, and often doesn’t go smoothly. You only need to look at the number of mergers and acquisitions that have been deemed successful (less than 50%) to understand the difficulty. Switching from vertically-focused IT solutions to horizontally-focused IT solutions is just as difficult, if not more difficult. Humans are significantly more adaptable than technology solutions, which, at the core, are binary, yes/no environments. The important thing is to recognize when misalignment is occurring and take action to fix it. That’s all about governance. If users are trying to apply significant customization to a technology area that has been deemed a commodity by the business, pushback needs to occur to emphasize that the greater good takes precedence over individual needs. If IT is taking far too long to deliver a solution in an area where time to market and competitive differentiation are critical, remove the barriers and give that group more control over the solution, at the expense of broader applicability. If you don’t know what your priorities and principles are for each domain, however, you’ll end up in an endless sequence of meetings that are rooted in opinions, rather than in documented principles and behaviors desired by the organization.

Challenge of Centralized Service Teams

Vilas recently posted on centralized service delivery teams (SDTs), and invited others to share their experiences. I haven’t posted on this subject in some time, and as I thought about it, I realized that my opinions have evolved.

Why would an organization consider a centralized team? Frankly, I think it comes down to governance. It’s far easier to achieve consistency within a single group than it is across many groups. But what are the downsides to this approach? Probably the biggest one, and the one of most concern when adopting SOA, is that of business domain knowledge. While it’s probably relatively easy to pick and choose from among the development staff to get a set of people with broad technical knowledge, it will probably be more difficult to find people with broad business knowledge. What will be more important to your SOA efforts? Technical consistency (i.e. all services are Web Services) or business consistency? The answer will probably vary based on whether you see SOA primarily as a technology integration solution, or as a business agility solution.

Personally, if I had to pick one over the other, I’m more interested in the business agility aspects. I hope I don’t have to pick one, however, because the technology integration aspects are not something to be dismissed. What does that mean? It means I’d take the key experts on the business domain for a service rather than having the technical experts. Given this preference, it certainly means that a staff augmentation model for service development may make the most sense, rather than an outsourcing model. In this approach, we’d have a pool of technical experts that would be allocated to service development efforts, with the work managed by those projects, rather than by a manager of the pool. In this way, service ownership can be established from the beginning, and that owner will be the person who understands the business domain and the needs of the consumers of that domain. This is very important, as we need to shift the organization from being concerned about development lifecycles (end with the project) to service lifecycles (end when service is decommissioned). An outsourcing model of development is focused on the development lifecycle only, and can easily result in services going into limbo after the initial development effort.

Now there is one caveat to this. Organizations that may be considering a centralized service development team may not have any understanding at all of service ownership. It’s likely that the project that needs the service is building the service consumer. Assigning a resource to this project to perform service development does not do what I’ve described, because the project is not the service owner. The project manager is not the service manager. Who is it, then? Well, that has to be determined, and if the organization doesn’t have any teams that are prepared to act in this capacity, they’ll need to create one. It’s important to define this team according to business domains, not technology domains, presuming we’re talking about business services and not technology services (like Security Services). This is where the needs may challenge the current organizational structure. New teams will likely need to be formed. Are these “centralized” teams? These teams may wind up living in the organization for a long time, unlike a Competency Center or Center of Excellence type approach. If anything, the Center of Excellence may be the group that makes these organizational and ownership decisions, thus beginning the organizational transformation that will occur. The COE may not be the group building the services, but it may be the group with the appropriate breadth of experience and knowledge to make the necessary decisions to set the organization in the right direction for the future. If there are five teams that could all conceivably be the service owner, which one do you pick?

As these decisions are made, the center of excellence will disappear, but the new service development organization will now be in place, where there are groups dedicated to service creation as well as groups dedicated to the creation of user-facing systems. How many organizations that have “adopted SOA” have reached this point of maturity in their efforts?

Automate what part of SOA Governance

I must be in a bad mood this week (which is unusual for me, I consider myself an optimist), as this will be my second post that could be considered a rant. I just read David Kelly’s article on eBizQ, “Improving Processes With Automated SOA Governance.” For whatever reason, that title alone struck a nerve. Clearly, the whole topic of automation points to tooling, which points to vendors. Once again, this is putting the cart before the horse.

The primary area for automation in SOA Governance is policy enforcement. Governance isn’t just about enforcement, however. Governance is about people, policies, and process. People (the legislators) set policy. Policy is enforced through process. That may be a gross oversimplification, but it’s the way it needs to work. Often times, enforcement fails because the people, the policies, or both are not recognized. Even if you automate enforcement, if the policies and the authority of the people setting the policies aren’t recognized, your governance efforts are less likely to be successful.

Let’s use the common metaphor of city government. Suppose the new mayor and the city council want to cut down on the number of serious accidents involving cars and pedestrians that have been occurring. Clearly, we have people involved: the mayor and the city council. The city council proposes a new policy, and in this case, it’s lowering the speed limit on side streets from 30 mph to 20 mph. The mayor approves it and it becomes law. If the town has a problem with speeders, and they don’t recognize the authority of the city council to establish speed limits, they’re probably not going to slow down. Enforcement takes three forms: posted signs, police patrols, and a few of those “How fast you’re going” machines that have an embedded radar gun and a camera. Posted signs are the most passive form of enforcement, and the least likely to make a difference. Police patrols are the most active form of enforcement, but come at a significant cost. The radar gun/camera system may be less costly, but is not as active an enforcement mechanism. A ticket may show up in the mail. Clearly, the best way to enforce it would be to wire a radio transmitter into the street signs that communicates with cars and doesn’t allow them to go over the speed limit in the first place, but that’s a bit too intrusive and could have complications. None of the three enforcement solutions addresses the problem of someone who does not recognize the authority of the people and the validity of the policies. This is a cultural issue. Tools can make non-compliance more painful, but they can’t change the culture. If you’re having problems with your governance, I’d look at your people and policies first. If you’ve got recognition of the authorities and the policies, now you can look into minimizing the cost of your enforcement through tooling. It is certainly likely that automated tools will be less costly in the long run than having to schedule two-hour meetings with your key legislators for every service review.
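As a rough illustration of the part that can be automated, here is a minimal Python sketch of policy enforcement as a check run against service metadata. Everything in it is hypothetical: the `Policy` and `review` names, the two example policies, and the metadata fields are assumptions made for the example, not the interface of any governance product. Notice what the code cannot do: the `issued_by` field records who set the policy, but nothing here makes anyone recognize that authority.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Policy:
    """One rule set by the 'legislators' (hypothetical structure)."""
    name: str
    issued_by: str                    # who set the policy; not enforceable by code
    check: Callable[[Dict], bool]     # returns True when the service complies


def review(service: Dict, policies: List[Policy]) -> List[str]:
    """Return the names of the policies that the service metadata violates."""
    return [p.name for p in policies if not p.check(service)]


# Two made-up policies, purely for illustration.
POLICIES = [
    Policy("has-owner", "architecture board",
           lambda s: bool(s.get("owner"))),
    Policy("uses-https", "security team",
           lambda s: s.get("endpoint", "").startswith("https://")),
]

if __name__ == "__main__":
    candidate = {
        "name": "CustomerLookup",
        "endpoint": "http://example.invalid/customer",
        "owner": "",
    }
    for violation in review(candidate, POLICIES):
        print("violates:", violation)
```

A check like this could replace the two-hour review meeting for the mechanical rules, which is exactly the cost saving described above. Deciding which rules belong in the list, and getting teams to accept them, is still the work of people.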

SOA and GCM (Governance and Compliance)

I just listened to the latest Briefings Direct: SOA Insights podcast from Dana Gardner and friends. In this edition, the bulk of the time was spent discussing the relationship between SOA Governance and tools in the Governance and Compliance market (GCM).

I found this discussion very interesting, even if they didn’t make too many connections to the products classifying themselves as “SOA Governance” solutions. That’s not surprising though, because there’s no doubt that the marketers jumped all over the term governance in an effort to increase sales. Truth be told, there is a long, long way to go in connecting the two sets of technologies.

I’m not all that familiar with the GCM space, but the discussion did help to educate me. The GCM space is focused on corporate governance, clearly targeting the Sarbanes-Oxley space. There is no doubt that many, many dollars are spent within organizations in staying compliant with local, state, and federal (or your area’s equivalent) regulations. Executives are required to sign off that appropriate controls are in place. I’ve had experience in the financial services industry, and there’s no shortage of regulations that deal with handling investors’ assets, and no shortage of lawsuits when someone feels that their investment intent has not been followed. Corporate governance doesn’t end there, however. In addition to the external regulations, there are also the internal principles of the organization that govern how the company utilizes its resources. Controls must be put in place to provide documented assurances that resources are being used in the way they were intended. This frequently takes the form of someone reviewing some report or request for approval and signing their name on the dotted line. For these scenarios, there’s a natural relationship with analytics, business intelligence, and data warehouse products, and the GCM space appears to have ties to this area.

So where does SOA governance fit into this space? Clearly, the tools that are claiming to be players in the governance space don’t have strong ties to corporate governance. While automated checking of a WSDL file for WS-I adherence is a good thing, I don’t think it’s something that will need to show up in a SOX report anytime soon. Don’t get me wrong, I’m a fan of what these tools can offer but be cautious in thinking that the governance they claim has strong ties to your corporate governance. Even if we look at the financial aspect of projects, the tools still have a long way to go. Where do most organizations get the financial information? Probably from their project management and time accounting system. Is there integration between these tools, your source code management system, and your registry/repository? I know that BEA AquaLogic Enterprise Repository (Flashline) had the ability to track asset development costs and asset integration costs to provide an ROI for individual assets, but where do these cost numbers come from? Are they manually entered, or are they pulled directly from the systems of record?

Ultimately, the relationship between SOA Governance and Corporate Governance will come down to data. In a couple recent posts, I discussed the challenges that organizations may face with the metadata associated with SOA, as well as the management continuum. This is where these two worlds come together. I mentioned earlier that a lot of corporate governance is associated with the right people reviewing and signing off on reports. A challenge with systems of the past is their monolithic nature. Are we able to collect the right data from these systems to properly maintain appropriate controls? Clearly, SOA should break down these monoliths and increase the visibility into the technology component of the business processes. The management architecture must allow metrics and other metadata to be collected, analyzed, and reported to allow the controllers to make better decisions.

One final comment that I didn’t want to get lost. Neil Macehiter brought up Identity Management a couple times in the discussion, and I want to do my part to ensure it isn’t forgotten. I’ve mentioned “signoff” a couple times in this entry. Obviously, signoff requires identity. Where compliance checks are supported by a service-enabled GCM product, having identity on those service calls is critical. One of the things the controller needs to see is who did what. If I’m relying on metadata from my IT infrastructure to provide this information, I need to ensure that the appropriate identity stays with those activities. While there’s no shortage of rants against WS-*, we clearly will need a transport-independent way of sharing identity as it flows through the various technology components of tomorrow’s solutions.

The vendor carousel continues to spin…

It’s not an acquisition this time, but a rebranding/reselling agreement between BEA and AmberPoint. I was head down in my work and hadn’t seen this announcement until Google Alerts kindly informed me of a new link from James Urquhart, a blogger on Service Level Automation whose writings I follow. He asked what I think of this announcement, so I thought I’d oblige.

I’ve never been an industry analyst in the formal sense, so I don’t get invited to briefings, receive press releases, or whatever the other normal mechanisms (if there are any) that analysts use. I am best thought of as an industry observer, offering my own opinions based on my experience. I have some experience with both BEA and AmberPoint, so I do have some opinions on this. 🙂

Clearly, BEA must have customers asking about SOA management solutions. BEA doesn’t have an enterprise management solution like HP or IBM. Even if we just consider BEA products themselves, I don’t know whether they have a unified management solution across all of their products. So, there’s definitely the potential for AmberPoint technology to provide benefits to the BEA platform, and customers must be asking about it. If this is the case, you may be wondering why BEA didn’t just acquire AmberPoint. First, AmberPoint has always had a strong relationship with Microsoft. I have no idea how much this results in sales for them, but clearly an outright acquisition by BEA could jeopardize that channel. Second, as I mentioned, BEA doesn’t have an enterprise management offering of which I’m aware. AmberPoint can be considered a niche management solution. It provides excellent service/SOA management, but it’s not going to allow you to also manage your physical servers and network infrastructure. So, an acquisition wouldn’t make sense on either side. BEA wouldn’t gain entry into that market, and AmberPoint would be at risk of losing customers as their message could get diluted by the rest of the BEA offerings.

As a case in point, you don’t see a lot of press about Oracle Web Services Manager these days. Oracle acquired this technology when they acquired Oblix (who acquired it when they acquired Confluent). I don’t consider Oracle a player in enterprise systems management, and as a result, I don’t think people think of Oracle when they’re thinking about Web Services Management. They’re probably more likely to think of the big boys (HP, IBM, CA) and the specialty players (AmberPoint, Progress Actional).

So, who’s getting the best out of this deal? Personally, I think this is a great win for AmberPoint. It extends a sales channel for them, and is consistent with the approach they’ve taken in the past. Reselling agreements can provide strength to these smaller companies, as they build on a perception of the smaller company as either being a market leader, having great technology, or both. On the BEA side, it does allow them to offer one-stop solutions directly in response to SOA-related RFPs, and I presume that BEA hopes it will result in more services work. BEA’s governance solution is certainly not going to work out of the box, since it consists of two rebranded products (AmberPoint and HP/Mercury/Systinet) and one recently acquired product (Flashline). All of that would need to be integrated with their core execution platform. It will help BEA with existing customers who don’t want to deal with another vendor but desire an SOA management solution, but BEA has to ensure that there are integration benefits rather than just having the BEA brand.

New Greg the Architect

Boy, YouTube’s blog posting feature takes a long time to show up. I tried it for the first time to create blog entries with embedded videos, but it still hasn’t shown up. Given that the majority of my readers have probably already seen it courtesy of links on ZDNet and InfoWorld, I’m caving and just posting direct links to YouTube.

The first video, released some time ago, can be viewed here. Watch this one first, if you’ve never seen it before.

The second video, just recently released and dealing with Greg’s ROI experience, can be found here.

Enjoy.

The Reuse Marketplace

Marcia Kaufman, a partner with Hurwitz & Associates, posted an article on IT-Director.com entitled “The Risks and Rewards of Reuse.” It’s a good article, and the three recommendations can really be summed up in one word: governance. While governance is certainly important, the article misses out on another important, perhaps more important, factor: marketing.

When discussing reuse, I always refer back to a presentation I heard at SD East way back in 1998. Unfortunately, I don’t recall the speaker, but he had established reuse programs at a variety of enterprises, some successful and some not successful. He indicated that the factor that influenced success the most was marketing. If the groups that had reusable components/services/whatever were able to do an effective job in marketing their goods and getting the word out, the reuse program as a whole would be more successful.

Focusing on governance alone still means those service owners are sitting back and waiting for customers to show up. While the architectural governance committee will hopefully catch a good number of potential customers and send them in the direction of the service owner, that committee should be striving to reach “rubber stamp” status, meaning the project teams should have already sought out potential services for reuse. This means that the service owners need to be marketing their services effectively so that they get found in the first place. I imagine the potential customer using Google-style searches on the service catalog, but then within the service catalog, you’d have a very Amazon-like feel that may say things like “30% of other customers found this service interesting…” Service owners would be monitoring this data to understand why consumers are or are not using their services. They’d be able to see why particular searches matched, what information the customer looked at, and know whether the customer eventually decided to use the service/resource or not.

Interestingly, this is exactly what companies like Flashline and ComponentSource were trying to do back in the 2000 timeframe, with Flashline having a product to establish your own internal “marketplace” while ComponentSource was much more of a hosted solution aimed at a community broader than the enterprise. With the potential to utilize hosted services always on the rise, this becomes even more interesting, because you may want your service catalog to show you both internally created solutions and potential hosted solutions. Think of it as amazon.com on the inside, with Amazon partner content integrated from the outside. I don’t know how easily one could go about doing this, however. While there are vendors looking at UDDI federation, what I’ve seen has been focused on internal federation within an enterprise. Have any of these vendors worked with, say, StrikeIron, so that hosted services show up in their repository (if the customer has configured it to allow them)? Again, it would be very similar to amazon.com. When you search for something on Amazon, you get some items that come from Amazon’s inventory. You also get links to Amazon partners that have the same products, or even products that are only available from partners.
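To make the marketplace idea a little more concrete, here is a minimal Python sketch of a catalog that mixes internal and hosted listings and records who looked at what. It is only an illustration under stated assumptions: the `Listing` and `Catalog` classes, the `"internal"`/`"hosted"` labels, the `allow_hosted` switch, and the naive keyword match are all invented for this example and don’t correspond to any vendor’s registry or to UDDI.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Listing:
    """One reusable asset in the catalog (hypothetical structure)."""
    name: str
    description: str
    source: str                                  # "internal" or "hosted"
    viewers: Set[str] = field(default_factory=set)
    adopters: Set[str] = field(default_factory=set)

    def adoption_rate(self) -> float:
        """Fraction of the teams that viewed this listing and then adopted it."""
        if not self.viewers:
            return 0.0
        return len(self.adopters & self.viewers) / len(self.viewers)


class Catalog:
    def __init__(self, listings: List[Listing], allow_hosted: bool = True):
        self.listings = listings
        self.allow_hosted = allow_hosted         # customer-configured switch

    def search(self, term: str, team: str) -> List[Listing]:
        """Naive keyword match; records the searching team as a viewer of each hit."""
        term = term.lower()
        hits = [
            item for item in self.listings
            if (self.allow_hosted or item.source == "internal")
            and (term in item.name.lower() or term in item.description.lower())
        ]
        for hit in hits:
            hit.viewers.add(team)
        return hits

    def adopt(self, name: str, team: str) -> None:
        """Record that a team decided to use the named listing."""
        for item in self.listings:
            if item.name == name:
                item.adopters.add(team)


if __name__ == "__main__":
    catalog = Catalog([
        Listing("AddressValidation", "Validates postal addresses", "internal"),
        Listing("GeoAddress", "Hosted address geocoding", "hosted"),
    ])
    catalog.search("address", team="billing")
    catalog.adopt("AddressValidation", team="billing")
    for item in catalog.listings:
        print(item.name, item.source, item.adoption_rate())
```

The viewer and adopter sets are the data a service owner would watch: a listing that gets many views and few adoptions is a marketing (or quality) problem, not a governance one.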

This is a great conceptual model, but I do need to be a realist regarding the potential of such a robust tool today. How many enterprises have a service library large enough to warrant establishing this rich a marketplace-like infrastructure? Fortunately, I do think this can work. Reuse is about much more than services. If all of your reuse is targeted at services, you’re taking a big risk with your overall performance. A reuse program should address not only service reuse, but also reuse of component libraries, whether internal corporate libraries or third-party libraries, and even shared infrastructure. If your program addresses all IT resources that have the potential for reuse, now the inventory may be large enough to warrant an investment in such a marketplace. Just make sure that it’s more than just a big catalog. It should provide benefit not only for the consumer, but for the provider as well.

Master Metadata/Policy Management

Courtesy of Dana Gardner’s blog, I found out that IONA has announced a registry/repository product, Artix Registry/Repository.

I’m curious if this is indicative of a broader trend. First, you had acquisitions of the two most prominent players in the registry/repository space: Systinet by Mercury, which was then acquired by HP, and Infravio by webMethods. For the record, Flashline was also acquired by BEA. You’ve had announcements of registry/repository solutions as part of a broader suite by IBM (WebSphere Registry/Repository), SOA Software (Registry), and now IONA. There are also Fujitsu/Software AG’s CentraSite and LogicLibrary’s Logidex, which are still primarily independent players. What I’m wondering is whether the registry/repository marketplace simply can’t make it as an independent purchase, but will always be a mandatory add-on to any SOA infrastructure stack.

All SOA infrastructure products have some form of internal repository. Whether we’re talking about a WSM system, an XML/Web Services gateway, or an ESB, they all maintain some internal configuration that governs what they do. You could even lump application servers and BPM engines into that mix if you so desire. Given the absence of domain-specific policy languages for service metadata, this isn’t surprising. So, given that every piece of infrastructure has its own internal store, how do you pick one to be the “metadata master” of your policy information? Would someone buy a standalone product solely for that purpose? Or are they going to pick a product that works with the majority of their infrastructure, and then focus on integration with the rest? For the smaller vendors, it will mean that they have to add interoperability/federation capabilities with the platform players, because that’s what customers will demand. The risk for the consumer, however, is that this won’t happen, which means that the consumer will be the one to bear the brunt of the integration costs.

I worry that the SOA policy/metadata management space will become no better than the Identity Management space. How many vendor products still maintain proprietary identity stores rather than allowing identity to be managed externally through the use of Active Directory/LDAP and some Identity Management and Provisioning solution? This results in expensive synchronization and replication problems that keep the IT staff from being focused on things that make a difference to the business. Federation and interoperability across these registry/repository platforms must be more than a checkbox on someone’s marketing sheet; they must be demonstrable, supported, and demanded by customers as part of their product selection process. The last thing we need is a scenario where Master Data Management technologies are required to manage the policies and metadata of services. Let’s get it right from the beginning.

SOA Politics

In the most recent Briefings Direct SOA Insights podcast (transcript here), the panel (Dana Gardner, Steve Garone, Joe McKendrick, Jim Kobielus, and special guest Miko Matsumura) discussed failures, governance, policies, and politics. There were a number of interesting tidbits in this discussion.

First, on the topic of failures, Miko Matsumura of webMethods called out that we may see some “catastrophic failures” in addition to some successes. Perhaps it’s because I don’t believe that there should be a distinct SOA project or program, but I don’t see this happening. I’m not suggesting that SOA efforts can’t fail; rather, if one fails, things will still be business as usual. If anything, that’s what the problem is. Unless you’ve already embraced SOA and don’t realize it, SOA adoption should be challenging the status quo. While I don’t have formal analysis to back it up, I believe that most IT organizations still primarily have application development efforts. This implies the creation of top-to-bottom vertical solutions whose primary concern is not playing well in the broader ecosystem, but rather, getting solutions to their stakeholders. That balance needs to shift to where the needs of the stakeholder of the application are equal to the needs of the enterprise. The pendulum needs to stay in the middle, balancing short term gains and long term benefits. Shifting completely to the long term view of services probably won’t be successful, either. I suppose that it is entirely possible that organizations may throw a lot of money at an SOA effort, treating it as a project, which is prone to spectacular failure. The problem began at the beginning, however, when it was made a project in the first place. A project will not create the culture change needed throughout the organization. The effort must influence all projects to be successful in the long term. The biggest risk in this type of approach, however, is that IT spends a whole bunch of money changing the way they work but they still wind up with the same systems they have today. They may not be seeing reuse of services or improved productivity. My suspicion is that the problem here lies with the IT-business relationship. IT can only go so far on its own. If the business is still defining projects based upon silo-based application thinking, you’re not going to leverage SOA to its fullest potential.

Steve Garone pointed out that two potential causes of these failures are “a difficulty in nailing down requirements for an application, the other is corporate backing for that particular effort going on.” When I apply this to SOA, it’s certainly true that corporate backing can be a problem, since that points to the IT-centric view I just mentioned. The requirements side is equally important. The requirements are the business strategy. These are what will guide you to the important areas for service creation. If your company is growing through mergers and acquisitions, what services are critical to maximize the benefits associated with that activity? Clearly, the more quickly and cost effectively you can integrate the core business functionality of the two organizations, the more benefit you’ll get out of the effort. If your focus is on cost reduction, are there redundant systems that are adding unnecessary costs to the bottom line? Put services in place to allow the migration to a single source for that functionality.

The latter half of the podcast talked about politics and governments, using world governments as a metaphor for the governance mechanisms that go on within an enterprise. This is not a new discussion for me, as this was one of my early blog entries that was reasonably popular. It is important to make your SOA governance processes fit within your corporate culture and governance processes, provided that the current governance process is effective. If the current processes are ineffective, then clearly a change is in order. If the culture is used to a more decentralized approach to governance and has been successful in meeting the company’s goals based upon that approach, then a decentralized approach may work just fine. Keep in mind, however, that the corporate governance processes may not ripple down to IT. It’s certainly possible to have decentralized corporate governance, but centralized IT governance. The key question to ask is whether the current processes are working or not. If they are, it’s simply a matter of adding the necessary policies for SOA governance into the existing approach. If the current processes are not working, it’s unlikely that adding SOA policies to the mix is going to fix anything. Only a change to the processes will fix it. Largely, this comes down to architectural governance. If you don’t have anyone with the responsibility for this, you may need to augment your governance groups to include architectural representation.

SOA Consortium

The SOA Consortium recently gave a webinar that presented their Top 5 Insights based upon a series of executive summaries they conducted. Richard Mark Soley, Executive Director of the SOA Consortium, and Brenda Michelson of Elemental Links were the presenters.

A little background. The SOA Consortium is a new SOA advocacy group. As Richard Soley put it during the webinar, they are not a standards body; however, they could be considered a source of requirements for the standards organizations. I’m certainly a big fan of SOA advocacy and sharing information, if that wasn’t already apparent. Interestingly, they are a time-boxed organization, and have set an end date of 2010. That’s a very interesting approach, especially for a group focused on advocacy. It makes sense, however, as the time box represents a commitment. Twelve practitioners have publicly stated their membership, along with the four founding sponsors and two analyst firms.

What makes this group interesting is that they are interested in promoting business-driven SOA, and dispelling the notion that SOA is just another IT thing. Richard had a great anecdote of one CIO that had just finished telling the CEO not to worry about SOA, that it was an IT thing and he would handle it, only to attend one of their executive summits and change course.

The Top 5 insights were:

- No artificial separation of SOA and BPM. The quote shown in the slides was, “SOA, BPM, Lean, Six Sigma are all basically one thing (business strategy & structure) that must work side by side.” They are right on the button on this one. The disconnect between BPM efforts and SOA efforts within organizations has always mystified me. I’ve always felt that the two go hand in hand. It makes no sense to separate them.

- Success requires business and IT collaboration. The slide deck presented a before and after view, with the after view showing a four-way, bi-directional relationship between business strategy, IT strategy, Enterprise Architecture, and Business Architecture. Two for two. Admittedly, as a big SOA (especially business-driven SOA) advocate, this is a bit of preaching to the choir, but it’s great to see a bunch of CIOs and CTOs getting together and publicly stating this for others to share.

- On the IT side, SOA must permeate the organization. They recommend the use of a Center of Excellence at startup, which over time shifts from a “doing” responsibility to a “mentoring” responsibility, eventually dissolving. Interestingly, that’s exactly what the consortium is trying to do. They’re starting out with a bunch of people who have had significant success with SOA, who are now trying to share their lessons learned and mentor others, knowing that they’ll disband in 2010. I think Centers of Excellence can be very powerful, especially in something that requires the kind of cultural change that SOA will. Which leads to the next point.

- There are substantial operational impacts, but little industry emphasis. As we’ve heard time and time again, SOA is something you do, not something you buy. There are some great quotes in the deck. I especially liked the one that discussed the horizontal nature of SOA operations, in contrast to the vertical nature (think monolithic application) of IT operations today. The things concerning these executives are not building services, but versioning, testing, change management, etc. I’ve blogged a number of times on the importance of these factors in SOA, and it was great to hear others say the same thing.

- SOA is game changing for application providers. We’ve certainly already seen this in action with activities by SAP, Oracle, and others. What was particularly interesting in the webinar was that while everyone had their own opinion on how the game will change, all agreed that it will change. Personally, I thought these comments were very consistent with a post I made regarding outsourcing a while back. My main point was that SOA, on its own, may not increase or decrease outsourcing, but it should allow more appropriate decisions and hopefully, a higher rate of success. I think this applies to both outsourcing, as well as to the use of packaged solutions installed within the firewall.

Overall, this was a very interesting and insightful webinar. You can register and listen to a replay of it here. I look forward to more things to come from this group.

Reference Architectures and Governance

In the March 5th issue of InfoWorld, I was quoted in Phil Windley’s article, “Teaming Up For SOA.” One of the quotes he used was the following:

Biske also makes a strong argument for reference architectures as part of the review process. “Architectural reviews tie the project back to the reference architecture, but if there’s no documentation that project can be judged against, the architectural review won’t have much impact.”

My thinking on this actually came from a past colleague. We were discussing governance, and he correctly pointed out that it is unrealistic to expect benefits from a review process when the groups presented have no idea what they are being reviewed against. The policies need to be stated in advance. Imagine if your town had no speed limit signs, yet the police enforced a speed limit. What would be your chances of getting a ticket? What if your town had a building code, but the only place it existed was in the building inspector’s head. Would you expect your building to pass inspections? What would be your feelings toward the police or the inspector after you received a ticket or were told to make significant structural changes?

If you’re going to have reviews that actually represent an approval to go forward, you need to have documented policies. At the architectural level, this is typically done through the use of reference architectures. The challenge is that there is no accepted norm for what a reference architecture should contain. Rather than get into a semantic debate over the differences between a reference architecture, a reference model, a conceptual architecture, and any number of other terms that are used, I prefer to focus on what is important- giving guidance to the people that need it.

I use the term solution architect to refer to the person on a project that is responsible for the architecture of the solution. This is the primary audience for your reference material. There are two primary things to consider with this audience:

- What policies need to be enforced?

- What deliverable(s) does the solution architect need to produce?

Governance does begin with policies, and I put that one first for a reason. I worked with an organization that was using the 4+1 view model from Philippe Kruchten for modeling architecture. The problem I had was that the projects using this model were not adequately able to convey the services that would be exposed/utilized by their solution. The policy that I wanted enforced at the architecture review was that candidate services had been clearly identified, and potential owners of those services had been assigned. If the solution architecture can’t convey this, it’s a problem with the solution architecture format, not the policy. If you know your policies, you should then create your sample artifacts to ensure that those policies can be easily enforced through a review of the deliverable(s). This is where the reference material comes into play, as well. In this scenario, the purpose of the reference material is to assist the solution architect in building the required deliverable(s). Many architects may create future state diagrams that may be accurate representations, but wind up being very difficult to use in a solution architecture context. The projects are the efforts that will get you to that future state, so if the reference material doesn’t make sense to them, it’s just going to get tossed aside. This doesn’t bode well for the EA group, as it will increase the likelihood that they are seen as an ivory tower.

When creating this material, keep in mind that there are multiple views of a system, and a solution architect is concerned with all of them. I mentioned the 4+1 view model. There’s 5 views right there, and one view it doesn’t include is a management view (operational management of the solution). That’s at least 6 distinct views. If your reference material consists of one master Visio diagram, you’re probably trying to convey too much with it, and as a result, you’re not conveying anything other than complexity to the people that need it. Create as many diagrams and views as necessary to ensure compliance. Governance is not about minimizing the number of artifacts involved, it’s about achieving the desired behavior. If achieving desired behavior requires a number of diagrams and models, so be it.

Finally, architectural governance is not just about enforcing policies on projects. In Phil’s article, some of my quotes also alluded to the project initiation process. EA teams have the challenge of trying to get the architectural goals achieved, but oftentimes without the ability to directly create and fund projects themselves. In order to achieve their goals, the current state and future state architectures must also be conveyed to the people responsible for IT Governance. This is an entirely different audience, one for whom the reference architectures created for the solution architects may be far too technical in nature. While it would minimize the work of the architecture team to have one set of reference material that would work for everyone, that simply won’t be the case. Again, the reference material needs to fit the audience. Just as a good service should fit the needs of its consumers, the reference material produced by EA must do the same.

ROI and SOA

Two ZDNet analysts, Dana Gardner and Joe McKendrick, have had recent posts (I’ve linked their names to the specific posts) regarding ROI and SOA. This isn’t something I’ve blogged on in the past, so I thought I’d offer a few thoughts.

First, let’s look at the whole reason for ROI in the first place. Simply put, it’s a measurement to justify investment. Investment is typically quantified in dollars. That’s great, now we need to associate dollars with activities. Your staff has salaries or bill rates, so this shouldn’t be difficult, either. Now is where things get complicated, however. Activities are associated with projects. SOA is not a project. An architecture is a set of constraints and principles that guide an approach to a particular problem, but it’s not the solution itself. Asking for ROI on SOA is similar to asking for ROI on Enterprise Architecture, and I haven’t seen much debate on that. That being said, many organizations still don’t have EA groups, so there are plenty of CIOs that may still question the need for it as a formal job classification. Getting back to the topic, we can and do estimate costs associated with a project. What is difficult, however, is determining the cost at a fine-grained level. Can you determine the cost of developing services in support of that project accurately? In my past experience, trying to use a single set of fine-grained activities for both project management and time accounting was very difficult. Invariably, the project staff spent time that was focused on interactions that were needed to determine what the next step was. These actions never map easily into a standard task-based project plan, and as a result, caused problems when trying to charge time. (Aside: For an understanding of this, read Keith Harrison-Broninski’s book Human Interactions or check out his eBizQ blog.) Therefore, it’s going to be very difficult to put a cost on just the services component of a project, unless it’s the entire scope of the project, which typically isn’t the case.

Looking at the benefits side of the equation, it is certainly possible to quantify some expected benefits of the project, but again, only to a certain level. If you’re strictly looking at IT, your only hope of coming up with ROI is to focus on cost reduction. IT is typically a cost center, with, at best, an indirect impact on revenue generation. How are costs reduced? This is primarily done by reducing maintenance costs. The most common approach is through a reduction in the number of vendor products involved and/or a reduction in the number of vendors involved. More stuff from fewer vendors typically means more bundling and greater discounts. There are other options, such as using open source products with no licensing fees, or at least discounted fees. You may be asking, “What about improved productivity?” This is an indirect benefit, at best. Why? Unless there is a reduction in headcount, the cost to the organization is fixed. If a company is paying a developer $75,000 / year, that developer gets that money regardless of how many projects get done and what technologies are used. Theoretically, however, if more projects are completed within a given time, you’d expect that there is a greater potential for revenue. That revenue is not based upon whether SOA was used or not, it’s based upon the relevance of that project to business efforts.

So now we’re back to the promise of IT – Business agility. For a given project, ROI should be about measuring the overall project cost (not specific actions within it) plus any ongoing costs (maintenance) against business benefits (revenue gain) and ongoing cost reduction. So where will we get the best ROI? We’ll get the best ROI by picking projects with the best business ROI. If you choose a project that simply rebuilds an existing system using service technologies, all you’ve done is incurred cost unless those services now create the potential for new revenue sources (a business problem, not a technology problem), or cost consolidation. Cost consolidation can come from IT on its own through reduction in maintenance costs, although if you’re replacing one homegrown system with another, you only reduce costs if you reduce staff. If you get rid of redundant vendor systems, clearly there should be a reduction in maintenance fees. If you’re shooting for revenue gain, however, the burden falls not to IT, but to the business. IT can only control the IT component of the project cost and we should always be striving to reduce that through reuse and improved tooling. Ultimately, however, the return is the responsibility of the business. If the effort doesn’t produce the revenue gain due to inaccurate market analysis, poor timing, or anything else, that’s not the fault of SOA.
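As a rough illustration of that measurement, here is a minimal sketch using the conventional (benefit minus cost) divided by cost form of ROI. The function name, the fixed multi-year horizon, and all of the dollar figures are hypothetical assumptions, chosen only to contrast a pure rebuild with a consolidation effort.

```python
def project_roi(project_cost, annual_maintenance, annual_revenue_gain,
                annual_cost_reduction, years):
    """ROI over a planning horizon: (total benefit - total cost) / total cost."""
    total_cost = project_cost + annual_maintenance * years
    total_benefit = (annual_revenue_gain + annual_cost_reduction) * years
    return (total_benefit - total_cost) / total_cost


# Hypothetical: rebuild an existing system with service technologies,
# with no new revenue and no maintenance savings. All cost, no return.
rebuild = project_roi(500_000, 50_000, 0, 0, years=3)

# Hypothetical: same spend, but redundant vendor systems are retired,
# cutting $300,000 a year in maintenance fees.
consolidation = project_roi(500_000, 50_000, 0, 300_000, years=3)

print(f"rebuild: {rebuild:.0%}, consolidation: {consolidation:.0%}")
```

Note that nothing in the calculation knows whether services were used. The inputs that move the result are the business ones.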

There are two last points I want to make, even though this entry has gone longer than I expected. First, Dana made the following statement in his post:

So in a pilot project, or for projects driven at the departmental level, SOA can and should show financial hard and soft benefits over traditional brittle approaches for applications that need integration and easy extensibility (and which don’t these days?).

I would never expect a positive ROI on a pilot project. Pilots should be run with the expectation that there are still unknowns that will cause hiccups in the project, causing it to run at a higher cost than a normal project. A pilot will then result in a more standardized approach for subsequent projects (the extend phase in my maturity model discussions) where the potential can be realized. Pilots should be a showcase for the potential, but they may not be the project that realizes it, so be careful in what you promise.

Dana goes on to discuss the importance of incremental gains from every project, and this I agree with. As he states, it shouldn’t be an “if we build it, they will come” bet. The services you choose to build in initial projects should be ones where you have a high degree of confidence that they will either be reused or be modified in the future, with the more fine-grained boundaries allowing those modifications to be performed at a lower cost than was previously the case.

Second, SOA is an exercise in strategic planning. Every organization has staff that isn’t doing project work, and some subset of that staff is doing strategic planning, whether formally or informally. Without the strategic plan, you’ll be hard pressed to have accurate predictions on future gains, thus making all of your ROI work pure speculation, at best. There’s always an element of speculation in any estimate, but it shouldn’t be complete speculation. So, the question then is not about separate funding for SOA. It’s about looking at what your strategic planners are actually doing. Within IT, this should fall to Enterprise Architecture. If they’re not planning around SOA, then what are they planning? If there are higher priority strategic activities that they are focused on, fine. SOA will come later. If not, then get to work. If you don’t have enterprise architecture, then who in IT is responsible for strategic planning? Put the burden on them to establish the SOA direction, at no increase in cost (presuming you feel it is higher priority than their other activities). If no one is responsible, then your problem is not just SOA, it’s going to be anything of a strategic nature.

Models of EA

One thing that I’ve noticed recently is that there is no standard approach to Enterprise Architecture. Some organizations may have Enterprise Architecture on the organizational chart, other organizations may merely have an architectural committee. One architecture team may be all about strategic planning, while another is all about project architecture. Some EA teams may strictly be an approval body. I think the lack of a consistent approach is an indicator of the relative immaturity of the discipline. While groups like Shared Insights have been putting on Enterprise Architecture conferences for 10 years now, there are still many enterprises that don’t even have an EA team.

So what is the right approach to Enterprise Architecture? As always, there is no one model. The formation of an EA team is often associated with some pain point in the enterprise. In some organizations, there may be a skills gap necessitating the formation of an architecture group that can fan out across multiple projects, providing project guidance. A very common pain point is “technology spaghetti.” That is, over time the organization has acquired or developed so many technology solutions that the organization may have significant redundancy and complexity. This pain point can typically result in one of two approaches. The first is an architecture review board. The purpose of the board is to ensure that new solutions don’t make the situation any worse, and if possible, they make it better. The second approach is the formation of an Enterprise Architecture group. The former doesn’t appear on the organization chart. The latter does, meaning it needs day to day responsibilities, rather than just meeting when an approval decision is needed. Those day to day activities can be the formation of reference architectures and guiding principles, or they could be project architecture activities like the first scenario discussed. Even in these scenarios, however, Enterprise Architecture still doesn’t have the teeth it needs. Reference architectures and/or guiding principles may have been created, but these end state views will only be reached if a path is created to get there. This is where strategic planning comes into play. If the EA team isn’t involved in the strategic planning process, then they are at the mercy of the project portfolio in achieving the architectural goals. It’s like being the coach or manager of a professional sports team but having no say whatsoever in the player personnel decisions. The coach will do the best they can, but if they were handed players who are incompatible in the clubhouse or missing key skills necessary to reach the playoffs, they won’t get there.

You may be thinking, “Why would anyone ever want an EA committee over a team?” Obviously, organizational size can play a factor. If you’re going to form a team, there needs to be enough work to sustain that team. If there isn’t, then EA becomes a responsibility of key individuals that they perform along with their other activities. Another scenario where a committee may make sense is where the enterprise technology is largely based on one vendor, such as SAP. In this case, the reference architecture is likely to be rooted in the vendor’s reference architecture. This results in a reduction of work for Enterprise Architecture, which again, has the potential to point to a responsibility model rather than a job classification.

All in all, regardless of what model you choose for your organization, I think an important thing to keep in mind is balance. An EA organization that is completely focused on reference architecture and strategic planning runs the risk of becoming an ivory tower. They will become disconnected from the projects that actually make the architecture a reality. The organization runs a risk that a rift will form between the “practitioners” and the “strategists.” Even if the strategists have a big hammer for enforcing policy, that still doesn’t fix the cultural problems which can lead to job dissatisfaction and staff turnover. On the flip side, if the EA organization is completely tactical in nature, the communication that must occur between the architects to ensure consistency will be at risk. Furthermore, there will still be no strategic plan for the architecture, so decisions will likely be made according to short term needs dominated by individual projects. The right approach, in my opinion, is to maintain a balance of strategic thinking and tactical execution within your approach to architecture. If the “official” EA organization is focused on strategic planning and reference architecture, they must come up with an engagement model that allows bi-directional communication with the tactical solution architects to occur often. If the EA team is primarily tasked with tactical solution architecture, then they must establish an engagement model with the IT governance managers to ensure that they have a presence at the strategic planning table.

How many versions?

While heading back to the airport from a recent engagement, Alex Rosen and I had a brief discussion on service versioning. He said, “you should blog on that.” So, thanks for the idea, Alex.

Sooner or later, as you build up your service library, you’re going to have to make a change to the service. Agility is one of the frequently touted benefits of SOA, and one way of looking at it is the ability to respond to change. When this situation arises, you will need to deal with versioning. In order to remain agile, you should prepare for this situation in advance.

There are two extreme viewpoints on versioning, and not surprisingly, they match up with the two endpoints associated with a service interchange- the service consumer and the service provider. From the consumer’s point of view, the extreme stance would be that the number of versions of a service remains uncapped. In this way, systems that are working fine today don’t need to be touched if a change is made that they don’t care about. This is great for the consumer, but it can become a nightmare for the provider. The number of independent implementations of the service that must be managed by the provider is continually growing, increasing the management costs and thereby reducing the potential gains that SOA was intended to achieve. In a worst-case scenario, each consumer would have their own version of the service, resulting in the same monolithic architectures we have today, except with some XML thrown in.

From the provider’s point of view, the extreme stance would be that only one service implementation ever exists in production. While this minimizes the management cost, it also requires that all consumers move in lock step with the service, which is very unlikely to happen when there is more than one consumer involved.

In both of these extreme examples, I’m deliberately not getting into the discussion of what the change is. While backwards compatibility can have influence on this, regardless of whether the service provider claims 100% backwards compatibility or not, my experiences have shown that both the consumer and the provider should be executing regression tests. My father was an electrician, and I worked with him for a summer after my freshman year in college. He showed me how to use a “wiggy” (a portable voltage tester) for checking whether power was running to an outlet, and told me “If you’re going to work an outlet, always check if it’s hot. Even if one of the other electricians or even me tells you the power is off, you still check if it’s hot.” Simply put, you don’t want to get burned. Therefore, there will always be a burden on the service consumers when the service changes. The provider should provide as much information as possible so that the effort of the consumer is minimized, but the consumer should never implicitly trust that what the provider says is accurate without testing.

Back to the discussion: if we have these two extremes, the right answer is somewhere in the middle. Choosing an arbitrary number isn’t necessarily a good approach. For example, suppose the service provider states that no more than 3 versions of a service will be maintained in production. If high demand means that the service changes every 3 months, the version released in January will have to be decommissioned by October, when the fourth version ships, after only nine months in production. If the consumer of that first version is only touched every 12 months, you’ve got a problem. You’re now burdening that consumer team with additional work that does not fit into their normal release cycle.
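The arithmetic behind that example can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions that are mine, not part of any real governance tool: the provider releases at a perfectly regular interval, a version is retired the moment the cap is exceeded, and the consumer adopts a version on the day it ships. The function names are hypothetical.

```python
def version_lifetime_months(max_versions: int, provider_interval_months: int) -> int:
    """Months a version stays in production before the cap forces it out.

    Assumes a new version ships every `provider_interval_months`, so a
    version is retired when the release `max_versions` later arrives.
    """
    return max_versions * provider_interval_months


def min_version_cap(consumer_cycle_months: int, provider_interval_months: int) -> int:
    """Smallest cap that keeps a version alive for a full consumer cycle.

    Assumes the consumer picked up the version on its release date.
    Uses integer ceiling division to avoid floating-point rounding.
    """
    return -(-consumer_cycle_months // provider_interval_months)


# The example from the text: a cap of 3 versions, a release every 3 months,
# and a consumer that is only touched every 12 months.
lifetime = version_lifetime_months(3, 3)
needed_cap = min_version_cap(12, 3)
print(f"Each version lives {lifetime} months; a 12-month consumer needs a cap of {needed_cap}.")
```

A consumer that adopts a version partway through its life has less time left than this, so a real policy would likely need some extra headroom on top of the computed cap.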

In order to come up with a number that works, you need to look at both the release cycle of the consuming systems and the release cycle of the providers, and find a number that allows consumers to migrate to new versions as part of their normal development efforts. If you read that carefully, however, you’ll see the assumption. This approach assumes that a “normal release cycle” actually exists. Many enterprises I’ve seen don’t have this. Systems may be released and not touched for years. Unfortunately, there’s no good answer for this one. This may be a symptom of an organization that is still maturing in its development processes, continually putting out fires and addressing pain points, rather than reaching a point of continuous improvement. This is representative of probably the most difficult part of SOA: the culture change. My advice for organizations in this situation is to begin to migrate to a culture of change. Rather than putting an arbitrary cap on the number of service versions, you should put a cap on how long a system can go without having a release. Even if it’s just a collection of minor enhancements and bug fixes, you should ensure that all systems get touched on a regular basis. When the culture knows that regular refreshes are part of the standard way of doing business, funding can be allocated off the top, rather than having to be continually justified against major initiatives that will always win out. It’s like our health: are you better off having regular preventative visits to your doctor in addition to the visits when something is clearly wrong? Clearly. Treat your IT systems the same way.

Teaming Up for SOA

I recently “teamed up” with Phil Windley. He interviewed me for his latest InfoWorld article on SOA Governance, which is now available online and is in the March 5th print issue. Give it a read and let me know what you think. I think Phil did a great job in articulating a lot of the governance challenges that organizations run into. Of the areas where I was quoted, the one that I think is a significant culture change is the funding challenge. It’s not just about getting funding for shared services, which is a challenge on its own. It’s also a challenge of changing the way that organizations make decisions so that architectural elements are part of the decision. Many organizations that I have dealt with tend to be schedule-driven. That is, the least flexible element of the project is schedule. Conversely, the thing that always gives is scope. Unfortunately, it’s not usually visible scope; it’s usually the difference between taking the quickest path (tactical) and the best path (strategic). If you’re one of many organizations trying to do grassroots SOA, this type of IT governance makes life very difficult, as the culture rewards schedule success, not architectural success. It’s a big culture shift. Does your Chief Architect have a seat at the IT Governance table?

Anyway, I hope you enjoy the article. Feel free to post your questions here, and I’d be happy to follow up.