Governance in the Clouds? No thank you.

I’m sure David Linthicum is growing to expect a follow up blog from me after his “SOA Governance Monday” posts. True to form, I couldn’t let this week’s entry go by without commenting. There’s a good reason for that, however. Thanks to the good people over at Packt Publishing, my first book, SOA Governance, is now available for pre-order from Packt, with expected availability in October. It will also be available from your favorite online bookseller. Anyway, back to Dave’s blog entry.

The theme of this entry is SOA Governance as a service, which Dave states could be “a complete design time and runtime SOA governance system delivered out of the cloud.” I couldn’t help but think of the animation from Monty Python’s Flying Circus with the hand (or more frequently, foot) of God emerging from the clouds and squishing something beneath it. All humor aside, my first thoughts immediately came back to my usual comments on Dave’s past governance posts, which is that he’s focusing too much on the tools, and not on governance. His post probably should have been titled “Registry/Repository as a Service.” Of course, that’s what the original authors of UDDI tried to push, and that never came close to realizing that vision, but admittedly, the landscape has changed.

I have no issues with providing a registry/repository as a service. Certainly, the querying interface must be available as a service for any company exposing services outside their firewall. Likewise, if I’m consuming many services from the cloud, it would be great to let someone else handle putting all of those services into a common, queryable location, rather than me having to establish some form of federation or synchronization between my internal registry/repository and the registries/repositories of the service providers in the cloud. This is no different than the integration problem faced by a company that builds some services from scratch, but gets others from third-party products like SAP that may have their own registry/repository, like SAP ESR.

Exposing the publishing interface is another story. If we get into the public service marketplace, we’d need this, but I think that’s a niche market today. Even if we consider a scenario where I deploy services into the cloud, I would argue that the publishing service is internal to the cloud provider. In short, the registry/repository capabilities would be part of the service hosting service (is that confusing or what?) made available by the cloud provider.

Back to governance. My constant theme on SOA governance is that it is about people, policies, and process. The only role of tools is to make the processes more efficient. The cloud can only provide tooling. The degree to which you will need a registry/repository in the cloud will be completely dependent on the degree to which the rest of your tooling is in the cloud. If your services are deployed in the cloud, then it follows that the cloud must provide policy-driven run-time capabilities, such as request throttling, security, metric collection, etc. If your services are developed in the cloud, then the cloud must also provide metrics, code inspection, automated testing capabilities, and the same things that the design time tools used inside the enterprise provide. The cloud cannot provide people, although I guess if a company wanted to outsource all of IT, and take a crowd-based governance model where everything they did would be open to the scrutiny of the developer community at large, then putting it all in the cloud would be a necessity.
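To make "policy-driven run-time capabilities" a little more concrete, here is a minimal sketch of request throttling using a token bucket. This is my own illustration, not anything from Dave's post or from a particular cloud provider: the `POLICIES` table, the consumer names, and the `handle_request` function are all hypothetical, and a real platform would load its policies from wherever they are governed rather than from a dictionary in code.

```python
import time


class TokenBucket:
    """Allow roughly `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the behavior can be tested
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill tokens for the time elapsed since the last call.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical policy table: consumer name -> (requests per second, burst size).
POLICIES = {"partner-a": (5, 10), "internal-batch": (1, 2)}
buckets = {name: TokenBucket(rate, cap) for name, (rate, cap) in POLICIES.items()}


def handle_request(consumer):
    """Return True if the request may proceed under the consumer's policy."""
    bucket = buckets.get(consumer)
    # Consumers without a policy are rejected in this sketch.
    return bucket is not None and bucket.allow()
```

Notice that the code only enforces the policy. Deciding that "partner-a" gets five requests per second, and who is allowed to change that number, is still a job for people and process.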

The one upside to this notion of a registry/repository, and all of the metadata associated with consumer/provider relationships, living out there in the cloud is that some large company with massive data centers (e.g., Google) could run their magic over all of that data and begin to extract best practices for governing consumer/provider relationships, codifying policies, etc. Dave did call this out in his post, stating:

As the services are revised so are the design artifacts and the policies, both shared and private. In short, you’re taking advantage of the community aspect of SOA governance delivered as a service to do most of the work for you… 100,000 heads are better than one.

While I don’t think the majority of large enterprises would be willing to allow their data to be analyzed in that manner today, it won’t surprise me at all if it happens in the future. If we think about it, this type of analysis on vendor contracts with enterprises is already done by companies like Gartner, so why shouldn’t a company like Google do the same for consumer/provider interactions with SOA? Even if that happens, it’s still not governance, it’s only analysis, which at best provides better information for good governance. It won’t govern for you unless you choose to let it, at which point you’re stating that the utilization of technology is a complete commodity for you, and you’ll just take whatever the big brother, be it Gartner, Google, or anyone else, tells you is the norm.

Best of Breed or Best Fit?

I saw the press release from SoftwareAG that announced their “strategic OEM partnership” with Progress Software for their Actional products. While I’m not going to comment on that particular arrangement, I did want to comment on the challenge that we industry practitioners face when trying to leverage vendor technologies these days.

There has been a tremendous amount of consolidation in the SOA space. There’s also been a lot of consolidation in the Systems Management space, another area where I pay a lot of attention. Unfortunately, the challenge still comes down to an integration problem. The smaller companies may be able to be more nimble and add desired capabilities. This approach is commonly referred to as a “best of breed” approach, where you pick the product that is the best for the immediate needs in a somewhat narrow area. Eventually, you will need to integrate those systems into something larger. This is where a “best fit” approach sometimes comes into play. Here, the desire is to focus more on breadth of capability than on depth of capability.

The definition of what is appropriate breadth is always changing, which is why many of the “best fit” vendors have grown by acquisition rather than continued enhancements and additions to their own solutions. Unfortunately, this approach doesn’t necessarily make the integration challenges go away. Sometimes it only means that a vendor is well positioned to offer consulting services as part of their offering, rather than having to go through a third party systems integrator. It does mean that the customer has a “single throat to choke,” but I don’t know about you, I’d much rather have it all work and not have to choke anyone.

This recent announcement is yet another example of the relationships between vendors that can occur: OEM relationships, rebranding, partnerships, etc. Does it mean that we as end users get a more integrated product? I think the answer is a firm maybe.

The only way that makes sense to me is to always retain control of your architecture. It doesn’t do any good to ask the questions, “Does your product integrate with foobar?” or “How easy is it to integrate with such-and-such?” You need to know the specifics of where and how you want these systems to integrate, and then compare that to what the vendors have to say, whether it’s all within their own suite of branded products or involves partners and OEM agreements. The more specifics you have the better. You may find that highly integrated suites are integrated in name only, or maybe you’ll find that the suite really does operate as a well-oiled machine. Perhaps you’ll see a small vendor that has worked their tail off to integrate seamlessly into a larger ecosystem, and perhaps you’ll find a small vendor that is best left as an island in the environment.

Then, after getting answers, go through a POC effort to actually prove it out and get your hands dirty (you execute the POC, not the vendor). There are many choices involved in integrating these systems, such as what the message schemas will be, and the mechanisms of the integration itself. Are you integrating “at the glass” via cut and paste between applications? Are you integrating in the middle via service interactions in the business tier? Or are you integrating at the data layer, either through direct database access or through some data integration/MDM-like layer? Just those questions alone can cause significant differences in your architecture. The only way you’ll see what’s really involved with the integration effort is to sit down and try it out. Just do so after first defining how you’d like it to work through a reference architecture and questioning the vendors on how well they map to it; then get your hands dirty in a POC and actually try to make it work as advertised in those discussions.

Say what? Focus on “what” rather than “how”

I just read Joe McKendrick’s post called “Debate rages over SOA’s ‘cloudy’ future.” It continues the seemingly endless debate these days on WS-* vs. REST, WOA vs. SOA, etc. For the most part, I’ve avoided this discussion, but I finally decided to actually make a statement on it. While there is plenty of value in these discussions, I’d argue that their focus has been on how IT implements solutions. What they don’t focus on is what solutions IT builds, which is where I believe there is much more value to be gained. Are there opportunities for incremental gains in using WS-*/REST/etc.? Absolutely. But is there a change in the solutions that we are delivering other than the implementation under the covers? Make the wrong decisions on what gets built (or bought), and it won’t matter what technology was used to build it, because that will be far more of a limiting factor than whether it was built using Java, C#, Python, WS-*, REST, or anything else. It’s disappointing that so much of the discussion on SOA has focused on the “how” rather than on “what.” Unfortunately, as corporate IT practitioners, we’re frequently only asked to solve the “how,” and not involved with the “what,” so I’m not at all surprised that this is the state we find ourselves in. We need to continue to work to understand the business that our solutions support in order to be able to challenge the “what” and demonstrate the business value that can be provided by redefining “what,” eventually leading to sitting at the table as part of the team that decides “what.”

More on ITIL and SOA

In his “links” post, James McGovern was nice enough to call additional attention to my recent ITIL and SOA post, but as usual, James challenged me to add additional value. Here’s what he had to say:

Todd Biske provides insight into how ITIL can benefit SOA but misses an opportunity to provide even more value. While it is somewhat cliche to talk about continual process improvement, it would be highly valuable to outline what types of feedback do operations types observe that could benefit the software development side of the house.

I thought about this, and it actually came down to one word: measurement. You can’t improve what you’re not measuring. It’s debatable as to whether or not operations does any better than software development in measuring the service they provide, but operations is probably in a better position to do so. Why? There is less ambiguity about the service being provided. For example, a common service from operations in big IT shops is building servers. They can measure how many servers they’ve built, how quickly they’ve been built, and how correctly they’ve been built, among other things.

In the case of software development, is the service being provided software development, or is it the capability provided by the software? I’d argue that most shops are focused on the former. If you measure the “software development” service, you’ll probably measure whether the effort was completed on time and on budget. If, instead, you measure based on the capability provided by the software, it now becomes about the business value being provided by the software, which, in my opinion, is the more important factor. Taking this latter view also positions the organization for future modifications to the solutions. If my focus is solely on time and budget, why wouldn’t I disband the team when the project is done? The team has no vested interest in adding additional value. They may be challenged on some other project to improve their delivery time or budget accuracy, but there’s no connection to business value. Putting it very simply, what good does it do to deliver an application on time and on budget that no one uses?

So, back to the question: what can we learn from the ops side of the world? If ops has drunk the ITIL kool-aid, then they should be measuring their service performance, the goals for it should be reflected in the individual goals of the operations team, and it should be something that allows for improvement over time. If the measurement falls into the “one-time” measurement category, like delivering on-time and on-budget, that should be a dead giveaway that you may be measuring the wrong thing, or not taking a service-based view on your efforts.

ITIL and SOA

I’ve been involved in some discussions recently around the topic of ITIL Service Management. While I’m no ITIL expert, the little bit of information that I’ve gleaned from these discussions has shown me that there are strong parallels between SOA and ITIL Service Management.

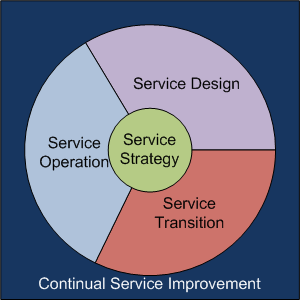

There’s the obvious connection that both ITIL Service Management and SOA contain the term “service,” but it goes much deeper than that (otherwise, this wouldn’t be worth posting). There are five major domains associated with ITIL: service strategy, service design, service transition, service operation, and continual service improvement. Here’s a picture that I’ve drawn that tries to represent them:

Keeping it very simple, service strategy is all about defining the services. In fact, there’s even a process within that domain called “Service Portfolio Management.” Service Design, Service Transition, and Service Operation are analogous to the traditional software development lifecycle (SDLC): service design is where the service gets defined, service transition is where the service is implemented, and service operation is where the service gets used. Continual Service Improvement is about watching all aspects of each of these domains and striving to improve them.

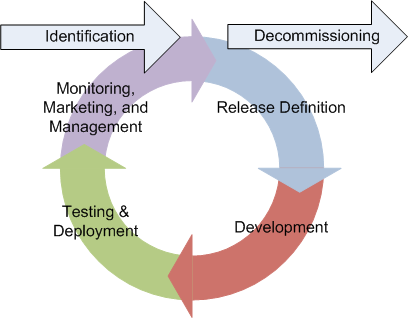

Now back to SOA side of the equation. I’ve previously posted on the change to the development process that is possible with SOA, most recently, this one. The essence of it is that SOA can shift the thinking from a traditional linear lifecycle that ends when a project goes live to a circular lifecycle that begins with identification of the service and ends with the decommissioning of the service. Instead, the lifecycle looks like this:

The steps of release definition, development, testing, and deployment are the normal SDLC activities, but added to this is what I call the triple-M activity: monitoring, marketing, and management. We need to do the normal “keep the lights on” activities associated with monitoring, but we also need to market the service and ensure its continued use over time, as well as manage its current use and ensure that it is delivering the intended business value. If improvements can be made through either improvements in delivery or by delivering additional functionality, the cycle begins again. This is analogous to the ITIL Service Management Continual Service Improvement processes. While not shown, clearly there is some strategic process that guides the identification and decommissioning activities associated with services, such as application portfolio management. Given this, these two processes have striking similarities.

What’s the point?

The message that I want you to take away is that we should be thinking about application and “web” service delivery in the same way that we are thinking about ITIL service delivery. Many people think ITIL is only about IT operations and the infrastructure, but that’s not the case. If you’re a developer, it equally applies to the applications that you are building and delivering. Think of them in terms of services and the business value that they deliver. When the project for version 1 ends, don’t stop thinking about it. Put in place appropriate metrics and reporting to ensure that it is delivering the intended value and watch it on a regular basis. Understand who your “users” are (it could be other systems and the people responsible for them), make sure they’re happy, and seek out new ones. Adopt a culture of continuous improvement, rather than simply focusing on meeting the schedule and the budget and then waiting for the next project assignment.

Governance: Are you on the left or the right?

Dan Foody of Progress Software posted a followup to my last post entitled, “Less Governance is Good Governance.” Clearly, when it comes to SOA governance, Dan falls on the right. For those of you not in the US, the right, when used in the political sense, refers to the Republican party. The Republican party is typically the champion of less government. On the opposite side of the political fence, over on the left, are the Democrats. The Democratic party is typically associated with big government and more regulation. This post isn’t about US politics, however, it’s about SOA governance.

In line with his right-facing view, Dan’s final statement in his post says, “As much governance as is absolutely necessary, but no more.” Well, the United States has had successful times when each party held the majority in Congress, and likewise for the presidency. So, I don’t think that it’s a uniform truth that less governance is good governance. Good governance is not defined by how quickly your team comes to consensus, it’s defined by whether or not you achieve your desired behavior. Sometimes, that may only require a few policies. Other times, it may require lots of policies, and lots of regulation. It is completely dependent on the current behavior of your organization, the behavior you need to have to be successful, and the degree to which everyone in the organization understands it and agrees with it, which is why communication is so important. Left? Right? Both can be successful, it’s all about where you are and where you need to go.

Governance does not imply Command and Control

In a recent discussion I had on SOA Governance with Brenda Michelson, program director for the SOA Consortium, she passed along a link to this article from Business Week. It’s the story of Tom Coughlin, coach of the Super Bowl champion New York Giants, and how he had to change from his reputation of an “autocratic tyrant” in order for the team to ultimately succeed.

What does this have to do with governance? When you think of governance, what comes to mind? My suspicion is that for most people, it’s not a positive image. At its extreme worst, I’ve heard many people describe governance as a slow, painful process that requires investing significant time preparing for a review by people who are out of touch with what a project is trying to do that ultimately results in the reviewers flaunting authority, the project team taking their lumps, and then everybody goes back to doing what they were doing with no real change in behavior other than increased animosity in the organization. In other words, exactly the situation that Tom Coughlin had with previous teams.

The fact is that governance is a required activity of any organization. Governance is the way in which an organization leverages people, policies, and processes to achieve a desired behavior. In the case of the New York Giants, their desired behavior was winning the Super Bowl. In years past, the only people involved with setting the policies were the coaching staff, and the processes consisted of yelling and screaming. It didn’t work. When the desired behavior wasn’t achieved, change was needed, and that change was made in the governance. Players became involved in setting policies through a leadership council. That same council also became part of the governance process in both educating other players about the policies as well as ensuring compliance.

Unfortunately, when governance gets discussed, people naturally assume a command and control structure. When an individual sees a new statement “Thou shall do this” or “Thou shall not do that,” they think command and control, especially if they weren’t involved with the setting of the policy. As we grow, this sentiment becomes increasingly important. How many of us that live in large countries feel our politicians are disconnected from us, even if they are elected representatives? Those policies still need to be set, however, and there will always be a need for some form of authority to establish the policies. The key is how the “authority” is established and how they then communicate with the rest of the organization. If the authority is established by edict, and only consists of one-way communication (down) from the authority, guess what? You have a dictatorship with a command-and-control structure. If you set up representative groups, whether formal or informal, and focus on bi-directional communication, you can conquer that command-and-control mentality. The risk with that approach is that the need for “authority” is forgotten. Decisions still must be made and policies must be set, and if the group-think can’t do that, there will still be problems. Morale may not be impacted in the same negative way as with a command-and-control approach, but the end result is that you’re still not achieving the desired behavior.

Good governance is necessary for success, and open communication and collaboration are necessary for good governance. My recommendation is that if you are in a position of authority (a policy setter and/or enforcer), you must keep the lines of communication open with your constituents and help them to set policies, change policies that are doing more harm than good, and understand the reasons behind policies. If you are a constituent, you need to participate in the process. If a policy is causing more harm than good in your opinion, make it known to the authorities. Sometimes that may result in a policy change. Sometimes it may result in you changing your expectations and seeing the reasons why compliance is necessary.

Cloud versus Grid

I am back from vacation and trying to catch up on my podcasts. In an IT Conversations Technometria Podcast, Phil Windley spoke with Rich Wolski. Rich is working on Eucalyptus, an open source implementation of the Amazon EC2 interface.

Rich gave a great definition of the difference between grid computing and cloud computing. Grid computing typically involves a small number of users requesting big chunks of resources from a homogeneous environment. Cloud computing typically involves a large number of users with relatively low resource requirements from a heterogeneous environment.

Rich and Phil went on to discuss the opportunities for academic research in the cloud computing and virtualization spaces. If you are considering when and how to leverage these technologies, give it a listen.

Vacation

No blogs for the next two weeks, most likely, unless I decide to post a picture from Colorado. We’re going on a two week family vacation. It will be a good break, as I’ve been spending a lot of time working on a book, and the first draft has just been completed. More on that to come! Thanks for reading, as always!

Information in the Enterprise

A thought occurred to me today as I was walking into work. Why is it that the first place I go, and probably most of my coworkers, when I want to find out something is the Google search box in my browser? Why don’t I use the search box on our corporate intranet?

There’s certainly no doubt that there’s a wealth of information out there on the internet. What’s interesting is that one of the reasons I started this blog back almost 3 years ago is I thought there were things I was encountering in my research for work that others might find valuable. It was probably about 6 months after I started blogging that someone at work did a Google search on something SOA or governance related, and up popped my blog in his results. It seems rather silly that a colleague at work had to go out to the public internet to find something that I had to say.

Being an Enterprise Architect, I create my fair share of Visio diagrams, PowerPoint presentations, and Word documents. In this day and age, there’s absolutely no reason that these things can’t be put into something like SharePoint and indexed so they are available via the search box on the intranet page. Unfortunately, not everything I do at work winds up in one of those documents, so I need to apply the same principles I used in creating this blog to what I do at work, and start making some of those discussions available internally, as well. What’s nice about internal blogs is they can be put into your RSS reader right alongside external feeds. That won’t happen with search, unfortunately. This is actually one situation where I think it could be useful to have a company-specific version of the major browsers that would first direct searches in the default search box to the internal engine, and then follow that up with general search results from Google or somewhere else.
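As a rough sketch of that "internal first, then external" idea, the search box could call something like the function below. Everything here is hypothetical: `search_intranet` and `search_web` are stand-ins for whatever internal and public search engines you actually have, and the URLs are made up for illustration.

```python
def federated_search(query, internal_search, external_search, limit=10):
    """Return internal hits first, then external hits, without duplicates."""
    results = []
    seen = set()
    sources = (("internal", internal_search), ("external", external_search))
    for source, search in sources:
        for url in search(query):
            if url not in seen:
                seen.add(url)
                results.append((source, url))
    return results[:limit]


# Hypothetical stand-ins for the real search back ends.
def search_intranet(query):
    return ["http://intranet.example/blogs/soa-governance"]


def search_web(query):
    return [
        "http://intranet.example/blogs/soa-governance",
        "http://www.example.com/soa-governance-article",
    ]


hits = federated_search("soa governance", search_intranet, search_web)
```

Because the internal engine is consulted first, anything a colleague has written shows up ahead of the public results, and a page found by both engines is listed only once.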

I encourage all of my readers who work in enterprises to think about how they can make more of their knowledge available to their co-workers through their intranets. If you don’t have internal support for blogging, wikis, and a decent search engine, it may be time to make the investment.

Blogging from my iPhone

I’m testing out the new WordPress iPhone application. Outside of it not handling categories properly (my mistake during my WordPress upgrade), it seems very tolerable for a quick post now and then. Cool!

A Key Challenge of Context Driven Architecture

The idea of context-driven architecture, as coined by Gartner, has been bouncing around my head since the Gartner AADI and EA Summits I attended in June. It’s a catch phrase that basically means that we need to design our applications to take into account as much as possible of the context in which they are executed. It’s especially true for mobile applications, where the whole notion of location awareness has people thinking about new and exciting things, albeit more so in the consumer space than in the enterprise. While I expect to have a number of additional posts on this subject in the future, a recent discussion with a colleague on Data Warehousing inspired this post.

In the data warehousing/business intelligence space, there is certainly a maturity curve to how well an enterprise can leverage the technology. In its most basic form, there’s a clear separation between the OLTP world and the DW/BI world. OLTP handles the day to day stuff, some ETL (extract-transform-load) job gets run to put it into DW, and then some user leverages the BI tools to do analytics or run reports. These tools enable the user to look for trends, clusters, or other data mining type of activities. Now, think of a company like Amazon. Amazon incorporates these trends and clusters into the recommendations it makes for you when you log in. Does Amazon’s back-end run some sophisticated analytics process every time I log in? I’d be surprised if it did, since performing that data mining is an expensive operation. I’m guessing (and it is a guess, I have no idea on the technical details inside of Amazon) that the analytics go on in the background somewhere. If this is the case, then this means that the incorporation of the results of the analytical processing (not the actual analytics itself) is coded into the OLTP application that we use when we log in.

So what does this have to do with context-driven architecture? Well, what I realized is that it all comes down to figuring out what’s important. We can run a BI tool on the DW, get a visual representation, and quickly spot clusters, etc. Humans are good at that. How do you tell a machine to do that, though? We can’t just expect to hook our customer facing applications up to a DW and expect magic to happen. Odds are, we need to take the first step of having a real person look at the information, decide what is relevant or not with the assistance of analytical tools. Only then can we set up some regular jobs to execute those analytics and store the results somewhere that is easily accessible by an OLTP application. In other words, we need to do analysis to figure out what the “right” context is. Once we’ve established the correlation, now we can begin to leverage that context in a way that’s suitable for the typical OLTP application.
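Here is a minimal sketch of that split between background analytics and the OLTP application. To be clear, this is not how Amazon does it (I have no idea), and the `ORDERS` data, the function names, and the simple "bought together" counting are all my own assumptions, chosen only to show the shape of the idea: the expensive work runs as a batch job, and the customer-facing code does nothing more than a lookup.

```python
from collections import Counter, defaultdict
from itertools import permutations

# Hypothetical order history, standing in for data extracted into the warehouse.
ORDERS = [
    {"book", "lamp"},
    {"book", "lamp", "desk"},
    {"book", "pen"},
    {"desk", "lamp"},
]


def build_recommendations(orders, top_n=2):
    """Batch job: for each item, find the items most often bought with it."""
    co_counts = defaultdict(Counter)
    for order in orders:
        # Count every ordered pair of distinct items in the same order.
        for item, other in permutations(sorted(order), 2):
            co_counts[item][other] += 1
    return {
        item: [other for other, _ in counts.most_common(top_n)]
        for item, counts in co_counts.items()
    }


# Run in the background (nightly, for example); the result is a plain lookup table.
RECOMMENDATIONS = build_recommendations(ORDERS)


def recommend_for(item):
    """OLTP path: a cheap lookup, with no analytics at request time."""
    return RECOMMENDATIONS.get(item, [])
```

The part the code can’t do for you is the decision that "bought together" is the relevant context in the first place. That still takes a person looking at the data.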

So, if you’re like me, and just starting to noodle on this notion, I’d first take a look at your maturity around your data warehousing and business intelligence tools. If you’re relatively mature in that space, then I expect that a leap toward context-driven applications probably won’t be a big stretch for you. If you’re relatively immature, you may want to focus on building that maturity up, rather than jumping into context-driven applications before you’re ready.

Perspective

I had to share this.

The Use of Incentives

As usual, I had David Linthicum’s Real World SOA Podcast on during my drive into work today. I’m not going to pick on Dave today, however, as he was just the messenger. In fact, I liked that this week’s podcast mentioned the need for systemic change several times, which is a message that we need to continue to send out. See my last post for more on that subject. Anyway, Dave walked through this post from Joe McKendrick of ZDNet, entitled “Ten ways to tell it’s not SOA.” I hadn’t read this yet, but one item in particular stuck out when Dave mentioned it:

5) If developers and integrators are not being incented or persuaded to reuse services and interfaces, it’s not SOA. Without incentives or disincentives, they will keep building their own stuff.

The word in there that concerns me is “incented.” Many advocates for reuse also recommend some form of incentive program. Clearly, incentives are a possible tool to leverage for behavior change, but we’re much smarter than Pavlov’s dogs. Sometimes, people get too focused on the incentive, and not enough on the behavior. How many times do professional athletes put up big numbers in the last year of a contract because they’re “incented” by the free agent market in the upcoming off-season, only to then flop back down to their career .237 average after signing their multi-million dollar deal? Years ago, I was on a tiger team investigating what it would take to achieve reuse at our organization, and a co-worker would simply say, “Their incentive is that they get to keep their job.” Too often, incentives focus on one-time behaviors, rather than on changes that we want to become normal behavior.

As a very specific example of the risk associated with incentives, I’ve previously posted on how we need to provide context on the degree to which a service might be applicable in my horizontal and vertical thinking post. If you are a developer working in a “vertical” domain, the opportunity to write shared services simply doesn’t exist. Should that developer be penalized for not producing a reusable service? An incentive focused on writing shared services is meaningless for that developer. It’s like making a blanket statement to a baseball team that anyone who hits 20 home runs in a season will get an extra million dollars. You don’t want everyone swinging for the fences every at bat. Sometimes you need to lay down a sacrifice bunt. What about the pinch hitter who only gets 100 at bats the whole season? Should they be unfairly penalized?

For me, there’s simply too big a risk of basing incentives on something that’s easy to quantify rather than on the actual desired behavior. When applied to a broad audience, such incentives also leave some people out in the cold, with no chance of earning the reward through no fault of their own. Incentives are best used where a one-time change in behavior is needed due to extenuating circumstances. They should not be used to create behavior that should be the norm to begin with. As my colleague said, for normal behavior, your incentive is that you get to keep your job.

Governance and SOA Success

Michael Meehan, Editor-in-Chief of SearchSOA.com, posted a summary of a talk from Anne Thomas Manes of the Burton Group given at Burton’s Catalyst conference in late June. In it, Anne presented the findings of a survey that she did with colleague Chris Haddad on SOA adoption.

Michael stated:

Manes repeatedly returned to the issues of trust and culture. She placed the burden for creating that trust on the shoulders of the IT department. “You’re going to have to create some kind of culture shift,” she said. “And you know what? You’ve been breaking their hearts for so many years, it’s up to you to take the first step.”

I’m very glad that Anne used the term “culture shift,” because that’s exactly what it is. If there is no change in the way IT defines and builds solutions other than slapping a new technology on the same old stuff, we’re not going to even put a dent in the perceptions the rest of the organization has about IT, and we are even at risk of making them worse.

The article went on to discuss Cigna Group Insurance and their success, after a previous failure. A new CIO emphasized the need for culture change, starting with understanding the business. The speaker from Cigna, Chad Roberts, is quoted in Michael’s article as saying, “We had to be able to act and communicate like a business person.” He also said, “We stopped trying to build business cases for SOA, it wasn’t working. Instead use SOA to strengthen the existing business case.” I went back and re-read a previous post that I thought made a similar point, but found that I hadn’t been this clear. I think Chad nails it.

In a discussion about the article in the Yahoo SOA group, Anne followed up with a few additional nuggets of wisdom.

One thing I found really surprising was that the people from the successful initiatives rarely talked about their infrastructure. I had to explicitly solicit the information from them. From their perspective, the technology was the least important aspect of their initiative.

This is great to hear. While plenty of us have stated again and again that SOA isn’t about applying WS-*/REST or buying an ESB, it still needs to be emphasized. A surprising comment, however, was this one:

They rarely talked about design-time governance, other than improving their SDLC processes. They implemented governance via better processes. Most of it was human-driven, although many use repositories to manage artifacts and coordinate lifecycle. But again, the governance effort was less important than the investment in social capital.

I’m still committed to my assertion that governance is critical to a successful SOA initiative, but only because governance is a means to effect behavioral change. The true success factor is changing behavior.

I think what we’re seeing here is the effect of governance becoming a marketing term. The telling statement is in Anne’s second paragraph: governance is a means to effect behavioral change. My definition of governance is the people, policies, and processes that an organization employs to achieve a desired behavior. It’s all about behavior change in my book. So, when the new Cigna CIO mandates that IT will understand the business first and think about technology second, that’s a desired behavior. What were the policies that ensured this happened? I’m willing to bet that there were some significant changes to the way projects were initiated at Cigna as part of this. Were the policies that, if adhered to, would lead to a funded project documented and communicated? Did they educate first, and then enforce only where necessary? That sounds like governance to me, and guess what: it led to success!