Setting SOA Expectations

It’s been a while since I’ve blogged (a really nasty sinus infection contributed to that), so this comic seemed appropriate.

Anyway, I, like many other people, did a double-take after I read this blog post from Anne Thomas Manes of the Burton Group. In it, Anne states:

It has become clear to me that SOA is not working in most organizations. … I’ve talked to many companies that have implemented stunningly beautiful SOA infrastructures … deploying the best technology the industry has to offer … And yet these SOA initiatives invariably stall out. … They have yet to demonstrate how all this infrastructure yields any business value. More to the point, the techies have not been able to explain to the business units why they should adopt a better attitude about sharing and collaboration. … Thus far I have interviewed only one company that I would classify as a SOA success story.

I’ve always believed that the changes associated with SOA adoption were a long-term effort, at least 5 years or so, but it was very surprising to see Anne find only one success story, although further comments indicated she had talked to only 7 companies. 1 out of 7 certainly feels right to me. Things became even more interesting, though, with some comments on the post which indicated that Anne’s definition of success was, “Has the initiative delivered any of the benefits specified as the goals of the initiative?” She indicated that every company said that their goals were cost reduction and increased agility. My question is: how did they measure this?

Another recent post that weighs in on this was Mike Kavis’ post on EA, SOA, and change. Mike quotes Kotter’s 8 steps for transformation, which include creating a vision and communicating a vision. Taking this back to Anne’s comments, if a company’s SOA goals are increased agility, my first question is how do you measure your agility today? Then, where do you want it to be at some point in the future (creating the vision)? If you can’t quantify what agility is, how can you ever claim success? Questions about success then are completely subjective, rendering surveys that go across organizations somewhat meaningless. While cost reduction may appear to be more easily quantified, I’d argue that many organizations aren’t currently collecting metrics that can give an accurate picture of development costs other than at a very coarse-grained level. It would be very difficult to attribute any cost reduction (or lack thereof) to SOA or anything else that changed in the development process.

This brings me to the heart of this post: setting expectations for your SOA efforts and measuring the success of your SOA journey is not an easy thing to do. Broad, qualitative things like “increased agility” and “reduced costs” may be very difficult to attribute directly to an SOA effort and may also fail to address the real challenge with SOA, which is culture change. If culture change is now your goal, then you need to work to describe the before and after states, as well as some tactical steps to get there. In a comment I made on one of my own posts from quite some time ago, I spoke of three questions that all projects should be asked:

- What services does your solution use / expose?

- What events does your solution consume / publish?

- What business process(es) does your solution support?

The point of those three questions was that I felt that many projects today probably couldn’t provide answers to those questions. That behavior needs to change. Now while these were very tactical, they were also easily digestible by an organization. The first step of change was simply to get people thinking about these things. The future state certainly had solutions leveraging reusable services, but I didn’t expect projects to do so out of the gate. I did expect projects to be able to tell me what services and events they were exposing and publishing, though.

This type of process can then lead to one of the success factors that Anne called out in her comments which was the creation of a portfolio of services. Rather than starting out with a goal of “cost reduction” or “increased agility”, start out with a goal of “create a service portfolio.” This sets the organization up for an appropriate milestone on the journey rather than exclusively focusing on the end state. Without interim, achievable milestones, that end state will simply remain as an ever-elusive pot of gold at the end of the rainbow.
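To make this concrete, the answers to the three questions can be captured per project and rolled up into a first-cut service portfolio. The following is only a minimal sketch in Python: the `ProjectManifest` class, the `build_service_portfolio` function, and the project and service names are all hypothetical, invented for illustration, and not taken from any particular tool.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ProjectManifest:
    """Hypothetical record of one project's answers to the three questions."""
    name: str
    services_used: set[str] = field(default_factory=set)
    services_exposed: set[str] = field(default_factory=set)
    events_consumed: set[str] = field(default_factory=set)
    events_published: set[str] = field(default_factory=set)
    business_processes: set[str] = field(default_factory=set)


def build_service_portfolio(manifests):
    """Map each service name to the projects that expose it and that use it."""
    portfolio = defaultdict(lambda: {"providers": set(), "consumers": set()})
    for manifest in manifests:
        for service in manifest.services_exposed:
            portfolio[service]["providers"].add(manifest.name)
        for service in manifest.services_used:
            portfolio[service]["consumers"].add(manifest.name)
    return dict(portfolio)


# Illustrative data only; these projects and services are made up.
manifests = [
    ProjectManifest(
        name="billing-portal",
        services_used={"CustomerLookup"},
        services_exposed={"InvoiceQuery"},
        business_processes={"Order to Cash"},
    ),
    ProjectManifest(
        name="crm-refresh",
        services_exposed={"CustomerLookup"},
        events_published={"CustomerUpdated"},
    ),
]

portfolio = build_service_portfolio(manifests)
for service, entry in sorted(portfolio.items()):
    print(service, entry)
```

Even a list this simple gives you something to measure against: the number of services in the portfolio, and how many of them have more than one consumer, are milestones you can actually count.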

New Feature on The Conversations Network

First, I have to admit that I’m part of the 99.8% of IT Conversations subscribers that currently aren’t donating, but that will be changing in the very near future. Given that I listen to at least 3 or 4 programs from them per week, I have no excuse for not donating.

I was happy to hear in Doug Kaye’s message today that they’ve added a smart playlist function. I had tried their personal playlist function previously, and just as Doug pointed out, I didn’t use it due to the need to actively manage it. It was far easier for me to download everything and just fast forward through the programs that didn’t interest me. Now, I can simply enter the topics I’m interested in and the series I regularly listen to, like Phil Windley’s Technometria, Moira Gunn’s TechNation and BiotechNation series, and Jon Udell’s Interviews with Innovators. This is a great addition, so thank you IT Conversations and The Conversations Network. My membership donation will be coming shortly.

The misunderstood blogger

Thankfully, this has never happened to me.

Thanks to my father-in-law for passing this one along.

More on Service Lifecycle Management

I received two questions in my email regarding my previous post on Service Lifecycle Management, specifically:

- Who within an organization would be a service manager?

- To whom would the service manager market services?

These are both excellent questions, and really hit at the heart of the culture change. If you look at the typical IT organization today, there may not be anyone that actually plays the role of a service manager. At its core, the service manager is a relationship manager, managing the interactions with all of the service consumers. What makes this interesting is when you think about exposing services externally. Using the concept of relationship management, it is very unlikely that the service manager would be someone from IT; rather, it’s probably someone from a business unit that “owns” the relationship with partners. IT is certainly involved, and it’s likely that technical details of the service interaction are left to the IT staff of each company, but the overall relationship is owned by the business. So, if we only consider internal services, does the natural tendency to keep service management within IT make sense? This approach has certain risks associated with it, because now IT is left to figure out the right direction through potentially competing requirements from multiple consumers, all the while having the respective business units breathing down their necks saying, “Where’s our solution?” At the same time, it’s also very unlikely that the business is structured in such a way to support internal service management. Many people would say that IT is often better positioned to see the cross-cutting concerns of many of these elements.

So, there are really two answers to the question. The first answer is someone. Not having a service owner is even more problematic than choosing someone from either IT or the business who may have a very difficult task ahead of them. The second answer is that the right person is going to vary by organization. I would expect that organizations whose SOA efforts are very IT driven, which I suspect is the vast majority of them, would pick someone within IT to be the service manager. I would expect that person to have an analyst and project management background, rather than a technical background. After all, this person needs to manage the consumer relationship and understand their requirements, but they also must plan the release schedule for service development. For organizations whose SOA efforts are driven jointly with the business, having a service manager within a business organization will probably make more sense, depending on the organizational structure. Also, don’t forget about the business of IT. There will be a class of services, typically in the infrastructure domains, such as authentication and authorization services, that will probably always be managed out of IT.

On question number two, I’m going to take a different approach to my answer. Clearly, I could just say, “Potential service consumers, of course” and provide no help at all. Why is that no help? Because we don’t know who represents those service consumers. Jumping on a common theme in this blog, most organizations are very project-driven, not service or product driven. When looking for potential service consumers, if everything is project driven, those consumers that don’t exist in the form of a project can’t be found! I don’t have a background in marketing, but I have to believe that there are probably some techniques from general product marketing that can be applied within the halls of the business to properly identify the appropriate segment for a service. The real point that needs to be made is that a service manager cannot take the field of dreams approach of simply building it, putting some information into the repository, and then hoping consumers find it. They have to hit the pavement and go talk to people. Talk to other IT managers whom you know use the same underlying data that your service does. Talk to your buddies at the lunch table. Build your network and get the word out. At a minimum, when a service is first identified, send a blast out to current project managers and their associated tech leads, as well as those that are in the project approval pipeline. This will at least generate some just-in-time consumers. While this may not yield the best service, it’s a start. Once some higher-level analysis efforts have taken place to segment the business into business domains, then the right marketing targets may be more clearly understood.

Is Identity Your Enabler or Your Anchor?

I actually had to think harder than normal for a title for this entry because as I suspected, I had a previous post that had the title of “Importance of Identity.” That post merely talked about the need to get identity on your service messages and some of the challenges associated with defining what that identity should be. This post, however, discusses identity in a different light.

It occurred to me recently that we’re on a path where having an accurate representation of the organization will be absolutely critical to IT success. Organizations that can’t keep Active Directory or their favorite LDAP up to date with the organizational changes that are always occurring will find themselves saddled with a boat anchor. Organizations that are able to keep their identity stores accurate and up to date will find themselves with a significant advantage. An accurate identity store is critical to the successful adoption of BPM technology. While that may be more emerging, think about your operations staff and the need for accurate roles associated with the support of your applications and infrastructure. One reorg of operations and the whole thing could fall apart with escalation paths no longer in existence, incorrect reporting paths, and more.

So, before you go gung-ho with BPM adoption, take a good look at your identity stores and make sure that you’ve got good processes in place to keep them up to date. Perhaps that should be the first place you look to leverage the BPM technology itself!

ActiveVOS BUnit

While I don’t normally comment on press releases that I occasionally receive in email, one tidbit in a release from Active Endpoints about ActiveVOS™ 5.0 caught my eye:

Active Endpoints, Inc. (www.activevos.com), inventor of visual orchestration systems (VOS), today announced the general availability of ActiveVOS™ 5.0. …

Scenario testing and remote debugging. ActiveVOS 5.0 fundamentally and completely solves a major pain experienced by all developers: the question of how to adequately test loosely-coupled, message-based applications. ActiveVOS 5.0 includes a new BUnit (or “BPEL unit test”) function, which allows developers to simulate the entire orchestration offline, including the ability to insert sample data into the application. A BUnit can be created by simply recording a simulation in the ActiveVOS 5.0 Designer. Multiple BUnits can be combined into BSuites, or collections of smaller simulations, to build up entire test suites. Once deployed into a production environment, ActiveVOS 5.0 delivers precisely the same experience for testing and debugging a production orchestration as it does for an application in development. Remote debugging includes the ability to inspect and/or alter message input and output, dynamically change endpoint references and alter people assignments in the application.

Back in November, in my post titled Test Driven Model Development, I lamented the fact that when a new development paradigm comes along, like the graphical environments common in BPM tooling, we run the risk of taking one or more steps backward in our SDLC technologies. I used test-driven development as an example. As a result, I’m very happy to see a vendor in this space emphasizing this capability in their product. While it may not make a big difference in the business solutions out there, things like this can go a long way in getting some of the hard-core Java programmers to actually give some of these model-driven tools a shot.

Architect title

For all of my architect readers, give today’s Dilbert a glance. 🙂

March Events

Here are the SOA, BPM, and EA events coming up in March. If you want your events to be included, please send me the information at soaevents at biske dot com. I also try to include events that I receive in my normal email accounts as a result of all of the marketing lists I’m already on. For the most up to date list as well as the details and registration links, please consult my events page. This is just the beginning of the month summary that I post to keep it fresh in people’s minds.

- 3/3: ZapThink Practical SOA: Pharmaceutical and Health Care

- 3/4: Webinar: Implementing Information as a Service

- 3/6: Global 360/Corticon Seminar: Best Practices for Optimizing Business Processes

- 3/10 – 3/13: OMG / SOA Consortium Technical Meeting, Washington DC

- 3/10: Webinar: Telelogic Best Practices in EA and Business Process Analysis

- 3/11: BPM Round Table, Washington DC

- 3/12 – 3/14: ZapThink LZA Bootcamp, Sydney, Australia

- 3/13: Webinar: Information Integrity in SOA

- 3/16 – 3/20: DAMA International Symposium, San Diego, CA

- 3/18: ZapThink Practical SOA, Australia

- 3/18: Webinar: BDM with BPM and SOA

- 3/19: Webinar: Pega, 5 Principles for Success with Model-Driven Development

- 3/19: Webinar: AIIM Webinar: Records Retention

- 3/19: Webinar: What is Business Architecture and Why Has It Become So Important?

- 3/19: Webinar: Live Roundtable: SOA and Web 2.0

- 3/20: Webinar: Best Practices for Building BPM and SOA Centers of Excellence

- 3/24: Webinar: Telelogic Best Practices in EA and Business Process Analysis

- 3/25: ZapThink Practical SOA: Governance, Quality, and Management, New York, NY

- 3/26: Webinar: AIIM Webinar: Proactive eDiscovery

- 3/31 – 4/2: BPM Iberia – Lisbon

Beaming into Social Networks

I’m listening to Jon Udell’s latest innovator conversation, this time with Valdis Krebs, courtesy of IT Conversations. Valdis is a researcher in the area of social networks and he and Jon are discussing sites like Facebook, LinkedIn, Plaxo, MySpace, etc. One of the interesting points that Valdis makes is that social networking has always been a peer-to-peer process. Two people engage in some form of personal, direct communication to form a “connection.” This is the predominant form of building a network, rather than joining a club. The model of virtually all the social networking sites, by contrast, is one of “joining”.

The discussion brought me back to the late 90’s when I had purchased a PalmPilot. I actually owned one that had the U.S. Robotics logo on it versus the 3Com or Palm logos that came later. One of the features that came along later (I think it was when I upgraded to a Handspring device) was the ability to “beam” contact information to other Palm owners. The goal was to do away with business cards and instead “beam” information electronically. While I thought the technology was pretty cool, it didn’t survive because the PDA didn’t survive. It all got morphed into mobile device technology, and with the multitude of devices out there now, the ability to quickly share information between two devices disappeared.

I think this would be a great technology to bring back. I attended a conference back in December, and of course walked away with a number of business cards. I then had to take the time to put those contacts into my address book. Thankfully, as an iPhone owner, I only had to put them in one place for my personal devices, but I also had to enter them into my contacts on my work PC. Then came the step of adding all of these people to my networks on LinkedIn (at a minimum). I actually didn’t do this; most of the people had already sent me requests for the various social networks.

In thinking about this, I have to admit that this was way too difficult. What we need is the ability to share contact information electronically with our handheld devices via some short range networking technology like Bluetooth, and have that electronic information be “social network aware” so that as a result of the exchange, contacts are automatically added to friends/contact lists on all social networks that the two parties have in common. It should be an automatic add, rather than a trigger of email to each party of “do you want to add this person to your network?” An option would be to ask that question on the device at the time of the interchange, which would allow people to be added to appropriate networks as is supported by sites like Plaxo Pulse.
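The core of that exchange is just finding the networks both people belong to and, optionally, asking about each one on the device. Here is a rough sketch in Python of that step only. The `Profile` class, the `exchange_contacts` function, and the handles are hypothetical, and nothing here calls a real social network API or does any Bluetooth transfer; it only works out which connections would be created.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    """Hypothetical contact card carried on a handheld device."""
    name: str
    handles: dict[str, str]  # network name -> this person's account on it


def exchange_contacts(a, b, approve=lambda network: True):
    """Return (network, a_handle, b_handle) for every network both parties use.

    `approve` stands in for the optional on-device prompt: it is called once
    per shared network and can decline individual networks.
    """
    common = sorted(a.handles.keys() & b.handles.keys())
    return [(n, a.handles[n], b.handles[n]) for n in common if approve(n)]


# Made-up profiles for illustration.
alice = Profile("Alice", {"LinkedIn": "alice-l", "Facebook": "alice.f"})
bob = Profile("Bob", {"LinkedIn": "bob-l", "Plaxo": "bob-p"})

automatic = exchange_contacts(alice, bob)
declined = exchange_contacts(alice, bob, approve=lambda network: network != "LinkedIn")
print(automatic)
print(declined)
```

The default `approve` gives the automatic add; passing a different function models the variation where the question is asked at the time of the interchange.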

So, for all of you involved with social networking technology, here’s your idea to go run with and make it happen. I’ll be a happy consumer when it becomes a reality.

IT Conversations: OpenDNS

I listened to the latest Technometria podcast from IT Conversations yesterday, which was a conversation with David Ulevitch, CEO of OpenDNS. It was a great discussion about some of the things they’re trying to do to take DNS into the future. It certainly opened my eyes up to some things that can be done with a technology that every single one of us uses every day but probably takes for granted. Give it a listen.

Service Lifecycle Management

I attended a presentation from Raul Camacho from the SOA Solutions group of Microsoft Consulting Services yesterday. The talk provided some good details on some of the technical challenges associated with lifecycle events associated with services, such as the inevitable changes to the interface, adding new consumers, etc., but I actually thought it was pretty weak on the topic. I thought for sure that I had a blog post on the subject, but I was surprised to find that I didn’t. Some time ago (January of 2007, to be specific) I indicated that I would have a dedicated post, but the only thing I found was my post on product centered development versus project-centered development. While that post has many of the same elements, I thought I’d put together a more focused post.

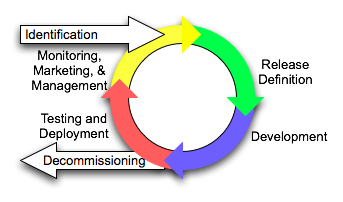

The first point, and a very important point, is that the service lifecycle is not a project lifecycle or just the SDLC for a service. It is a continuous process that begins when a service is identified and ends when the last version of a service is decommissioned from production. In between, you’ll have many SDLC efforts associated with each version of the service. I presented this in my previous post like this.

Raul’s talk gave a very similar view, again presenting the lifecycle as a circle with an on-ramp of service identification. The activities he mentioned in his talk were:

- Service Identification

- Service Development

- Service Provisioning

- Service Consumption

- Service Management

There are many similarities between these two. We both had the same on-ramp. I broke out the SDLC into a bit more detail with steps of release definition, development, testing, and deployment, while Raul bundled these into two steps of development and provisioning. We both had management steps, although I labeled mine as the triple-M of monitoring, marketing, and management. The one key difference was Raul’s inclusion of a step of service consumption. I didn’t include this in my lifecycle, since, in my opinion, service consumption is associated with the lifecycle of the consumer, not with the lifecycle of the service. That being said, service consumption cannot be ignored, and is often the event that will trigger a new release of the service, so I can understand why one may want to include it in the picture.

The thing that Raul did not mention in his talk, which I feel is an important aspect, is the role of the Service Manager. We don’t need Service Lifecycle Management if there isn’t a Service Manager to manage it. Things fall apart when there aren’t clear lines of responsibility for all things about a service. For example, a frequent occurrence is where the first version of a service is built by a team that is responsible for both the initial consumer and the service. If service ownership and management fall to this team, they are at best a secondary interest, because the team’s real focus was on the delivery of the service consumer, not of the service itself. A second approach that many organizations may take is to create a centralized service team. While this ensures that the ownership question will get resolved, it has scalability problems because centralized teams are usually created based upon technical expertise. Service ownership and management have more to do with the business capability being provided by the service than they do with the underlying technologies used to implement it. So, once again, there are risks that a centralized group will lack the business domain knowledge required for effective management. My recommendation is to align service management along functional domains. A service manager will likely manage multiple services, simply because there will be services that change frequently and some that change infrequently, so having one manager per service won’t make for an even distribution of work. The one thing to avoid, however, is to have the same person managing both the service and the consumer of a service. Even where there is very high confidence that a service will only have one consumer for the foreseeable future, I think it’s preferable to set the standard of separation of consumer from provider.

So, beyond managing the releases of each service, what does a service manager do? Well, again, it comes back to the three M’s: Monitor, Market, and Manage. Monitoring is about keeping an eye on how the service is behaving and being used by consumers. It has to be a daily practice, not one that only happens when a red light goes off indicating a problem. Marketing is about seeking out new consumers of the service. Whether it’s for internal or external use, marketing is a critical factor in achieving reuse of the service. If no one knows about it, it’s unlikely to be reused. Finally, the manage component is all about the consumer-provider relationship. It will involve bringing on new consumers found through marketing and communication which may result in a new version, provisioning of additional capacity, or minimally the configuration of the infrastructure to enforce the policies of the service contract. It will also involve discussions with existing consumers based on the trends observed through the monitoring activity. What’s bad is when there is no management. I always like to ask people to think about using an external service provider. Even in cases where the service is a commodity with very predictable performance, such as getting electricity into your house, there still needs to be some communication to keep the relationship healthy.

I hope this gives you an idea of my take on service lifecycle management. If there’s more that you’d like to hear, don’t hesitate to leave me a comment or send me an email.

Perception Management

James McGovern frequently uses the term “perception management” in his blog, and there’s no doubt that it’s a function that most enterprise architects have to do. It’s an incredibly difficult task, however. Everyone is going to bring some amount of vested interests to the table, and when there’s conflict in those interests, that can create a challenge.

A recent effort I was involved in required me to facilitate a discussion around several options. I was putting together some background material for the discussion, and started grouping points into pros and cons. I quickly realized, however, that by doing so, I was potentially making subjective judgements on those points. What I may have considered a positive point, someone else may have considered a negative point. If there isn’t agreement on what’s good and what’s bad, you’re going to have a hard time. In the end, I left things as pros and cons, since I had a high degree of confidence that the people involved had this shared understanding, but I made a mental note to be cautious about using this approach when the vested interests of the participants are an unknown.

This whole space of perception management is very interesting to me. More often than not, the people with strong, unwavering opinions tend to attract more attention. Just look at the political process. It’s very difficult for a moderate to gain a lot of attention, while someone who is far to the left or far to the right can easily attract it. At the same time, when the elections are over, the candidates typically have to move back toward the middle to get anything done. Candidates who are in the middle get accused of flip-flopping. Now, put this in the context of a discussion facilitator. The best facilitator is probably one who has no interests of his or her own, but who is able to see the interests of all involved, pointing out areas of commonality and contention. In other words, they’re the flip-floppers.

I like acting as a facilitator, because I feel like I’ve had a knack for putting myself in someone else’s shoes. I think it’s evident in the fact that you don’t see me putting too many bold, controversial statements up on this blog, but rather talking about the interesting challenges that exist in getting things done. At the same time, I really like participating in the discussions because it drives me nuts when people won’t take a position and just muddle along with indecision. It’s hard to participate and facilitate at the same time.

My parting words on the subject come from my Dad. Back in those fun, formative high school years as I struggled through all of the social dynamics of that age group, my Dad told me, “you can’t control what other people will do or think, you can only control your own thoughts or actions.” Now, while some may read this and think that this means you’re free to be an arrogant jerk and not give a hoot what anyone thinks about you, I took it a different way. First and foremost, you do have to be confident in your own thoughts and beliefs. This is important, because if you don’t have certain ideals and values on who you want to be, then you’re at risk for being someone that will sacrifice anything just to gain what it is you desire, and that’s not necessarily a good thing. Second, the only way to change people’s perception of you is by changing your own actions, not by doing the same thing the same way, and hoping they see the light. I can’t expect everyone to read the topics in this blog and suddenly change their IT departments. Some may read it and not get it at all. Some may. For those that don’t, I may need to pursue other options for demonstrating the principles and thus change their perceptions. At the same time, there will always be those who are set in their ways because they have a fundamentally different set of values. Until they choose to change those values, your energy is best spent elsewhere.

Multi-tier Agreements from Nick Malik

Nick Malik posted a great followup comment to my last post on service contracts. For all of you who just follow my blog via the RSS feed, I thought I’d repost the comment here.

The fascinating thing about service contract standardization, a point that you hit on at the end of your post, is that it is not substantially different from the standardization of terms and conditions that occurs for legal agreements or sales agreements in an organization.

I am a SOA architect and a member of my Enterprise Architecture team, as you are, but I’m also intimately familiar with solutions that perform Contract Generation from Templates in the Legal and Sales agreements for a company. My employer sells over 80% of their products through the use of signed agreements. When you run $3B of revenue, per month, through agreements, standardization is not just useful. It is essential.

When you sign an agreement, you may sign more than one. They are called “multi-tier” agreements, in that an agreement requires that a prior one is signed, in a chain. There are also “associated agreements” that are brought together to form an “agreement package”. When you last bought a car, and you walked out with 10 different signed documents, you experienced the agreement package firsthand.

These two concepts can be leveraged for SOA governance in terms of agreements existing in a multi-tier environment, as well as services existing in an ecosystem of agreements that are part of an associated package.

For example, you could have one of four different supporting agreements that the deployment team must agree to as part of the package. All four could rely on the same “common terms and taxonomy” agreement that every development and deployment team signs (authored by Enterprise Architecture, of course). And you could have a pair of agreements that influence the service itself: one agreement that all consumers must sign that governs the behavioural aspects of the service for all consumers, and another agreement that can be customized that governs the information, load, and SLA issues for each provider-consumer pair.

If this kind of work is built using an automated agreement management system, then the metadata for an agreement package can easily be extracted and consumed by automated governance monitoring systems. We certainly feed our internal ERP system with metadata from our sales agreements.

Something to think about…
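To picture the multi-tier idea from Nick’s comment, here is a small sketch in Python that works out a valid signing order for an agreement package, where each agreement lists the prior agreements it requires. The `Agreement` class, the `signing_order` function, and the agreement names are hypothetical illustrations of the concept and do not describe any actual agreement management system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Agreement:
    """Hypothetical agreement; `requires` names agreements to be signed first."""
    name: str
    requires: tuple[str, ...] = ()


def signing_order(package):
    """Order a package so every agreement follows the ones it requires."""
    by_name = {a.name: a for a in package}
    ordered, in_progress = [], set()

    def visit(name):
        if name in ordered:
            return
        if name in in_progress:
            raise ValueError(f"circular requirement involving {name!r}")
        in_progress.add(name)
        for prior in by_name[name].requires:  # KeyError if not in the package
            visit(prior)
        in_progress.discard(name)
        ordered.append(name)

    for agreement in package:
        visit(agreement.name)
    return ordered


# Illustrative package: a deployment agreement that relies on common terms,
# plus the pair of agreements that influence the service itself.
package = [
    Agreement("deployment-support", requires=("common-terms-and-taxonomy",)),
    Agreement("common-terms-and-taxonomy"),
    Agreement("service-behaviour-all-consumers"),
    Agreement("consumer-specific-load-and-sla"),
]

order = signing_order(package)
print(order)
```

The same metadata (names and required priors) is the kind of thing an automated governance monitoring system could consume.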

The Elusive Service Contract

In an email exchange with David Linthicum and Jason Bloomberg of ZapThink in response to Dave’s last podcast (big thanks to Dave for the shout-out and the nice comments about me in the episode), I made some references to the role of the service contract and decided that it was a great topic for a blog entry.

In the context of SOA Governance, my opinion is that the service contract is the “container” of policy that governs behavior at both design-time and run-time. According to Merriam-Webster, a contract is “a binding agreement between two or more persons or parties; especially : one legally enforceable.” Another definition from Merriam-Webster is “an order or arrangement for a hired assassin to kill someone” which could certainly have implications on SOA efforts, but I’m going to use the first definition. The key part of the definition is “two or more persons or parties.” In the SOA world, this means that in order to have a service contract, I need both a service consumer and a service provider. Unfortunately, the conversations around “contract-first development” that were dominant in the early days caused people to focus on one party, the service provider, when discussing contracts. If we get back to the notion of a contract as a binding agreement between two parties, and going a step further by saying that the agreement is specified through policies, the relationship between the service contract and design and run time governance should become much clearer.

First, while I picked on “contract-first development” earlier, the functional interface is absolutely part of the contract. Rather than being an agreement between designers and developers, however, it’s an agreement between a consumer and a provider on the structure of the messages. If I am a service provider and I have two consumers of the service, it’s entirely possible that I expose slightly different functional interfaces to those consumers. I may choose to hide certain operations or pieces of information from one consumer (which may certainly be the case where one consumer is internal and another consumer is external). These differences may have an impact at design-time, because there is a handoff from the functional interface policies in the service contract to the specifications given to a development team or an integration team.

Beyond this, however, there are non-functional policies that must be in the contract. How will the service be secured? What’s the load that the consumer will place on the service? What’s the expected response time from the provider? What are the notification policies in the event of a service failure? What are the implications when a consumer exceeds its expected load? Clearly, many of these policies will be enforced through run-time infrastructure. Some policies aren’t enforced on each request, but have implications for what goes on in a request, such as usage reporting policies. My service contract should state what reports will be provided to a particular consumer. This now implies that the run-time infrastructure must be able to collect metrics on service usage, by consumer. Those policies may ripple into a business process that orchestrates the automated construction and distribution of those usage reports.

Hopefully, it’s also clear that a service contract exists between a single consumer and a single provider. While each party may bring a template to the table, much as a lawyer may have a template for a legal document like a will, the specific policies will vary by consumer. One consumer may only send 10,000 requests a day, while another may send 10,000 requests an hour. Policies around expected load may then be enforced by your routing infrastructure for traffic prioritization, so that a significant deviation from the expected load by one consumer doesn’t starve out the others.
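To make the "one consumer, one provider, a collection of policies" idea concrete, here is a minimal sketch in Python. Everything in it is hypothetical and for illustration only: the `ServiceContract` class, the `PROVIDER_TEMPLATE` values, the policy names, and the consumer names are my assumptions, not any product's model. It shows a provider template being specialized per consumer, and a load check of the kind a routing layer might consult.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ServiceContract:
    """One contract per consumer-provider pair (hypothetical model)."""
    provider: str
    consumer: str
    policies: Dict[str, object] = field(default_factory=dict)

    def within_expected_load(self, observed_per_hour: int) -> bool:
        # A routing layer could use this to decide when to deprioritize traffic.
        return observed_per_hour <= self.policies["expected_requests_per_hour"]


# The provider's starting template; all values are illustrative assumptions.
PROVIDER_TEMPLATE = {
    "transport_security": "mutual-tls",
    "expected_response_ms": 500,
    "expected_requests_per_hour": 1000,
    "usage_report": "monthly",
}


def contract_from_template(provider: str, consumer: str, **overrides) -> ServiceContract:
    # Copy the template, then apply the policies negotiated with this consumer.
    policies = {**PROVIDER_TEMPLATE, **overrides}
    return ServiceContract(provider, consumer, policies)


# One consumer sends about 10,000 requests a day, the other 10,000 an hour.
batch = contract_from_template(
    "orders", "nightly-batch", expected_requests_per_hour=10_000 // 24
)
portal = contract_from_template(
    "orders", "web-portal", expected_requests_per_hour=10_000, usage_report="weekly"
)

# The same observed rate is a violation for one contract and normal for the other.
print(batch.within_expected_load(5000), portal.within_expected_load(5000))
```

The point of the sketch is only that the template is shared while the contract is not: each consumer gets its own copy of the policies, so changing the load agreed with one consumer never touches another's contract.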

The last comment I’d like to make is that there are definitely policies that exist outside of the service contract that influence design-time and run-time, so don’t think that the service contract is the container of all policies. I ran into this while I was consulting when I was thinking that the service contract could be used as a handoff document between the development team and the deployment team in Operations. What became evident was that policies that govern service deployment in the enterprise were independent of any particular consumer. So, while an ESB or XML appliance may enforce the service contract policies around security, they also take care of load balancing requests across the multiple service endpoints that may exist. Since those endpoints process requests for any consumer, the policies that tell a deployment team how to configure the load balancing infrastructure aren’t tied to any particular service contract. This had now become a situation where the service contract was trying to do too much. In addition to being the policies that govern the consumer-provider relationship, it was also trying to be the container for turnover instructions between development and deployment, and a single document couldn’t do both well.

Where I think we need to get to is a point where we’ve got some abstractions between these things. We need to separate policy management (the definition and storage of policies) from policy enforcement/utilization. Policy enforcement requires that I group policies for a specific purpose, and some of those policies may be applicable in multiple domains. Getting to this separation of management from enforcement, however, will likely require standards for how we define policies, and those standards simply don’t exist. Policies wind up being tightly coupled to the enforcement points, making it difficult to consume them for other purposes. Of course, the organizational culture needed to support this mentality is far behind the technology capabilities, so these efforts will be slow in coming, but as the dependencies increase in our solutions over time, we’ll see more and more progress in this space. To sum it up, my short term guidance is to always think of the service contract in terms of a single consumer and a single provider, and as a collection of policies that govern the interaction. If you start with that approach, you’ll be well positioned as we move forward.
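As a rough illustration of separating policy management from policy enforcement, the sketch below keeps policy definitions in one place and lets each enforcement point pull only the group it needs. The `PolicyStore` class, the domain labels, and the policy names are all hypothetical; no standard for this exists, which is exactly the gap described above.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class Policy:
    name: str
    value: object
    domains: FrozenSet[str]  # where this policy applies (hypothetical labels)


class PolicyStore:
    """Policy management: definition and storage only, no enforcement logic."""

    def __init__(self) -> None:
        self._policies: List[Policy] = []

    def define(self, name: str, value: object, domains: Iterable[str]) -> Policy:
        policy = Policy(name, value, frozenset(domains))
        self._policies.append(policy)
        return policy

    def for_domain(self, domain: str) -> List[Policy]:
        # Enforcement points ask for the group of policies relevant to them.
        return [p for p in self._policies if domain in p.domains]


store = PolicyStore()
# A single policy may be applicable in more than one domain.
store.define("require_tls", True, {"security-gateway", "deployment"})
store.define("max_response_ms", 500, {"monitoring"})
store.define("load_balance_strategy", "round-robin", {"deployment"})

gateway_policies = [p.name for p in store.for_domain("security-gateway")]
deployment_policies = [p.name for p in store.for_domain("deployment")]
print(gateway_policies, deployment_policies)
```

Here the deployment team's turnover instructions and the gateway's security rules are two views over the same store, rather than two documents that each try to own the policy.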

Why is Governance a four-letter word?


Recent articles/blogs from Michael Meehan and Dan Foody both emphasized the typically negative connotations associated with governance. Michael compared governance to getting a colonoscopy with an IMAX camera, while Dan pointed out that “governance is about defining the box that you’re not allowed to think outside.”

I won’t argue that most people have a very negative reaction to the term, but there’s a key point that’s missing in the discussion. Governance is about the people, policies, and processes put in place to obtain desired behaviors. Governance does not have to be about command and control. If your desired behavior is complete freedom to try anything and everything, clearly, you don’t want rigid command and control structures. If your goal is to be a very innovative company, but your command and control structures prevent you from doing so in a timely fashion, that’s not a problem with governance per se, but with the governance model you’ve chosen. While the United States has clear separation between church and state, the same can’t be said for many other countries in the world. If the desired behavior for those countries is strict adherence to religious principles, then clearly there will be some challenges in applying the United States’ governance model to those countries. It doesn’t mean those countries don’t need governance; it means they need a different style of governance.

One of my earliest posts on the subject of governance emphasized that the most important thing is that your governance model match your corporate culture. If it doesn’t, it’s not going to work. I’ll also add that your employees need to accept that corporate culture as well. If the people in the company don’t agree with the desired behaviors that the leaders have established, you’re going to have problems. We need to stop attacking governance, and instead educate our staff on the desired behaviors and why they’re important so that people will want to comply. Compliance must be the path of least resistance. That’s still governance, though. It’s just governance done right.