Latest SOA Insights Podcast

Latest SOA Insights Podcast

Dana Gardner has posted the latest episode of his Briefings Direct: SOA Insights series. In this episode, the panelists (Tony Baer, Jim Kobielus, Brad Shimmin, and myself) along with guest Jim Ricotta, VP and General Manager of Appliances at IBM, discuss SOA Appliances and the recent announcements around the BPEL4People specification.

This conversation was particularly enjoyable for me, as I’ve spent a lot of time understanding the XML appliance space in the past. As I’ve blogged about in the past, there’s a natural convergence between software-based intermediaries like proxy servers and network appliances. I’ve learned a lot when working with my networking and security counterparts in trying to come up with the right solution. The other part of the conversation on BPEL4People was also fun, given my interests in human computer interaction. I encourage you to give it a listen, and feel free to send me any questions you may have, or suggestions for topics you’d like to see discussed.

Revisiting Service Categories

Revisiting Service Categories

Chris Haddad of the Burton Group recently had a post entitled, “What is a service?” on their Application Platform Strategies blog. In it, he points out that “it is useful to first categorize services according to the level of interaction purpose.” He goes on to call out the following service categories: Infrastructure, Integration, Primitive (with a sub-category of Data), Business Application, and Composite.

First off, I agree with Chris that categorization can be useful. The immediate question, however, is useful for what? Chris didn’t call this out, and it’s absolutely critical to the success of the categorization. I’ve seen my fair share of unguided categorization efforts that failed to provide anything of lasting value, just a lot of debate that only showed there any many ways to slice and dice something.

As I’ve continued to think about this, I keep coming back to two simple goals that categorizations should address. The first is all about technology, and ensuring that is is used appropriately. Technologies for implementing a service include (but certainly aren’t limited to) Java, C#, BPEL, EII/MDM technologies, EAI technologies, and more. Within those, you also have decisions regarding REST, SOAP, frameworks, XML Schemas, etc. I like to use the term “architecturally significant” when discussing this. While I could have a huge number of categories, the key question is whether or not a particular category is of significance from an architectural standpoint. If the category doesn’t introduce any new architectural constraints, it’s not providing any value for this goal, and is simply generating unnecessary work.

The second goal is about boundaries and ownership. Just as important as proper technology utilization, and probably even more important as far as SOA is concerned, is establishing appropriate boundaries for the service to guide ownership decisions. A service has its own independent lifecycle from its consumers and the other services on which it depends. If you break down the functional domains of your problem in the wrong way, you can wind up making things far worse by pushing for loose coupling in areas where it isn’t needed, and tightly coupling things that shouldn’t be.

The problem that I see too frequently is that companies try to come up with one categorization that does both. Take the categories mentioned by Chris. One category is data services. My personal opinion is this is a technology category. Things falling into this category point toward the EII/MDM space. It doesn’t really help much in the ownership domain. He also mentions infrastructure services and business application services. I’d argue that these are about ownership rather than technology. There’s nothing saying that my infrastructure service can’t use Java, BPEL, or any other technology, so it’s clearly not providing guidance for technology selection. The same holds true for business application services.

When performing categorization, it’s human nature to try to pigeonhole things into one box. If you can’t do that, odds are you’re trying to do too much with your categories. Decisions on whether something is a business service or an infrastructure service are important for ownership. Decisions on whether something is an orchestration service or a primitive service are important for technology selection. These are two separate decisions that must be made by the solution architect, and as a result, should have separate categorizations that help guide those decisions to the appropriate answer.

Is Apple in the home like Microsoft in the enterprise?

Is Apple in the home like Microsoft in the enterprise?

I was just having a discussion with someone regarding Apple’s recovery over the last ten years and what the future holds for them when it dawned on me that there are parallels (sorry, no pun intended) between Microsoft’s efforts in the server-side space in the enterprise and Apple’s efforts in the home.

There’s no doubt that Apple’s strategy has always been about having end-to-end control of the entire platform, from hardware to software. There are advantages and disadvantages to this, with the clear disadvantage being market share, but the advantages being user experience. On the Microsoft side, when they entered into the enterprise market, and this still holds true today, it’s really about getting as much Microsoft software there as possible. They would like to own the software platform from end-to-end.

The parallel in this is that when Microsoft moved beyond the desktop, where they had nearly all of the market share, they suddenly had to deal with a heterogeneous environment rather than a homogenous one. Microsoft’s strategy is not one of integration, however, it is about replacement. Over time, they’ve had to yield to the fact that integration will always be necessary, and that many infrastructures are too well established to incur the cost of a migration to an all Microsoft environment. That being said, Microsoft would be happy to take your money and do it, and they still continue to position their products so that thought is in the back of your mind. I don’t know of anyone who would argue with the statement that Microsoft solutions work best in an all-Microsoft environment. That’s not to say that it doesn’t work really well in a heterogeneous environment, it simply says that if you want the best Microsoft has to offer, you have to go 100% Microsoft.

Now let’s talk about Apple. I’d argue that the state of the market for the integrated, intelligent home is around the same point (maybe a bit less mature) that enterprise infrastructures were when the whole middleware rage occurred in the 90’s. Companies were just starting to realize the potential and the importance of integrating their disparate systems. Today, consumers are just starting to realize the potential of integrating the technology in their houses. I’m not going to make any predictions about when it will become mainstream, as they’re usually wrong, but I do think it’s safe to say that the uptake is definitely increasing in slope rather than remaining flat. Apple is in a very similar position to Microsoft. The home is a heterogeneous environment. Apple works best in an all Apple environment. Will Apple take a path similar to Microsoft to where they integrate where they have to, but are really focused on getting a foot in the door and then it’s all about more Apple? Or will there be careful decisions on where the strategy is about integration and where the strategy is about extending the platform? To date, I think they’ve done the latter. We don’t see an Apple-branded TV, instead we have a set top box that talks to TVs.

The biggest factor may not be what Apple does, but what everyone else does. Microsoft continues to gain market share in the enterprise because integration of heterogeneous environment is still a painful exercise. As look as there is pain in integration, there’s always opportunity for platform-based approaches to gain ground. Integration in consumer technologies is certainly a different beast, as there are standards and a certain level of status-quo. It’s not a painful effort to hook up stereo components from multiple vendors. At the same time, however, it’s ripe for improvements in the experience, case in point, the 100+ button remote control associated with most receivers. Likewise, the standards change all too often. Back when digital camcorders came out, Apple had a big win with integration with iMovie that no one else had. Over the past 8 years however, the digital camcorder manufacturers have changed formats to the point where you can’t say whether a digital camcorder will work with iMovie or not. It just shows that if you don’t control the platform end-to-end, your entire strategy can fall apart quickly based upon those pieces outside of your control.

I think Apple’s taking a very careful approach on what problems to tackle and when. The one thing I’m sure of is that Apple’s presence in the consumer will make the next 10 years in the home very exciting. While one could argue that the availability of the Internet in the home started the process of the demand increasing at a faster pace, I also think you can that Apple’s products, more so than any other consumer products company, have enabled that pace to continue to increase.

Is a competition model good for IT?

Is a competition model good for IT?

Back in February, Jason Bloomberg of ZapThink posted a ZapFlash entitled “Competitive SOA.” I didn’t blog about it at the time, but this topic was brought back to the forefront of my mind by this post from Ian Thomas, with some follow-on commentary from Joe McKendrick.

While I’m not one to take one side or the other strongly, I must admit that I have significant reservations about a competition model, whether it is internal competition as suggested in the initial ZapThink article, or it is competition between IT and outside providers of services. First, let’s get the easy part of this out of the way. Part of Ian’s article is about simply running IT as a business and having good cost accounting. I’m certainly not going to argue about this. This being said, there’s a big difference between being a division or department of a company versus a supplier to a company.

I believe strongly that a customer/supplier relationship between IT and the end users of IT in the business is a bad thing, in most cases. If IT moves to exclusively to that model, the business leaders should clearly always be considering outsourcing IT completely. In doing so, it definitely sends a clear message that technology usage is not going to be a competitive advantage for this company. I believe that outsourcing can make sense for horizontal domains, where cost management is the most important concern.

The right model, in my opinion, is to have IT be part of the business, not a supplier to the business. To be part of a business, you need to be a partner, not a supplier. Brenda Michelson posted some excerpts from a Wall Street Journal interview with three CIO’s: Meg McCarthy of Aetna, Inc., Frank Modruson of Accenture Ltd., and Steve Squeri of American Express Co. Some great quotes from this:

Ms. McCarthy: At Aenta, the IT Organization is critical to enabling the implementation of our business strategy. I report to the chairman of our company and I am a member of the executive committee. In that capacity, I participate in all of the key business conversations/decisions that impact the company strategy and the technology strategy.

Mr. Squeri: I believe that over the next 10 years, the CIO will get more involved in the overall business strategy of the company and see their role expand in importance. The CIO will be increasingly called upon not only to translate business strategies into capabilities but to become even more forward-looking to determine what capabilities the business will need in the future.

The days of tech leaders as relationship managers and “order takers” will go by the wayside and they will be called upon to create and drive technology strategies that drive business capabilities.

It’s great to hear these leaders calling out how IT is becoming a partner, rather than a supplier. While our business leaders are certainly more tech-savvy than they have been in the past, there is still significant value in having people that specialize in technology adoption and utilization on your leadership team, just as you have people who specialize in sales, marketing, operations, etc.

Ian suggests letting “self interest flourish within the bounds set by the organisational context as long as it delivers cost-effective services but punish it by outsourcing where it doesn’t.” Cost reduction is just one factor in a complex decision. Holding the threat of outsourcing over IT may certainly in a more efficient operation, but applying those principles to areas where the decision shouldn’t be based on cost efficiency, but strategic impact to the business is a risky proposition. Let the business, which includes IT, decide what’s right to outsource and what isn’t. It shouldn’t be a threat or a punishment, but a decision that all parties involved agree makes good business sense.

Focus on the consumer

Focus on the consumer

The latest Briefings Direct: SOA Insights podcast is now available. In this episode, we discussed semantic web technologies, among other things. One of my comments in the discussion was that I feel that these technologies have struggled to reach the mainstream because we haven’t figured out a way to make it relevant to the developers working on projects. I used this same argument in the panel discussion at The Open Group EA Practitioners Conference on July 23rd. In thinking about this, I realized that there is a strong connection in this thinking and SOA. Simply put, it is all about the consumer.

Back when my day-to-day responsibilities were programming, I had a strong interest in human-computer interaction and user interface design. The reason for this was that the users were the end consumer of the products I was producing. It never ceased to amaze me how many developers designed user interfaces as if they were the consumer of the application, and wound up giving the real consumer (the end user) a very lousy user experience.

This notion of a consumer-first view needs to be at the heart of everything we do. If you’re an application designer, it doesn’t bode well if you consumer hate using your application. Increasingly, more and more choices for getting things done are freely available on the Internet, and there’s no shortage of business workers that are leveraging these tools, most likely under the radar. If you want your users to use your systems, the best path is make it a pleasant experience for them.

If you’re an enterprise architect, you need to ask who the consumers of your deliverable are? If you create a reference architecture that is only of interest to your fellow enterprise architects, it’s not going to help the organization. If anything, it’s going to create tension between the architecture staff and the developers. Start with the consumer first, and provide material for what they need. A reference architecture should be used by the people coming up with a solution architecture for projects. If your reference architecture is not consumable by that audience, they’ll simply go off and do their own thing.

If you are developing a service, you need to put your effort into making sure it can be easily consumed if you want to achieve broad consumption. It is still more likely today that a project will build both service consumer and service provider. As a result, the likelihood is that the service will only be easily consumable by that first consumer, just as that user interface I mentioned earlier was only easily consumed by the developer that wrote it.

How do we avoid this? Simple: know your consumer. Spend some time on understanding your consumer first, rather than focusing all of your attention on knowing your service. Ultimately, your consumers define what the “right” service is, not you. You can look at any type of product on the market today, and you’ll see that the majority of products that are successful are the ones that are truly consumer friendly. Yes, there are successful products that are able to force their will on consumers due to market share that are not considered consumer friendly, but I’d venture a guess that these do not constitute the majority of successful products.

My advice to my readers is to always ask the question, “who needs to use this, and how can I make it easy for them?” There are many areas of IT that may not be directly involved with project activities. If you don’t make that work relevant to project activities, it will continue to sit off on an island. If you’re in a situation where you’re seen as an expert in some space, like semantic technologies, and the model for using those technologies on project is to have yourself personally involved with those projects, that doesn’t scale. Your efforts will not be successful. Instead, focus on how to make the technology relevant to the problems that your consumers need to solve, and do it in a way that your consumers want to use it, because it makes their life easier.

Measuring Your Success

Measuring Your Success

As I’ve mentioned previously, I’m a member of the SOA Consortium. In a recent conference call for them, the subject of maturity models came up, and I discussed some of the efforts that I’ve done on MomentumSI’s Maturity Model. Anyway, the end result of the discussion was an email exchange between myself and Ron Schmelzer of ZapThink discussing the whole purpose of maturity models. In my prior life working in an enterprise, I’ve seen my fair share of maturity models on various subjects and I know there were certainly some that I dismissed as marketing fluff. At the same time, there were others that peaked my interest, and even more interesting, I also spent time creating my own maturity models. So what gives? Are they good or bad?

The purpose of a maturity model is pretty straightforward. It’s an attempt to try to quantify where you fall on some continuum. Obviously, there are many ways that this can be problematic, but it usually falls into two categories. First, you may not even think the subject at hand is worth measuring, therefore, a maturity model is a waste of time. Second, you may agree that subject is worth measuring, but you may disagree with the measuring scale. Frequent readers of my blog know that I’m no stranger to that category, as I started a bit of stir with some comments regarding the maturity model that David Linthicum had posted on his blog.

For this entry, the real question to ask is whether or not SOA adoption is something worth measuring? If you answer yes, then you’re going to need some yardstick to do so. I certainly think that any SOA adoption effort must include some way of assessing your efforts. If you don’t have this, how can you ever claim success? A maturity model is one way of assessing it. A roadmap with goals attached to points in time can certainly be another way of doing it, as well. I believe that you’ll need both. A maturity model approach is good because it’s not based upon reaching a point in time, it’s based on more qualitative factors, such as how you do things. You don’t become more mature with SOA buy purchasing a product, for example. You become more mature by using a product appropriately, consistently, successfully and in a repeatable fashion. The challenge with maturity models, however, is that they are often created as a unit of comparison, rather than as a unit of assessment. This has everything to do with the yardstick of measurement. During the SOA Consortium discussion, someone brought up that some maturity models aren’t valuable because an organization may not be concerned with reaching the upper levels of maturity. When you create a maturity model that is intended as a comparison tool, you need a yardstick that works for all. For organizations that are content at level one or level two on your scale, there may not be much value. If you focus solely on a maturity model as an assessment tool, step one is to establish criteria for the levels that make sense for your organization. If you do this, now you can use it as a means of measuring your progress on your journey. You won’t be able to use it to compare yourself to your competitors, but that’s okay, because you’re measuring your activities, not theirs.

Roadmaps are good as well, because they are very execution-oriented. While a maturity model may call out some lofty, qualitative goals, it doesn’t articulate a path to get you there. Roadmaps, on the other hand, consist of activities and deadlines. At the same time, roadmaps can run a risk of being too focused on the what and when, and not on the how.

The important takeaway from this is that if you’re going to attempt to adopt SOA, you need a way of measuring it. As many have said, SOA is a journey. It’s not one project. My recommendation it consider using both a maturity model and a roadmap with concrete milestones as tools for measuring your efforts. The roadmap will keep you focused on the execution, and the maturity model will ensure that you can capture the more qualitative aspects, such as service consumer/service provider interactions in the development lifecycle. What are your thoughts? Are maturity models a good things or a bad thing? If they can be either, what makes for a good maturity model versus a lousy one?

Acronym Soup

Acronym Soup

The panel discussion I was involved with at The Open Group Enterprise Architecture Practitioner’s Conference went very well, at least in my opinion. We (myself, our moderator Dana Gardner, Beth Gold-Bernstein, Tony Baer, and Eric Knorr) covered a range of questions on the future of SOA, such as when will we know we’re there, will we still be discussing it 5 years from now or will it be subsumed by EA as a whole, etc.

In our preparations for the panel, one of the topics that was thrown out there was how SOA will play with BPM, EDA, BI, etc. I should point out that our prep call only set the basic framework of what would be discussed, we didn’t script anything. It was quite difficult biting my tongue on the prep call as I wanted to jump right into the debate. Anyway, because it didn’t get the depth of discussion that I was expecting, I thought I’d post some of my thoughts here.

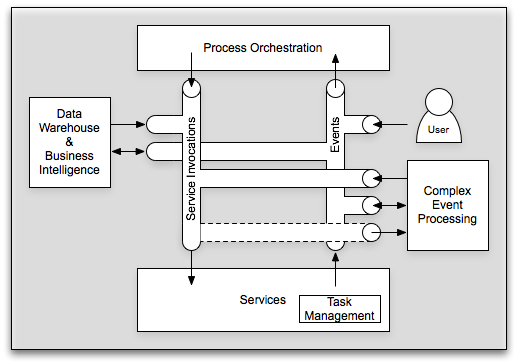

I’ve previously posted on the integration between SOA, BPM, Workflow, and EDA, or probably better stated, services, processes, and events. There are people who will argue that EDA is simply part of SOA, I’m not one of them, but that’s not a debate I’m looking to have here. It’s hard to argue that there are natural connections between services, processes, and events. I just recently posted on BI and SOA. So, it’s time to try to bring all of these together. Let’s start with a picture:

In its simplest form, I still like to begin with the three critical components: processes, services, and events. Services are explicitly invoked by sending a service invocation message. Processes are orchestrated through a sequence of events, whether human-generated or machine generated. Services can return responses, which in essence are a “special” event directed solely at the requestor, or they can publish events available for general listening. So, we’ve covered SOA, BPM, EDA, and workflow. To bring in the world of EDW (Enterprise Data Warehouse), BI (Business Intelligence), CEP (Complex Event Processing), and even BAM (Business Activity Monitoring, although not shown on the diagram), the key is using these messages for purposes other than which they were intended. CEP looks at all messages and is able to provide a mechanism for the creation of new events or service invocations based upon an analysis of the message flow. Likewise, take these same messages and let them flow into your data warehouse and allow your business intelligence to perform some complicated analytics on them. You can almost view CEP as a sort of analytical engine operating on a small window, while business intelligence can act as the analytical engine operating on a large window. Just with CEP, your EDW and BI system can (in addition to report) generate events and/or service invocations. Simply put, all of the technologies associated with all of these acronyms need to come together in a holistic vision. At the conference, Joe Hill from EDS pointed out that when many of these technologies solved 95% of the problem they were brought in for. Unfortunately, when your problem space is broadened to where it all needs to integrate, the laws of multiplication no longer apply. That is, if you have two solutions that solved 95% of their respective problems, they don’t solve 0.95 * 0.95 = 90.25% of the combined problem. Odds are that combined problem falls into the 5% that neither of them solved on their own.

It is the responsibility of enterprise architecture to start taking the broader perspective on these items. The bulk of the projects today are still going to be attacking point problems. While those still need to be solved, we need to ensure that these things fit into a broader context. I’m willing to bet that most service developers have never given thought to whether the service messages could be incorporated into a data warehouse. It’s just as unlikely that they’re publishing events and exposing some potentially useful information for other systems, even where their particular solution didn’t require any events. So, to answer the question of whether SOA will be a term we use 5 years from now, I certainly hope we’re still using it, however, I hope that it’s not still as some standalone initiative distinct from other enterprise-scoped efforts. It all does need to fall under the umbrella of enterprise architecture, but that doesn’t mean that the EA still doesn’t need to be talking about services, events, processes, etc.

Update: I redid the picture to make it clearer (hopefully).

Open Group EA 2007: Andres Carvallo

Open Group EA 2007: Andres Carvallo

Andres Carvallo is the CIO for Austin Energy. He was just speaking on how the Internet has changed the power industry. He brought up the point that we’ve all experienced, where we must call our local power company to tell them that the power is out. Take this in contrast to the things you can do with package delivery via the Internet, and it shows how the Internet age is changing customer expectations. While he didn’t go into this, my first reaction to this was that IT is much like the power company. It’s all too often that we only know a system is down because an end user has told us so.

This leads to discussion of something that is all too frequently overlooked, which is the management of our solutions. Visibility into what’s going on is all too often an afterthought. If you exclusively focus on outages, you’re missing the point. Yes, we do want to know when the .001% of downtime occurs. What makes things more important, however, is an understanding of what’s going on the other 99.999% of the time. It’s better to refer this as visibility rather than monitoring, because monitoring leads to narrow thinking around outages, rather than on the broader information set.

Keeping with the theme of the power industry, clearly, Austin Energy needs to deal with the varying demands of the consumers of their product. That may range from some of the major technology players in the Austin area versus your typical residential customer. Certainly, all consumers are not created equal. Think about the management infrastructure that must be in place to understand these different consumers. Do you have the same level of management in your IT solutions to understand different consumers of your services?

This is a very interesting discussion, especially given today’s context of HP’s acquisition of Opsware (InfoWorld report, commentary/analysis from Dana Gardner and Tony Baer).

Open Group EA 2007: Joe Hill

Open Group EA 2007: Joe Hill

Joe Hill from EDS is on the stage now. The title of his talk is on SOA and Outsourcing, although it’s far more broad than just an outsourcing discussion. It would be nice to have a copy of his slides, as he had some really good ways of visualizing SOA adoption along a timeline and the differences on how it should be approached. For example, using a chart reminiscent of Clayton Christensen’s “The Innovator’s Solution”, he showed that at the early phases where we have “performance undersupply,” the typical solution may need to be more tightly coupled, fewer vendors, and more use of proprietary techniques. The discussion around SOA is typically on the right hand side of the chart, emphasizing loose coupling, multiple vendors, open standards, etc. The problem that I felt Joe was emphasizing was that you need to recognize where you are and apply the right techniques at the right time, rather than on focusing too heavily on the end state on the right hand side of the diagram.

Open Group EA 2007: Rob High

Open Group EA 2007: Rob High

Rob High of IBM is on the stage now with a presentation titled “SOA Foundation” which runs the gamut of topics associated with SOA. One thing he took a lot of time to discuss was the notion of coherency and the importance of semantics to SOA. It was nice to hear some emphasis on this point, as I believe that an understanding of the semantics is a critical component in moving from SOA applied to applications to SOA applied to the enterprise. Just as with the rest of SOA, make sure you understand the semantics first before throwing any semantic technology at it. While there are evolving specs and tools in this space, none of that will do you any good if don’t first understand the semantics themselves and how that information can be leveraged in your project efforts.

Open Group EA 2007: David Linthicum

Open Group EA 2007: David Linthicum

I’m here at The Open Group Enterprise Architecture Practitioner’s Conference, and will try to blog where I can on the presentations. Right now, I’m sitting in David Linthicum’s keynote, which is on EA & SOA. He had an interesting quote, which was:

Five years from now, we won’t be talking about SOA… It will all be folded back into EA.

There’s some truth to this, but there’s also a lot of risk. One of the issues with EA (and many other efforts within IT), is that it can become disconnected from the project efforts that are going on. The term most frequently used with this is “ivory tower” where enterprise architects are simply viewed as paper pushers that know how to create a lot of PowerPoint slides. One of the side benefits of SOA is that it relates very well to the world of the development projects, with “service” being the point of common language. The enterprise architects may be modeling the enterprise in terms of the services that are needed, and projects can now utilize these models in their project architecture. This is easier said than done, however, as you need to ensure that the reference material containing these models (e.g. a reference architecture) is consumable at the project level. If the only group that can understand your models are fellow enterprise architects, that’s a problem.

So, will SOA be folded into EA as a whole? If EA can ensure that the artifacts it creates are consumable at the project level, then absolutely, SOA will be folded into EA. If EA is not creating artifacts that are consumable at the project level, then we have a problem. You’ll likely still have tension between EA and SOA, and likely not achieve the levels of success that organizations that have successfully bridged the world of EA and the project space. This doesn’t apply solely to SOA. This applies equally to any architectural domain. You may have information architects working on canonical or enterprise data models, performing data quality analysis, etc., but if it doesn’t find a way to become relevant to project efforts, it will exist on an island with continual struggles to achieve the objectives that were set out.

Blogging in the Corporate World

Blogging in the Corporate World

SearchCIO.com recently had an article discussing blogging in the corporate world. I recently discussed the use of blogs and wikis inside the enterprise, this entry will focus on blogs that are exposed to outside world.

As James McGovern has lamented in the past, and Brandon Satrom recently, there really aren’t a lot of enterprise architects working in typical corporate IT blogging. By typical corporate IT, I mean at companies whose primary business is not technology. I don’t know if this carries over to other roles within IT, but I suspect it does. So, is this is a bad thing? A good thing? Some companies may have formal policies regarding blogging, some may not. It can be a complicated issue, however, I do think that there is one basic factor that comes into play, and that is trust. For the purpose of this conversation, I’m going to restrict it to where people are blogging about the domain in which they work. So, if you’re an enterprise architect, this is relevant if you want to blog about enterprise architecture. If you want to blog about your favorite TV show, less of this discussion applies.

From the perspective of the blogger, what are the reasons you’d want to blog? For me personally, my blog served two purposes. First and foremost, there are constantly ideas that go fleeting through my brain, and I wanted to use blogging as a way to record my thoughts. Secondly, I wanted to share those thoughts with others and see what conversation came out of it. If others found it valuable, that’s great, however, I don’t go around trying to look for topics that I know may have high market value. This may not be the case for others. Some may go out trying to capture the spotlight as a primary goal. There are those that argue that everyone who blogs is seeking the spotlight, but I don’t believe that to be the case. One thing that is true, however, is that if you blog, you are building a brand, whether you planned to or not. This is where things can get complicated.

Most companies are usually very sensitive about how they are perceived in their respective marketplace, regardless of whether they have a formal branding or marketing initiative or not. The real danger comes when someone can make an association between you and a company, and as a result, make associations between the two. Take the current situation with Michael Vick. While the NFL and Atlanta Falcons had no control over his off the field activities, there are now significant problems with not only his personal brand, but the brand of the NFL and the Atlanta Falcons. While the blogosphere isn’t usually under the same level of scrutiny as professional athletes, the impacts can be very similar. As an employee of a company, you have to realize that as a privately held (even if publicly traded) institution, you must abide by their rules, even if it is activities that you are doing with your own equipment, and on your own time. I’m not a lawyer, but most organizations do have some form of personal conduct policy that can apply. If you’re not officially blogging for your company, it’s best to try to keep the worlds as separate as possible.

For me, I looked upon my topics like a water cooler conversation. If I felt that I could discuss a subject at a local user group meeting, or over lunch with colleagues at a conference, or even on a discussion group (keep in mind that Google can typically find all of those discussion group postings…), then those topics would be okay for a blog. Specifics about the internals of a company were (and still are) strictly off limits. I personally believe that we can learn a lot from our colleagues at other enterprises, and there’s far more we can share without comprising any competitive advantage, than there is at risk. For example, take a blog on something like service versioning. There really shouldn’t be any concern about competitive advantage when discussing something like this, something every enterprise will have to deal with, etc.

It is unlikely that your company has a policy that makes these things clear cut. It’s especially difficult when the company may come back with the question, “What’s in it for us?” It’s hard to put value on something like blogging and it will ultimately come down from trust. If you’re going to blog, get quoted in a magazine, speak at a conference, etc. there has to be a level of trust. The company has to trust that you will represent the company well, even if you’re only speaking about them in generalities. The fact is, you are part of that company and will be perceived as a representative of that company. If you’re a shock jock at night, that doesn’t bode well if someone finds out that your day job is with a very conservative company. Even if there’s no mention anywhere in your blog of the company you work for, it’s not very hard to figure it out. For example, I know where James McGovern works, even though you won’t find it on his blog.

The short of this is that there has to be a level of trust, and probably more so on the side of your employer, which is an unfortunate fact of our culture today. As I’ve mentioned, there’s a certain fear of airing your dirty laundry. As anyone who has worked in multiple enterprises know, there are plenty of things that need improvement, and many of them probably have nothing to do with competitive advantage. A little bit of transparency and a little bit of open discussion can go a long way in building trust. That being said, you’re better off focusing on building trust inside the enterprise first. If you want to open up a conversation on a topic, why not open it up inside the enterprise first and let your colleagues have a crack at it, even if it may not be their responsibility? Good productive communication will build trust, which in turn, will make the company more likely to trust that people know what can not be communicated to the outside world and what can.

Another great Technometria

Another great Technometria

This time the conversation is with Scott Berkun, author of “The Myths of Innovation.” To give you an idea on how entertaining this Technometria conversation was, Phil Windley’s two co-hosts, Ben Galbraith and Scott Lemon, both went online to Amazon during the call and purchased Scott’s book. The discussion focused on the human element of software development and things that contribute to success with innovation. Here’s the link to the IT Conversations page for it.

Internet Service Bus?

Internet Service Bus?

Joe McKendrick’s blog alerted me to Microsoft’s efforts around an “Internet Service Bus,” essentially a hosted version of BizTalk. Details are available in this Redmond Developer News article from Chris Kanaracus.

After reading both Joe’s blog and Chris’ article, this initially didn’t make much sense to me. I did the smart thing, and didn’t write a knee jerk reaction blog (only because I received a phone call), so now I’ve had time to ponder it a bit.

When I read Joe’s blog, my first thought was whether this would be a competitor to hosted integration players like Sterling Commerce. My limited understanding of one of Sterling’s offerings was essentially that it outsourced the mapping effort normally associated with B2B exchanges. They act as an external intermediary, connecting to your partners and then providing you with data in your preferred format(s) and integration technology. I then read Chris’ article and realized that this wasn’t at all what they were talking about. Quoting from a Microsoft whitepaper, Chris’ article stated:

“For example, when school is closed due to weather, a workflow kicks off. As part of that workflow, the system can notify parents, teachers, and bus drivers, as well as food service vendors, snow plow operators, and local police, using the ISB to traverse networks across these disparate organizations.”

Initially when I read this, I had my enterprise hat on and thought, “Why does this need to be hosted?” There’s absolutely no reason that I’d want hosted orchestration of services that exist inside my firewall, and there’s no reason that an internal orchestration engine can’t access services hosted outside of the firewall. Finally, however, the light bulb has come on, and it has to do with the specific example Microsoft used: a school.

I’ve previously blogged about SOA for schools (here and here). My father-in-law is a grade school principal, so I have the occasional conversation about the use of technology in school administration. Your average school is not going to be able to invest in BizTalk or any other orchestration engine, yet, as the example calls out, there’s certainly opportunities to apply orchestration. What this strategy really is a competitor to is something like Yahoo Pipes. There’s probably a broad market where significant efficiency gains can be made, but the cost of the infrastructure is not worth the investment. Is a school really going to buy BizTalk to automate a workflow that maybe occurs once or twice a year (depending on where you live)? No. This seems much better suited to a pay-per-use model. In this manner, the provider of the hosted workflow can have many, many workflows, any one of which is used infrequently at best. Think of it as the long tail of workflow. This model actually makes some sense to me. What are your thoughts?

Finally, it needs a new name. Internet Service Bus would be a disaster, because it’s not a bus, and it conveys this image of all service traffic on the Internet having to flow through it. Hosted integration doesn’t capture it either, because that’s already taken by the Sterling Commerce’s of the world. What we’re really talking about is Hosted Workflow or Hosted Orchestration. The latter would make a very bad acronym, however, just ask Don Imus. So, I’ll call it Hosted Workflow.

Looking back on SOA from the future

Looking back on SOA from the future

Joe McKendrick asked the question in his blog, “How will we look back on SOA in 2020?” This is actually something I’ve been thinking about (well, maybe not 2020, but certainly the future) in preparation for the panel discussion at The Open Group EA Practitioners Conference next week.

One of the things that I’m very interested in is the emerging companies of today, and what their technology will look like in the future. So many companies today are having to deal with existing systems, focusing on activities such as service enablement. Largely, these exercises are akin to turning a battleship on a dime. It’s not going to happen quickly. While I’m fully confident that in 2020 many of these companies will have successfully turned the battleship, I think it will be even more interesting to see what companies that had a blank slate look like.

Dana Gardner recently had a podcast with Annrai O’Toole of Cape Clear Software, where they discussed the experiences of Workday, a Cape Clear customer. Workday is a player in the HR software space, providing a SaaS solution, in contrast to packaged offerings from others. Workday isn’t the company I’d like to talk about however. The companies that I’m more interested in are the ones that are Workday customers. Workday is a good example for the discussion, because in the podcast, Dana and Annrai discuss how the integration problems of the enterprise, such as communication between HR and your payroll provider (e.g. ADP), are now Workday’s problems. By architecting for this integration from the very beginning, however, Workday is at a distinct advantage. I expect that emerging companies with a clean IT slate will likely leverage these SaaS solutions extensively, if for no other reason than the cost. They won’t have a legacy system that may have been a vertical solution 20 years ago, but is now a horizontal solution that looks like a big boat anchor on the bottom line.

One thing that I wanted to call out about the Workday discussion was their take on integration with third parties. As I mentioned, they’ve made that integration their problem. This is a key point that shouldn’t be glossed over, as it’s really what SOA is all about. SOA is about service. The people at Workday recognized that their customers will need their solution to speak to ADP and other third parties. They could easily have punted and told their customers, “Sorry, integration with that company is your problem, you’re the one who chose them.” Not only is that lousy service, but it also results in a breakdown of the boundaries that a good SOA should establish. In this scenario, a customer would have to jury rig some form of data extract process and act as a middleman in an integration that would be much better suited without one. You have potentially sensitive data flowing through more systems, increasing the risk.

The moral of this story is that there are very few times in a company’s history where the technology landscape is a blank slate. Companies that are just starting to build their IT landscape should keep this in mind. I’ve blogged in the past on how the project-based culture where schedule is king can be detrimental to SOA. An emerging company is probably under even more time-to-market pressure, so the risk is even greater to throw something together. If that’s the case, I fear that IT won’t look much different in 2020 than it does today, because the way we approach IT solutions won’t have changed at all. Fortunately, I’m an optimist, so I think things will look significantly different. More on that at (and after) the conference.