Looking back on SOA from the future

Joe McKendrick asked the question in his blog, “How will we look back on SOA in 2020?” This is actually something I’ve been thinking about (well, maybe not 2020, but certainly the future) in preparation for the panel discussion at The Open Group EA Practitioners Conference next week.

One of the things that I’m very interested in is the emerging companies of today, and what their technology will look like in the future. So many companies today are having to deal with existing systems, focusing on activities such as service enablement. Largely, these exercises are akin to turning a battleship on a dime. It’s not going to happen quickly. While I’m fully confident that in 2020 many of these companies will have successfully turned the battleship, I think it will be even more interesting to see what companies that had a blank slate look like.

Dana Gardner recently had a podcast with Annrai O’Toole of Cape Clear Software, where they discussed the experiences of Workday, a Cape Clear customer. Workday is a player in the HR software space, providing a SaaS solution, in contrast to the packaged offerings from others. Workday isn’t the company I’d like to talk about, however. The companies that I’m more interested in are Workday’s customers. Workday is a good example for the discussion because, in the podcast, Dana and Annrai discuss how the integration problems of the enterprise, such as communication between HR and your payroll provider (e.g. ADP), are now Workday’s problems. By architecting for this integration from the very beginning, however, Workday is at a distinct advantage. I expect that emerging companies with a clean IT slate will leverage these SaaS solutions extensively, if for no other reason than the cost. They won’t have a legacy system that may have been a vertical solution 20 years ago but is now a horizontal solution that looks like a big boat anchor on the bottom line.

One thing that I wanted to call out about the Workday discussion was their take on integration with third parties. As I mentioned, they’ve made that integration their problem. This is a key point that shouldn’t be glossed over, as it’s really what SOA is all about. SOA is about service. The people at Workday recognized that their customers will need their solution to speak to ADP and other third parties. They could easily have punted and told their customers, “Sorry, integration with that company is your problem; you’re the one who chose them.” Not only is that lousy service, but it also results in a breakdown of the boundaries that a good SOA should establish. In this scenario, a customer would have to jury-rig some form of data extract process and act as a middleman in an integration that would be much better off without one. You have potentially sensitive data flowing through more systems, increasing the risk.

The moral of this story is that there are very few times in a company’s history when the technology landscape is a blank slate. Companies that are just starting to build their IT landscape should keep this in mind. I’ve blogged in the past on how the project-based culture, where schedule is king, can be detrimental to SOA. An emerging company is probably under even more time-to-market pressure, so the temptation to throw something together is even greater. If that happens, I fear that IT won’t look much different in 2020 than it does today, because the way we approach IT solutions won’t have changed at all. Fortunately, I’m an optimist, so I think things will look significantly different. More on that at (and after) the conference.

Dilbert Governance, Part 2

I’ll be giving a webinar on Policy-Driven SOA Infrastructure with Mike Masterson from IBM DataPower next week on Thursday at 1pm Eastern / 10am Pacific, and I could probably find a way to tie today’s Dilbert into it. Give it a read.

As for the webinar, it will discuss themes that I’ve previously blogged about here, including separating non-functional concerns out as policies, enforcing those policies through infrastructure, and the importance of this approach to SOA. Mike will cover the role of SOA appliances in this domain. You can register for it here.

BI, SOA, and EDA

InfoQ recently posted an article on Business Intelligence (BI) and SOA, and it made some good points in the latter half. Hopefully readers get there, however, because the initial discussion around ETL and getting at data originally gave me the impression that the article wasn’t going to get to the really important factors in the relationship between SOA and BI. It began the discussion by stating:

The road to BI usually starts with extract, transform, and load (ETL).

I don’t have any disagreement with that statement; however, the next part of the article goes on to talk about the potential problems SOA introduces for ETL. In essence, it states that because SOA is all about isolating internal data behind interfaces, it becomes problematic for BI systems rooted in ETL, which need to intimately understand the data. The article seems to suggest that companies may try to use SOA, rather than an ETL approach, to pull data into the data warehouse. This would be a mistake, in my opinion. If the existing ETL processes work just fine, why change them? Service usage is typically more about transactional processing than about the bulk data movement associated with ETL.

Where the discussion gets interesting is when we start looking at the future of BI. There are two areas for improvement. The first is in the timeliness of the information. BI processing that is dependent on ETL processing is typically going to happen as part of some scheduled job that occurs each night. The second is in the information itself.

To address the first area, a move toward an event-driven architecture for pushing information into the business intelligence system is necessary. The article does a very good job in addressing this, and even correctly calls out that advancing technologies in event stream processing such as CEP (complex event processing) will play a key role in this.

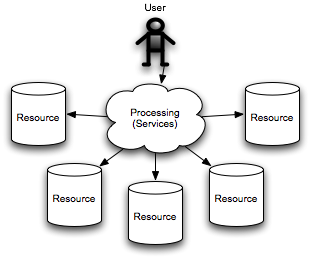

The second area is not as clearly addressed in the article. I am by no means an ETL and BI expert, but my limited experience with it indicated that it was largely based on the end results of processing. That is, for any given processing chain, you typically have nodes that represent and endpoint where that particular processing chain ends. Users typically begin the chain, and some resource (relational database, content management system, file system) typically ends the chain, if there’s any permanent record associated with the processing. Graphically, it looks like this:

Today, BI and its associated ETL jobs may focus only on relational databases that make a permanent record of a transaction. That’s only one resource in the chain. What about all of the intermediate steps along the way? Think of what Amazon does: it looks not only at your purchase history but also at the things you (and others) browse for, whether or not you actually purchase them. Arguably, this represents a far better picture of your interests than your orders do.

This is where I feel SOA can really provide benefits. Adopting SOA should result in more individual components for any given solution, but by accessing those components in a standards-based manner, we’re still able to manage the associated increase in complexity. If we have more components, we have greater visibility into the information flowing in and out. Employ some standard service management technology, and you can easily extract the message flows in real time and centrally store them for extraction into your BI environment. Or, if you’re ready to do it all in real time, those same tools can simply publish those service messages to an event bus for real-time extraction into your BI environment. This is where I think the potential lies. It’s not about getting at the same old information in an easier way; it’s about getting new information that can yield better intelligence. Consistency of service schemas is important to SOA, and if done right, incorporating this wealth of information sources into your BI system shouldn’t become a complex nightmare. This is yet another example of how we need to think outside the box and realize that information can always be used in novel ways beyond the immediate consumer-provider interaction driven by the project at hand.
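To make the idea of tapping service traffic a little more concrete, here’s a minimal Python sketch. It assumes an in-memory bus standing in for real messaging or service management infrastructure; `EventBus`, `tap`, `InterestCounter`, and the `catalog.view` service are all hypothetical names for illustration. The point it shows is that an intermediary can publish every request/response pair, and a BI consumer can pick up "browse" activity that never reaches the orders database.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class EventBus:
    """Hypothetical in-memory stand-in for an event bus (real systems would use messaging)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(message)


def tap(service_name: str, service: Callable[[dict], dict], bus: EventBus) -> Callable[[dict], dict]:
    """Wrap a service so each request/response pair is also published to the bus."""

    def wrapped(request: dict) -> dict:
        response = service(request)
        bus.publish("service.messages",
                    {"service": service_name, "request": request, "response": response})
        return response

    return wrapped


class InterestCounter:
    """Toy BI consumer: counts product views per customer, purchased or not."""

    def __init__(self) -> None:
        self.views: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))

    def on_message(self, message: dict) -> None:
        if message["service"] == "catalog.view":
            req = message["request"]
            self.views[req["customer"]][req["product"]] += 1


bus = EventBus()
bi = InterestCounter()
bus.subscribe("service.messages", bi.on_message)

view_product = tap("catalog.view", lambda req: {"status": "ok"}, bus)
view_product({"customer": "c1", "product": "blender"})
view_product({"customer": "c1", "product": "blender"})
view_product({"customer": "c1", "product": "iphone"})
print(dict(bi.views["c1"]))  # {'blender': 2, 'iphone': 1}
```

The wrapper leaves the service itself untouched, which is the property that matters here: visibility comes from the infrastructure in the middle, not from changing each provider.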

Turning Bottom-Up Upside Down

Joe McKendrick recently had a post regarding bottom-up approaches to SOA in response to this post from Nick Malik of Microsoft, which was then followed up with another post from Nick. I was going to post solely on this discussion, when along came another post from James McGovern discussing bottom-up and top-down initiatives. While James’ post didn’t mention any of the others, it certainly added to the debate. Interestingly, in his post, James put SOA in a “bottom-up” list. Out of this, it seems like there are at least three potential definitions of what bottom-up really is.

Joe’s discussion around “bottom-up” has a theme of starting small. In his follow-up post, Nick actually concurs with using this approach to SOA; however, he points out that this approach is not what he considers to be “bottom-up.” For the record, I agree with Nick on this one. When I think of bottom-up in the context of SOA, I think of individual project teams building whatever services they want. This puts the organization at great risk of simply creating a bunch of services. While you’re guaranteed that your services will have at least one consumer by identifying them at the time they’re needed within a project, this is really the same way we’ve been building systems for years. If you change how you build the solution from the moment the service is identified, such as breaking it out as its own project with an appropriate scope increase to address broader concerns, you can still be successful with this approach, but it’s not easy. When I think of top-down, like Nick, it also starts to feel like a boil-the-ocean approach, which probably carries just as big a risk of failure.

Anyway, to bring James’ post into the discussion: he gave a list of three “top-down” IT initiatives and three “bottom-up” initiatives. The top-down ones were outsourcing, implementing CMMI and/or PMI, and Identity Management within a SOX context. The bottom-up initiatives were SOA, Agile Methods, and Open Source. By my interpretation, James’ use of the terminology refers more to the driving force behind the effort when mapped to the organization chart. His top-down efforts are likely coming from the upper echelons of the chart, while the bottom-up efforts (although SOA is debatable) are driven from the lower levels of the chart. I can certainly agree with this use of the terminology as well.

So is there any commonality? I think there is, and it is all about scope. The term top-down is typically associated with a broad but possibly shallow focus. The term bottom-up is typically associated with a narrow but possibly deep focus. What’s best? Both. There’s no way you can be successful with solely a bottom-up or top-down approach. It’s like making financial decisions. If you live paycheck to paycheck without any notion of a budget, you’re always going to be struggling. If you develop an extensive budget down to the penny, but then never refer to it when you actually spend money, again, you’re always going to be struggling. Such is the case with SOA and Enterprise Architecture. If you don’t have appropriate planning in place to actually make decisions on what projects should be happening and how they should be scoped, you’re going to struggle. If the scope of those projects is continually sacrificed to meet some short-term goal (typically some scheduling constraint), again, you’re going to struggle. The important thing, however, is that there does need to be a balance. There aren’t too many companies that can take a completely top-down approach. Even startups with a clean IT slate are probably under such time-to-market constraints that many strategic technology goals must be sacrificed in favor of the schedule. It’s far easier to fall into the trap of being overweighted on bottom-up efforts. To be successful, I think you need both. Enterprise architects, by definition, should be concerned about breadth of coverage, although not necessarily breadth of technology, as specializations do exist, such as an Enterprise Security Architect. An application architect has narrower breadth than an enterprise architect, but probably more depth. A developer has an even narrower breadth, but significant depth. The combination of all of them is what will make efforts successful both in the short term and in the long term.

Blended iPhone

Okay, I’m an admitted iPhone lover. For those of you that are tired of me talking about it, you can get a good laugh courtesy of Tom Dickson, BlendTec blenders, and their “Will it Blend?” series. Go watch it now!

Blog is now iPhone friendly

Courtesy of some PHP scripting advice posted by Mitch Cohen, I’ve now made my blog a bit more iPhone friendly. Obviously, it could be loaded previously, but you did need to do some zooming and panning. Now, if the blog detects that the request is from an iPhone, it applies a new style sheet. The sidebar information is pushed to the bottom, and the entire width of the page is used for content. Furthermore, by setting a viewport attribute, the iPhone knows the page is only 320 pixels wide, rather than showing it zoomed out. While this was purely a learning exercise for me, it does make things easier to read.
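The blog itself used PHP for this; purely to illustrate the logic, here’s a rough Python sketch of the same idea, not the actual script. The stylesheet paths and function names are hypothetical, and the detection is a simple substring check on the `User-Agent` header. The `<meta name="viewport" content="width=320" />` tag is the standard way to give the iPhone browser a layout width.

```python
# Hypothetical stylesheet paths; the real blog's PHP and file names may differ.
DEFAULT_CSS = "/style.css"
IPHONE_CSS = "/iphone.css"


def is_iphone(user_agent: str) -> bool:
    # Simple substring check on the User-Agent header; user-agent sniffing
    # is inherently fragile, so treat this as a rough heuristic.
    return "iPhone" in user_agent


def head_tags(user_agent: str) -> str:
    """Return the <head> fragment: a stylesheet link and, for iPhones, a viewport tag."""
    if is_iphone(user_agent):
        return ('<meta name="viewport" content="width=320" />\n'
                f'<link rel="stylesheet" href="{IPHONE_CSS}" />')
    return f'<link rel="stylesheet" href="{DEFAULT_CSS}" />'


# An iPhone-style User-Agent string, used here only as sample input.
ua = ("Mozilla/5.0 (iPhone; U; CPU like Mac OS X; en) AppleWebKit/420+ "
      "(KHTML, like Gecko) Version/3.0 Mobile/1A543a Safari/419.3")
print(head_tags(ua))
```

The iPhone stylesheet is where the sidebar gets pushed below the content; the viewport tag is what stops the page from being rendered zoomed out.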

Internal Blogs and Wikis

I was listening to an Inflection Point podcast from the Burton Group entitled Enterprise 2.0: Overcoming Fear of Blogs and thought that this was a subject that I hadn’t posted anything about. The topic of the podcast was the use of blogs and wikis internally within the enterprise, rather than enterprise workers blogging to the outside world, which is a different subject.

The interviewer, Mike Gotta, made the comment that blogs within the enterprise need to be purposeful, and that there’s a fear that they will simply be a soap box or a place to rant. To any manager or executive that has a fear about them becoming a place for employees to rant, you’re looking at the wrong issue. If employees feel a need to rant, they’ll be doing it in the hallways, break rooms, and cafeteria. If anything, blogging could bring some of this out in the open and allow something to be done about it. This is where I think there is real value for blogging, wikis, etc. in the enterprise. I recently had a post titled Transparency in Architecture that talked about a need for projects to make their architectural decisions transparent throughout the process (and the same holds true for enterprise architects and their development of reference architectures and strategies). Blogs and wikis create an opportunity to increase the transparency in enterprise efforts. I’ve worked in environments that had a “need to know” policy for legitimate reasons, but for most enterprises, this shouldn’t be the case. When information isn’t shared, it may be a symptom of lack of trust in the enterprise, which can be disastrous for SOA or anything else IT does.

Blogs and wikis are about communication and collaboration. Communication and collaboration help to build up trust. That being said, you do need to be respectful of the roles and responsibilities within the organization. There’s a time and place for debate, and there’s a time and place for adherence to policies. I’ve been in the trenches and had my fair share of times where I didn’t agree with a decision that was made by people above me in the organization. I also understood that it wasn’t my decision to make. In general, however, I believe that things would have been better if there had been transparency behind those decisions. That’s important because the principles that guide those decisions wind up influencing the decisions that are made further down the line. If the staff has no visibility into those principles, how can they be expected to make good decisions? The end result of that situation is increased distrust on both sides. It’s not easy to do, because once you expose that information, it leaves you open to debate, which is where mutual trust must come into play. Betrayals of trust, either by not disclosing information or by misusing information that was disclosed, can be detrimental.

In general, I’m an optimist and I give people the benefit of the doubt. As a result, I’m all for transparency and the use of blogs and wikis in the enterprise. Will blogs and wikis dramatically change the way IT works? Probably not, but they certainly have the potential to improve morale, which plays a role in improving productivity.

Another Greg the Architect

A new Greg the Architect video has been posted, called Focus Pocus. I wasn’t aware of this before, but it’s now known that TIBCO is the driving force behind this series of videos. Anyway, watch Greg and his boss visit an SOA conference at “the Biscotti Center” in “beautiful, exotic, tropical … downtown San Francisco.”

SOA Insights Podcast

Dana Gardner invited me to be a part of his Briefings Direct SOA Insights podcast a while back, and the first episode I was part of is now available. Topics included SOA hype, the SoftwareAG/WebMethods acquisition, and the role of Web 2.0 and wikis in SOA. Details are available here, or you can subscribe to the podcast via iTunes here.

I’ve always enjoyed listening to panel discussions, and now it’s great to have the opportunity to be a regular part of one. If there are particular topics you’d like to hear discussed, feel free to drop me a line and I’ll pass along the suggestion. Dana’s got a great group of people that participate on these sessions.

To ESB or not to ESB

It’s been a while since I posted something more infrastructure related. Since my original post on the convergence of infrastructure in the middle was reasonably popular, I thought I’d talk specifically about the ESB: Enterprise Service Bus. As always, I hope to present a pragmatic view that will help you make decisions on whether an ESB is right for you, rather than coming out with a blanket opinion that ESBs are good, bad, or otherwise.

From information I’ve read, there are at least five types of ESBs that exist today:

- Formerly EAI, now ESB

- Formerly BPM, now ESB

- Formerly MOM/ORB, now ESB

- The WS-* Enabling ESB

- The ESB Gateway

Formerly EAI, now ESB

There’s no shortage of ESB products that people will claim are simply rebranding efforts of products formerly known as EAI tools. The biggest thing to consider with these is that they are developer tools. Their strengths are going to lie in their integration capabilities, typically in mapping between schemas and having a broad range of adapters for third party products. There’s no doubt that these products can save a lot of time when working with large commercial packages. At the same time, however, this would not be the approach I’d take for a load balancing or content-based routing solution. Those are typically operational concerns where we’d prefer to configure rather than code. Just because you have a graphical tool doesn’t mean it doesn’t require a developer to use it.
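As a small illustration of what "configure rather than code" can mean for content-based routing, here’s a Python sketch, assuming XML messages and a routing table that operations staff could maintain as data. The routing rules, element names, and `example.com` endpoints are hypothetical; the routing function itself stays fixed while the table changes.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Hypothetical routing table: (element path, expected value, destination).
# In practice this would live in a config file owned by operations.
ROUTES: List[Tuple[str, str, str]] = [
    ("./region", "EU", "http://orders-eu.example.com/submit"),
    ("./region", "US", "http://orders-us.example.com/submit"),
]
DEFAULT_DESTINATION = "http://orders.example.com/submit"


def route(message_xml: str,
          routes: List[Tuple[str, str, str]] = ROUTES,
          default: str = DEFAULT_DESTINATION) -> str:
    """Return the destination for a message by checking configured rules in order."""
    root = ET.fromstring(message_xml)
    for path, expected, destination in routes:
        node = root.find(path)
        if node is not None and (node.text or "").strip() == expected:
            return destination
    return default


print(route("<order><region>US</region></order>"))  # http://orders-us.example.com/submit
```

Adding a new region is an edit to `ROUTES`, not a change to `route`, which is the operational model the paragraph argues for.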

Formerly BPM, now ESB

Many ESBs are adding orchestration capabilities, a domain typically associated with BPM products. In fact, many BPM products are simply rebranded EAI products, and there’s at least one I know of that is now also marketed as an ESB. There’s definitely a continuum here, and the theme is much the same: a graphical modeling tool that is schema driven, possibly with built-in adapter technology, definitely with BPEL import/export, but still requiring a developer to properly leverage it.

Both of these categories are great if your problem set centers on orchestration and building new services more efficiently. If you’re interested in sub-millisecond operations for security, routing, throttling, instrumentation, etc., recognize that these solutions are primarily targeted toward business processing and integration, not your typical “in-the-middle” non-functional concerns. It is certainly true that, from a modeling perspective, the graphical representation of a processing pipeline is well-suited to the non-functional concerns, but it’s the execution performance that really matters.

Formerly MOM/ORB, now ESB

These products bring things much closer to the non-functional world, although as more and more features are thrown under the ESB umbrella, they may start looking more like one of the first two approaches. In both cases (MOM and ORB), these products try to abstract away the underlying transport layer. In the case of MOM, all service communication is put onto some messaging backbone. The preferred model is to leverage agents/adapters on both endpoints (e.g. having a JMS client library for both the consumer and provider), potentially not requiring any centralized hub in the middle. The scalability of messaging systems certainly can’t be denied; however, the bigger concern is whether agents/adapters can be provided for all systems. All of the products will certainly have a fallback position of a gateway model via SOAP/HTTP or XML/HTTP, but you lose capabilities in doing so. For example, having endpoint agents can ensure reliable message delivery. If one endpoint doesn’t have an agent, you’ll only be reliable up to the gateway under control of the ESB. In other words, you’re only as good as your weakest link. One key factor in looking at this solution is the heterogeneity of your IT environment. The more varied systems you have, the greater the challenge in finding an ESB that supports all of them. In an environment where performance is critical, these may be good options to investigate, knowing that a proprietary messaging backbone can yield excellent performance compared with trying to leverage something like WS-RM over HTTP. Once again, the operational model must be considered. If you need to change a contract between a consumer and a provider, the non-functional concerns are enforced at the endpoint adapters. These tools must have a model where those policies can be pushed out to the nodes, rather than requiring a developer to change some code or model and go through a development cycle.
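The last point, pushing policies out to endpoint agents instead of redeploying code, can be sketched roughly as follows. This is a minimal Python illustration, not any product’s API: `Policy`, `EndpointAgent`, `PolicyManager`, and the policy fields are hypothetical, and the enforcement logic inside the agents is left out.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Policy:
    # Hypothetical non-functional settings for one consumer/provider contract.
    reliable_delivery: bool
    max_messages_per_second: int


@dataclass
class EndpointAgent:
    # Stand-in for a MOM client library/adapter running next to an endpoint.
    name: str
    policies: Dict[str, Policy] = field(default_factory=dict)

    def apply(self, contract_id: str, policy: Policy) -> None:
        # An enforcement hook (not shown) would read this table at runtime,
        # so changing it requires no code change or redeploy.
        self.policies[contract_id] = policy


@dataclass
class PolicyManager:
    agents: List[EndpointAgent] = field(default_factory=list)

    def register(self, agent: EndpointAgent) -> None:
        self.agents.append(agent)

    def push(self, contract_id: str, policy: Policy, targets: List[str]) -> None:
        """Change a contract once, centrally, and deliver it to the named agents."""
        for agent in self.agents:
            if agent.name in targets:
                agent.apply(contract_id, policy)


manager = PolicyManager()
consumer = EndpointAgent("order-entry")
provider = EndpointAgent("billing")
bystander = EndpointAgent("hr")
for agent in (consumer, provider, bystander):
    manager.register(agent)

manager.push("order-entry->billing",
             Policy(reliable_delivery=True, max_messages_per_second=50),
             targets=["order-entry", "billing"])
```

Both ends of the contract receive the same policy; if one end had no agent at all, there would be nothing to push to, which is the weakest-link problem described above.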

The WS-* Enabling ESB

This category of product is the ESB that strives to allow an enterprise to have a WS-* based integration model. Unlike the MOM/ORB products, they probably only require the use of agents on the service provider side, essentially to provide some form of service enablement capability. In other words, a SOAP stack. While 5 years ago this may have been very important, most major platforms now provide SOAP stacks, and many of the large application vendors provide SOAP-based integration points. If you don’t have an enterprise application server, these may be worthwhile options to investigate, as you’ll need some form of SOAP stack. Unlike simply adding Axis on top of Tomcat, these options may provide the ability to have intercommunication between nodes, effectively like a clustered application server. In case it isn’t apparent, however, these options are very developer focused. Service enablement is a development activity. Also, like the MOM/ORB solutions, these products can operate in a gateway mode, but you do lose capabilities. Like the BPM/EAI solutions, these products are focused on building services, so there’s a good chance that they may not perform as well for the “in-the-middle” capabilities typically associated with a gateway.

The ESB Gateway

Finally, there are ESB products that operate exclusively as a gateway. In some cases, this may be a hardware appliance, it could be software on commodity hardware, or it could be a software solution deployed as a stand-alone gateway. While the decision between a smart-network and a smart-node approach is frequently a religious one, there are certainly scenarios where a gateway makes sense. Perimeter operations are the most common, but it’s also true that many organizations don’t employ clustering technologies in their application servers, and instead leverage front-end load balancers. If your needs focus exclusively on the “in-the-middle” capabilities, rather than on orchestration, service enablement, or integration, these products may provide the operational model (configure, not code) and the performance you need. Unlike an EAI-rooted system, an appliance is typically not going to provide an integrate-anything-to-anything model. Odds are that it will provide excellent performance along a smaller set of protocols, although there are integration appliances that can talk to quite a number of standards-based systems.
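Rate throttling is a typical example of the “in-the-middle” capabilities a gateway handles. Here’s a minimal Python sketch of per-consumer throttling driven by configuration, using a token bucket. The token bucket is my choice of technique for illustration, not necessarily what any particular gateway or appliance uses, and the consumer names and limits are hypothetical.

```python
import time
from typing import Callable, Dict, Optional


class TokenBucket:
    """Allows `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    """Per-consumer throttling built from a config dict rather than code changes."""

    def __init__(self, limits: Dict[str, Dict[str, float]],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.buckets = {consumer: TokenBucket(cfg["rate"], cfg["burst"], clock)
                        for consumer, cfg in limits.items()}

    def admit(self, consumer: str) -> bool:
        # Unknown consumers are rejected outright.
        bucket: Optional[TokenBucket] = self.buckets.get(consumer)
        return bucket is not None and bucket.allow()


# A fake clock makes the behavior easy to follow.
now = [0.0]
gw = Gateway({"partner-a": {"rate": 1.0, "burst": 2}}, clock=lambda: now[0])
print([gw.admit("partner-a") for _ in range(3)])  # [True, True, False]
now[0] += 1.0
print(gw.admit("partner-a"))  # True
print(gw.admit("unknown"))  # False
```

Changing a partner’s limit means editing the `limits` configuration, which fits the operational staff model rather than a development cycle.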

Final words

As I’ve stated before, the number one thing is to know what capabilities you need first, before ever looking at ESBs, application servers, gateways, appliances, web services management products, or anything else. You also need to know what the operational model is for those capabilities. Are you okay with everything being a development activity and going through a code release cycle, or are there things you want to be fully controlled by an operational staff that configures infrastructure according to a standard change management practice? There’s no right or wrong answer; it all depends on what you need. Orchestration may be the number one concern for some. Service enablement of legacy systems may be the top concern for another. Another organization may need security and rate throttling at the perimeter. Hopefully this post will help you in your decision-making process. If I missed some of the ESB models out there or if you disagree with this breakdown, please comment or trackback. This is all about trying to help end users better understand this somewhat nebulous space.

Most popular posts to date

It’s funny how these syndicated feeds can be just like syndicated TV. I’ve decided to leverage Google Analytics and create a post with links to the most popular entries since January 2006. My blog isn’t really a diary of activities, but a collection of opinions and advice that hopefully remain relevant. While the occasional Google search will lead you to many of these, most have long since dropped off the normal RSS feed. So, much like long-running TV shows that clip together a “best of” episode, here’s my “best of” entry according to Google Analytics.

- Barriers to SOA Adoption: This was originally posted on May 4, 2007, and was in response to a ZapThink ZapFlash on the subject.

- Reusing reuse…: This was originally posted on August 30, 2006, and discusses how SOA should not be sold purely as a means to achieve reuse.

- Service Taxonomy: This was originally posted on December 18, 2006 and was my 100th post. It discusses the importance and challenges of developing a service taxonomy.

- Is the SOA Suite good or bad? This was originally posted on March 15, 2007 and stresses that whatever infrastructure you select (suite or best-of-breed), the important factor is that it fit within a vendor-independent target architecture.

- Well defined interfaces: This post is the oldest one on the list, from February 24, 2006. It discusses what I believe is the important factor in creating a well-defined interface.

- Uptake of Complex Event Processing (CEP): This post from February 19, 2007 discusses my thoughts on the pace that major enterprises will take up CEP technologies and certainly raised some interesting debate from some CEP vendors.

- Master Metadata/Policy Management: This post from March 26, 2007 discusses the increasing problem of managing policies and metadata, and the number of metadata repositories that may exist in an enterprise.

- The Power of the Feedback Loop: This post from January 5, 2007 was one of my favorites. I think it’s the first time that a cow-powered dairy farm was compared to enterprise IT.

- The expanding world of the “repistry”: This post from August 25, 2006 discusses registries, repositories, CMDBs and the like.

- Preparing the IT Organization for SOA: This is a June 20, 2006 response to a question posted by Brenda Michelson on her eBizQ blog, which was encouraging a discussion around Business Driven Architecture.

- SOA Maturity Model: This post on February 15, 2007 opened up a short-lived debate on maturity models, but this is certainly a topic of interest to many enterprises.

- SOA and Virtualization: This post from December 11, 2006 tried to give some ideas on where there was a connection between SOA and virtualization technologies. It’s surprising to me that this post is in the top 5, because you’d think the two would be an apples and oranges type of discussion.

- Top-Down, Bottom-Up, Middle-Out, Outside-In, Chicken, Egg, whatever: Probably one of the longest titles I’ve had, this post from June 6, 2006 discusses the many different ways that SOA and BPM can be approached, ultimately stating that the two are inseparable.

- Converging in the middle: This post from October 26, 2006 discusses my whole take on the “in the middle” capabilities that may be needed as part of SOA adoption along with a view of how the different vendors are coming at it, whether through an ESB, an appliance, EAI, BPM, WSM, etc. I gave a talk on this subject at Catalyst 2006, and it’s nice to see that the topic is still appealing to many.

- SOA and EA… This post on November 6, 2006 discussed the perceived differences between traditional EA practitioners and SOA adoption efforts.

Hopefully, you’ll give some of these older items a read. Just as I encouraged in my feedback loop post, I do leverage Google Analytics to see what people are reading, and to see what items have staying power. There’s always a spike when an entry is first posted (e.g. my iPhone review), and links from other sites always boost things up. Once a post has been up for a month, it’s good to go back and see what people are still finding through searches, etc.

My iPhone Review

As previously mentioned, I was the first iPhone buyer at the AT&T store nearest to my house. While there’s no shortage of iPhone reviews out there, I’m not a professional reviewer, so my focus is on how well it does the things I need it to do.

Choosing a model

I chose the 8GB model, simply because when I purchased the original iPod, I had the 5GB model and spent about a year managing songs because I kept running out of space. While 8GB clearly isn’t large enough for my entire music library, that’s why I have my other iPod. I planned on using the iPhone solely for podcasts and video. Since I have been traveling quite a bit, I needed one that could comfortably store about 2-3 hours of video. To give you an idea of space requirements, an hour-long TV show with the commercials edited out tends to be around 250 MB at 320×200. A two-hour movie at 640×400 is about 1.33 GB. The decision I’ll need to make is how much music to put on it. I’ve got over 2200 songs in my full library. I put together a playlist of my favorite artists, which wound up being 479 songs at 3.01 GB. The normal collection of daily/weekly updating podcasts took up about 300 MB, so clearly, the music library will be where I need to do some creative management. If you don’t already have an iPod, the smaller storage will be an issue if you want to carry your full music library. Given this requirement, I think a nice improvement would be a means to designate a certain amount of space for music and then tell iTunes to fill it with random music, just as you can do with the shuffle.
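Here’s a rough Python sketch of the feature wished for above: compute a music budget from the other content, then fill it with random songs. The video and podcast sizes come from the figures above (one 1.33 GB movie plus one 250 MB show, 300 MB of podcasts), treating 1 GB as 1000 MB for simplicity. The 8000 MB capacity is a nominal assumption, since actual usable space is lower. The song library is hypothetical, at roughly the 6 MB per song implied by 479 songs taking 3.01 GB. This is not how iTunes works; it’s just the idea.

```python
import random
from typing import List, Optional, Tuple


def music_budget_mb(capacity_mb: int, video_mb: int, podcasts_mb: int,
                    reserve_mb: int = 0) -> int:
    """Space left for music after video, podcasts, and an optional reserve."""
    return max(0, capacity_mb - video_mb - podcasts_mb - reserve_mb)


def fill_randomly(library: List[Tuple[str, int]], budget_mb: int,
                  seed: Optional[int] = None) -> List[Tuple[str, int]]:
    """Visit songs in random order, keeping each one that still fits in the budget."""
    rng = random.Random(seed)
    shuffled = list(library)
    rng.shuffle(shuffled)
    chosen, used = [], 0
    for title, size_mb in shuffled:
        if used + size_mb <= budget_mb:
            chosen.append((title, size_mb))
            used += size_mb
    return chosen


video_mb = 1330 + 250  # one two-hour movie plus one hour-long show
budget = music_budget_mb(8000, video_mb, 300)  # nominal capacity, an assumption
print(budget)  # 6120

library = [(f"song-{i}", 6) for i in range(2200)]  # hypothetical ~6 MB songs
picked = fill_randomly(library, budget, seed=42)
print(len(picked))  # 1020
```

By this rough math the 3.01 GB favorites playlist fits easily, while the full 2200-song library (about 13 GB at this size) does not, which is why a random fill to a fixed budget would be handy.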

Activation

Activation went without a hitch for me. I had no issues whatsoever. I transferred my existing phone number from T-Mobile, and even that only took about 15 minutes to complete. So, my experience here was very, very good. While I had my laptop with me, I waited until I got home to activate, as my battery was down to about 6% after using it while I was waiting in line. I didn’t want to take a chance on having it go dead during activation.

Signal Strength and Call Quality

T-Mobile’s signal strength in my house has always been weak. While I’m in a coverage zone, the geography around my house doesn’t lend itself to a strong T-Mobile signal unless I’m on the top floor. AT&T’s signal likewise weakens as I go downstairs, but it is far stronger. The first call I made (while on the top floor) was crystal clear, a significant improvement over T-Mobile. For reference, I was previously using a Motorola V360.

The Calling Interface

I found myself wanting an Address Book icon on the home page. To get to your contacts, you first have to go into phone mode and then open your contacts. Once you’re there, making a call is a snap. Cycling through my contact list on my V360 was a slow, painful process; the iPhone’s UI makes it easy. One of the first things I did after using the iPhone once was to put a lot more contacts into the group that I sync with my phone, because it’s so much more manageable.

The second thing I really liked was the ability to hold a conversation on the speakerphone while running other applications. I was talking to my mom and needed to write something down. She asked if I had a pencil, but instead I tried something out: I put her on speakerphone, hit the Home button, and then launched Notes. The whole time, I could keep the conversation going, and I just typed as she talked. This was very cool.

The On-Screen Keyboard

I think the pre-release reviews were right on with this one. It takes a bit of getting used to, but overall it’s far better than I expected. I think with some practice, I’ll be nearly as fast with it as with a tactile keyboard. The visual and audio cues provide more than enough feedback. The only slightly awkward thing is that the automatic word correction is accepted when you hit the space bar. If you’ve typed a word and it is suggesting something else, you have a problem if you just hit space to keep typing. Perhaps this will encourage us techies to stop talking in acronyms, because that’s typically where you’ll run into this situation!

Connecting to the Network

Configuring it to use my WiFi network was a bit tricky. It came up with a list of networks to choose from, but I only saw my neighbors’. My access point doesn’t broadcast its name, so I needed to type it in manually. The popup that appears when it detects access points doesn’t let you do this; you have to go to the home menu and then to Settings to enter a network name manually. This is a one-time operation, as it remembers past settings, but it would have been more convenient to have an “Other…” option in the default popup.

Speed has not been an issue for me. I’ve brought up web pages (ESPN) and Google Maps on the EDGE network at the local Dairy Queen as well as at a friend’s house, and the speed was acceptable. It’s certainly not as fast as my Sprint Broadband modem (U720), but for what I need the iPhone for, it was fine. Incidentally, I had Google Maps in Satellite mode, so I was probably downloading more data than normal map usage requires, and it was still fine.

The iPod

The iPod capabilities are outstanding. When people complain about its higher price compared to other smartphones, you really need to consider the interface for watching videos and listening to music. Besides the truly outstanding screen, which makes watching video far more enjoyable than on my current Video iPod, the overall experience is just great. This alone, I think, justifies the price difference between a typical smartphone and the iPhone.

Surfing the Web

I’m really surprised at how good the experience is. The logic they’ve put in place to scale the display, even when fully zoomed out, is outstanding, and the zooming experience is even better. The only trick is when an ad-heavy page is being displayed. You’re best off letting the whole page load, doing a nice slow zoom-in, and then tapping what you need. On one occasion I wanted to zoom, tapped too quickly, and inadvertently clicked on an ad because the page was zoomed out. One slick feature I like is how the Safari controls disappear once the page is fully loaded and only show up when you pan back to the top of the page. This reserves more screen space for working with the page.

I would like to see more pages optimized for the iPhone. For example, I frequently go to the MLB scores page on ESPN.com. While it’s fully usable on the iPhone, a version specifically designed for the iPhone interface would be even better; in landscape mode, for instance, the screen could be filled with the line score for one game. I expect we’ll see more and more of this as the iPhone user agent starts showing up in people’s logs.
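For site owners wondering whether iPhone traffic is worth optimizing for, a rough first check is to count it in the access logs. Here’s a minimal Python sketch, assuming an Apache-style “combined” log format where the user agent is the last double-quoted field on each line; `iphone_share` is a hypothetical helper name. It only checks whether the user agent contains the substring “iPhone”, which is a heuristic rather than a full user-agent parser.

```python
import re

# Assumes the combined log format, where the user agent is the last
# double-quoted field on the line.
LAST_QUOTED = re.compile(r'"([^"]*)"\s*$')


def iphone_share(lines):
    """Return (iphone_hits, parsed_lines) for an iterable of log lines."""
    iphone = total = 0
    for line in lines:
        match = LAST_QUOTED.search(line)
        if match is None:
            continue  # skip lines that don't end with a quoted field
        total += 1
        if "iPhone" in match.group(1):  # simple substring heuristic
            iphone += 1
    return iphone, total
```

Usage would be something like `with open("access.log") as f: hits, total = iphone_share(f)`, where the file name is just a placeholder for your own log.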

Camera

The camera has performed very well for me. Despite not having a flash, the quality of pictures taken indoors at night with normal interior lighting has been very good. Once again, the integration and ability to send pictures to contacts was far less clunky than on my old Motorola phone.

Overall

Overall, I’m very happy with my purchase. I think it’s worth every penny I paid for it. Just as with the original iPod, it’s not that Apple is providing some particular functionality that other phones don’t have; it’s the way Apple has made that functionality available to the end user that makes the difference. This is something you really can’t put into words; you have to use it to understand it. I think Apple’s videos have done a good job of showing this. If you just look at a feature list and compare checkmarks, you won’t really understand the difference. About the only knock I have on the user interface is that I often find myself wishing for a “back” button. For example, if you click on a link in email, it opens Safari. To get back to email, you need to go to the home menu and then to email. I’d prefer that closing the Safari window, or tapping a back arrow, would take me back to email. Outside of this, the user experience is simply outstanding. Yes, I’d like to see an AIM or Jabber client on it, but I expect that will come. On a five-star scale, I’d definitely give the device 5 stars. Is there room for improvement? Yes. Can this phone be considered an elite smartphone? Yes.

Woo hoo! Thank you AT&T store!

It’s 4:03 p.m. here in the central time zone, and thanks to living near a rural community (although only 20-30 minutes from downtown St. Louis), I am NUMBER ONE in line to get my iPhone. Very cool. There’s a total of four of us in line right now. Here’s a picture.

Update: It’s 5:33 p.m. and we have a total of 7 people. It was quite funny when the police drove by as they had been informed to expect potentially large crowds. I feel no sympathy for the 100+ people waiting at the Apple store at the mall…

Update number two: I am editing this from my iPhone! Cool!

Transparency in Architecture

Brandon Satrom posted a blog last week asking the question, “Is Enterprise Architecture Declarative or Imperative?” In clarifying the question, he pointed out that declarative programming is where a developer tells a system what to do but leaves it to the system to decide how best to do it, while imperative programming is where the system is told both the what and the how. He goes on to argue that EA should be declarative, but working closely with a group of Solution Architects (much like I presented in my People! Policies! Process! post) who take the visions and translate them into execution.

I agree that for the most part, EA is a declarative activity, although I wouldn’t go so far as to say that the path to execution falls to solution architects. If EAs merely define the future state but don’t define the executable actions (albeit at a higher level; they’re not providing project plans), they’ll likely become an ivory tower. Personally, I think a model of increasing constraints is probably a more accurate depiction of what goes on. Through reference models and architectures, a certain set of constraints is established that projects must adhere to. Within the project, additional constraints are established based upon the solution architecture, then the class design, and so on. You could argue that each set of constraints represents a declaration of what things should be.

As soon as you establish constraints, the subject of governance comes up. I had an interesting discussion with someone today on this topic, and he made the point that projects intended to operate within the constraints established by EA must be transparent. Rather than assign someone from the security force to watch over the project team with an iron fist, simply make the architectural decisions visible so that anyone can take a look at any point. This made perfect sense to me. I’ve worked at a company that took a similar approach to financial governance on projects: reports were generated every week, and the budget watchers could easily determine whether things were compliant. It struck me that this would be a good approach for architectural governance as well. Of course, this implies that there needs to be a way to actually see the architecture from the outside, which is another story. Visibility into the source code does not equate to visibility into the solution architecture. What are your thoughts on this?
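To make the programming distinction concrete, here’s a small Python sketch with made-up order data. The imperative version spells out how to get the answer (loop, test, collect, sort); the declarative version states what is wanted in SQL and leaves the how to SQLite’s query planner. The function names and data are purely illustrative.

```python
import sqlite3

# Made-up sample data: (customer, order amount).
ORDERS = [("alice", 120), ("bob", 40), ("carol", 75), ("dave", 200)]


def big_orders_imperative(orders, threshold):
    # Imperative: we spell out *how*: iterate, test, collect, then sort.
    result = []
    for customer, amount in orders:
        if amount >= threshold:
            result.append(customer)
    result.sort()
    return result


def big_orders_declarative(orders, threshold):
    # Declarative: we state *what* we want; SQLite decides how to get it.
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE orders (customer TEXT, amount INTEGER)")
        conn.executemany("INSERT INTO orders VALUES (?, ?)", orders)
        rows = conn.execute(
            "SELECT customer FROM orders WHERE amount >= ? ORDER BY customer",
            (threshold,),
        ).fetchall()
    finally:
        conn.close()
    return [customer for (customer,) in rows]
```

Both functions return the same answer; the difference is who owns the decisions about execution, which is exactly the question Brandon is asking of EA.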
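One way to picture the increasing-constraints model is as layers of checks, where each layer adds declarations and never removes the ones above it. The following Python sketch is only an illustration under that assumption; the layer names, design attributes, and constraints are all hypothetical, not a real reference architecture.

```python
from typing import Callable, Dict, List, Tuple

Design = Dict[str, str]
Check = Callable[[Design], bool]

# Hypothetical layers, from enterprise reference architecture down to class
# design. Each layer adds constraints; none removes earlier ones.
LAYERS: List[Tuple[str, Dict[str, Check]]] = [
    ("enterprise", {
        "approved service protocol": lambda d: d.get("protocol") in {"SOAP", "REST"},
    }),
    ("solution", {
        "uses enterprise identity service": lambda d: d.get("auth") == "enterprise-sso",
    }),
    ("class design", {
        "data access through repositories": lambda d: d.get("data_access") == "repository",
    }),
]


def violations(design: Design, layers=LAYERS) -> List[Tuple[str, str]]:
    """List the (layer, constraint) pairs the design fails, across all layers."""
    return [
        (layer, name)
        for layer, checks in layers
        for name, check in checks.items()
        if not check(design)
    ]


def acceptable(candidates, depth, layers=LAYERS):
    """Designs satisfying the first `depth` layers; more layers, fewer designs."""
    return [d for d in candidates if not violations(d, layers[:depth])]
```

Each added layer can only shrink the set of acceptable designs, which is the sense in which every level is a declaration of what things should be rather than a step-by-step plan.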
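As a rough illustration of the weekly-report idea applied to architecture, here’s a Python sketch that publishes each project’s recorded decisions alongside a status. It assumes, hypothetically, that decisions are captured as simple (project, topic, choice) records and that standards are a map from topic to approved choices; the projects, topics, and products shown are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    # Hypothetical record of one architectural decision made by a project.
    project: str
    topic: str
    choice: str


# Hypothetical enterprise standards: topic -> approved choices.
STANDARDS = {
    "messaging": {"JMS", "HTTP"},
    "database": {"Oracle", "PostgreSQL"},
}


def compliance_report(decisions, standards=STANDARDS):
    """Return one report line per decision, sorted by project and topic."""
    lines = []
    for d in sorted(decisions, key=lambda d: (d.project, d.topic)):
        approved = standards.get(d.topic)
        if approved is None:
            status = "NO STANDARD"  # nothing to check against yet
        elif d.choice in approved:
            status = "OK"
        else:
            status = "REVIEW"  # flag for a conversation, not a veto
        lines.append(f"{d.project:<10} {d.topic:<10} {d.choice:<12} {status}")
    return lines
```

The point isn’t the checking logic, which is trivial; it’s that the report is generated from decisions the project has made visible, so anyone can look at any time without someone hovering over the team.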

Another great conversation…

In the latest Technometria podcast from IT Conversations, Phil, Ben, and Scott speak with Robert Glushko from U.C. Berkeley and OASIS on documents, data, XML, semantics, etc. Sometimes it’s good to just listen to smart people have a conversation, as you can learn a lot. This qualified as one of those conversations for me!